
In today’s fast-paced digital world, the debate about Edge Computing vs Cloud Computing is heating up, with both technologies offering unique benefits.

Imagine this: You’re in a self-driving car, cruising down the highway, when a pedestrian suddenly steps onto the road. Your car has to decide instantly: brake hard or swerve? It can’t afford to wait for data to be shipped off to some remote server and back. The decision has to be made in real time, on the spot.

This is the wonder of Edge Computing, where data is processed at the “edge” of the network, close to where it is generated and used.

But now picture this: You’re analyzing massive amounts of data from all over the world, such as streaming videos, social media posts, and business metrics. That calls for the intensive computing power of Cloud Computing, in which data is stored and processed in large, centralized data centers.

So, what happens when these two forces collide? The answer could shape the future of technology. Let’s dive into the showdown between Edge Computing vs. Cloud Computing and explore their strengths, weaknesses, and how they complement each other in the digital age.

What Are We Talking About?

What is Edge Computing?

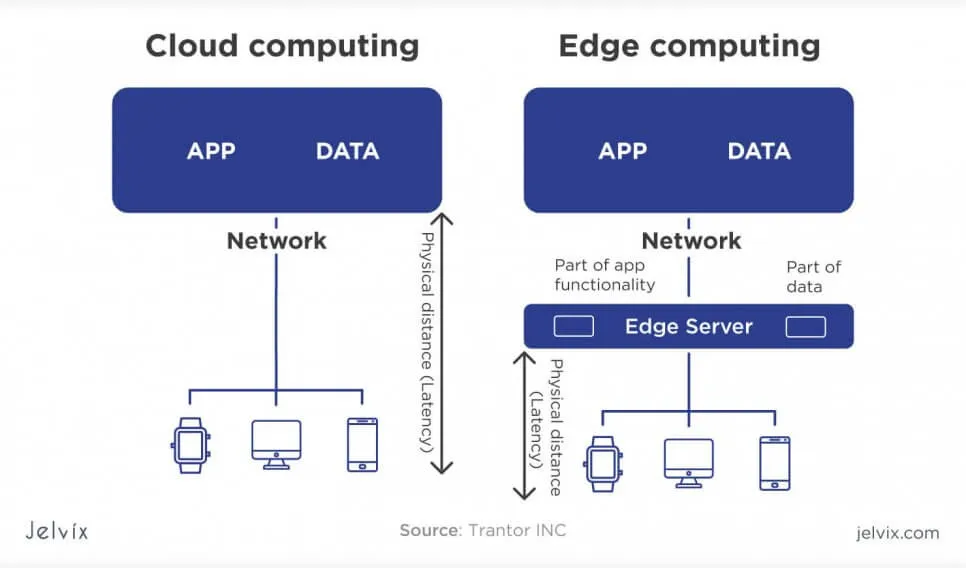

Edge computing is all about localization. It involves processing data close to its source, whether that’s a device, a sensor, or even a smart vehicle. Instead of sending data to a remote data center, edge computing processes it on-site, where it is collected. This reduces latency (the delay before a data transfer begins) and increases speed, making edge computing well suited to real-time decision-making.

What is Cloud Computing?

Cloud computing, on the other hand, revolves around centralization. Data is transmitted from user devices to large, remote data centers for processing and storage. This model lets organizations grow their infrastructure without the high upfront cost of buying physical hardware. The cloud is widely used for applications that demand heavy computation, very large-scale storage, or both.

So in the matchup of Edge Computing vs Cloud Computing, which one wins? Let’s break it down.

5 Key Differences of Edge Computing vs Cloud Computing

When comparing Edge Computing vs Cloud Computing, it’s important to understand that the two serve distinct purposes but often complement each other in modern tech infrastructure. Below, we explore 5 key differences that highlight how these two technologies stack up against each other.

- Latency: The Need for Speed

When it comes to responsiveness, latency is a key issue in the comparison of Edge Computing vs Cloud Computing.

Edge Computing takes the win here by processing data at the source. The result is low latency, which makes it suitable for real-time applications. Gartner estimates that by 2025, 75% of enterprise data will be processed outside centralized cloud data centers, a shift that will reshape how low-latency, real-time applications are built.

Cloud Computing, although powerful and efficient, suffers from higher latency because data must always travel to and from a central server. A study by Cisco found that cloud computing is slower than edge computing because of this travel time, a difference that is especially noticeable in latency-sensitive applications such as augmented reality and autonomous vehicles.
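To make the latency gap concrete, here is a back-of-the-envelope sketch in Python tied to the self-driving scenario from the introduction. The vehicle speed and all timings are hypothetical placeholders, not figures from the studies cited above; the point is only how a network round trip adds to the time before a decision is available.

```python
# All figures are hypothetical placeholders, not measurements.
SPEED_KMH = 100.0            # assumed vehicle speed
CLOUD_ROUND_TRIP_MS = 100.0  # assumed network round trip to a remote data center
CLOUD_PROCESSING_MS = 10.0   # assumed inference time on a cloud server
EDGE_PROCESSING_MS = 20.0    # assumed inference time on the car's own hardware


def decision_delay_ms(processing_ms: float, network_round_trip_ms: float = 0.0) -> float:
    """Delay before a decision is available: network round trip (if any) plus compute."""
    return network_round_trip_ms + processing_ms


def distance_travelled_m(speed_kmh: float, delay_ms: float) -> float:
    """Metres covered at constant speed while waiting `delay_ms` milliseconds."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * delay_ms / 1000.0


cloud_delay = decision_delay_ms(CLOUD_PROCESSING_MS, CLOUD_ROUND_TRIP_MS)
edge_delay = decision_delay_ms(EDGE_PROCESSING_MS)

print(f"cloud: {cloud_delay:.0f} ms, {distance_travelled_m(SPEED_KMH, cloud_delay):.2f} m travelled")
print(f"edge:  {edge_delay:.0f} ms, {distance_travelled_m(SPEED_KMH, edge_delay):.2f} m travelled")
```

With these assumed numbers, the car covers about 3 m before a cloud-based answer arrives, versus roughly 0.6 m when the decision is made on board.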

- Bandwidth: The Flow of Data

Bandwidth is a critical issue for the comparison of Edge Computing vs Cloud Computing.

Edge Computing reduces bandwidth load by performing computation at the edge. This minimizes network congestion and cuts bandwidth costs. As IDC pointed out, edge-based processing can optimize data transmission by up to 40%, a boon for places with poor network availability.

Cloud Computing needs high bandwidth to upload and download data to and from centralized servers. According to Synergy Research, cloud traffic is forecast to grow at a compound annual growth rate (CAGR) of 25-30% from 2020 to 2025, driving demand for even more bandwidth as more devices and applications move to cloud-based platforms.
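A minimal sketch of why edge processing can save bandwidth: instead of uploading every raw sensor reading, an edge node uploads a compact summary per time window. The sampling rate, window length, summary fields, and synthetic readings are all assumptions chosen for illustration.

```python
import json
import statistics

# Hypothetical setup: one sensor sampled at 100 Hz, summarized per one-minute window.
SAMPLE_RATE_HZ = 100
WINDOW_SECONDS = 60


def raw_payload(readings: list[float]) -> bytes:
    """What a cloud-only design would upload: every raw reading."""
    return json.dumps({"readings": readings}).encode("utf-8")


def edge_summary_payload(readings: list[float]) -> bytes:
    """What an edge node might upload instead: a compact per-window summary."""
    summary = {
        "count": len(readings),
        "mean": round(statistics.fmean(readings), 3),
        "min": min(readings),
        "max": max(readings),
    }
    return json.dumps(summary).encode("utf-8")


# Synthetic, deterministic readings so the example is reproducible.
readings = [20.0 + (i % 50) * 0.01 for i in range(SAMPLE_RATE_HZ * WINDOW_SECONDS)]

raw = raw_payload(readings)
summary = edge_summary_payload(readings)
saved = 1 - len(summary) / len(raw)
print(f"raw: {len(raw)} bytes, summary: {len(summary)} bytes, saved: {saved:.1%}")
```

The savings this toy example prints say nothing about real deployments, including the IDC figure above; in practice they depend on how much raw detail the cloud still needs.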

- Security: Keeping Data Safe

Security implications are critical in data processing when comparing Edge Computing vs Cloud Computing.

Edge Computing can enhance local security, since data is processed and stored close to its source. Nevertheless, 451 Research points out that edge computing introduces new security concerns, because it requires securely managing many edge devices, each of which could be compromised. Securing those devices can be complex, but it is crucial to protecting data in transit.

Cloud Computing is well known for its strong security, including encryption and multi-factor authentication. According to a 2023 report from IBM, 95% of cloud providers implement advanced security protocols to protect against breaches, but cloud computing still faces risks during data transfer. Encryption, firewalls, and secure channels help mitigate these risks, but the vast amount of traffic in the cloud makes it a potential target for cyberattacks.
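One common way to protect messages sent from edge devices against tampering in transit is to have each device sign them with a per-device key, for example with HMAC-SHA256 from Python's standard library. This sketch assumes a shared secret is already provisioned on the device and the backend; key distribution, rotation, and encryption are out of scope (HMAC provides integrity and authenticity, not confidentiality, so it is usually paired with TLS).

```python
import hashlib
import hmac

# Assumption: each edge device holds its own secret key, shared only with the backend.
DEVICE_KEY = b"example-per-device-secret"  # hypothetical; never hard-code real keys


def sign(payload: bytes, key: bytes) -> str:
    """Return the hex HMAC-SHA256 tag the device attaches to each message."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, tag: str, key: bytes) -> bool:
    """Backend-side check; compare_digest avoids leaking timing information."""
    expected = sign(payload, key)
    return hmac.compare_digest(expected, tag)


message = b'{"device": "sensor-17", "temp_c": 71.4}'
tag = sign(message, DEVICE_KEY)

print(verify(message, tag, DEVICE_KEY))  # True
# A payload altered in transit no longer matches the original tag.
print(verify(b'{"device": "sensor-17", "temp_c": 20.0}', tag, DEVICE_KEY))  # False
```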

- Scalability: Growing with Your Needs

When it comes to scaling your infrastructure, Edge Computing and Cloud Computing each take a different approach.

Edge Computing is less scalable than the cloud because growth requires expanding a localized infrastructure of devices and hardware. For example, research conducted by Intel indicated that adding edge devices in remote locations makes the infrastructure harder to manage. Even so, edge computing suits applications that need real-time processing but not large-scale scalability, such as smart devices or industrial automation.

Cloud Computing excels in scalability. According to Forbes, the market for cloud services will be $832.1 billion by 2025, as companies are looking for easy-to-scale infrastructure. With cloud computing, adding more storage, computing power, or bandwidth can be done in a matter of minutes, providing almost unlimited scalability without requiring physical hardware.
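Cloud platforms typically add capacity through autoscaling rules. As an illustration, the sketch below implements the core proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas * current metric / target metric). The function name and utilization figures are hypothetical, and real autoscalers add tolerances, cooldowns, and min/max limits that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_pct: int, target_pct: int) -> int:
    """Proportional scaling rule: desired = ceil(current * current_metric / target_metric).

    Utilization is passed as whole percentages (e.g. 90 for 90%). Apart from never
    going below one replica, real-world tolerances and bounds are left out.
    """
    if target_pct <= 0:
        raise ValueError("target_pct must be positive")
    return max(1, math.ceil(current_replicas * current_pct / target_pct))


# Hypothetical: 4 servers at 90% CPU against a 60% target, so scale out.
print(desired_replicas(4, 90, 60))  # 6
# Load drops to 15%, so scale back in.
print(desired_replicas(4, 15, 60))  # 1
```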

- Use Cases: The Right Tool for the Job

The right choice between Edge Computing and Cloud Computing varies greatly depending on the task.

Edge Computing is perfect for real-time applications. Gartner estimates that by 2025, 90% of new enterprise applications will be developed using edge-computing functionalities, particularly in domains such as IoT, autonomous vehicles, and robotics, where real-time decision-making is critical. In manufacturing, for example, edge computing enables predictive maintenance: machine data is analyzed on-site, so problems are detected early and downtime can be avoided.
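The predictive-maintenance idea can be sketched as a small detector running on the edge device: it keeps a rolling window of recent sensor readings and raises an alert when a new value deviates sharply from them, with no round trip to the cloud. The rolling z-score rule, window size, threshold, and vibration values are illustrative assumptions, a simple stand-in for the models real systems use.

```python
import statistics
from collections import deque


class EdgeAnomalyDetector:
    """Flags readings that deviate sharply from a rolling window of recent values.

    A plain z-score rule, used only as an illustrative stand-in for the models
    a real predictive-maintenance system would run on the device.
    """

    def __init__(self, window: int = 10, threshold: float = 3.0) -> None:
        self.history: deque = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold

    def check(self, value: float) -> bool:
        """Return True if `value` looks anomalous, then add it to the history."""
        anomalous = False
        recent = list(self.history)
        if len(recent) >= 2:  # stdev needs at least two points
            mean = statistics.fmean(recent)
            stdev = statistics.stdev(recent)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        self.history.append(value)
        return anomalous


# Hypothetical vibration readings (mm/s): steady operation, then a sudden spike.
readings = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8, 2.1, 2.0, 1.9, 2.1, 9.5]
detector = EdgeAnomalyDetector(window=10, threshold=3.0)
alerts = [i for i, r in enumerate(readings) if detector.check(r)]
print(alerts)  # [10]
```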

Cloud Computing is better suited for tasks that require large-scale data storage, complex analytics, and resource-intensive applications. A Microsoft analysis finds that 40% of enterprises have migrated the majority of their IT to the cloud and use it for data analytics, artificial intelligence workloads, and storage. Cloud computing also powers huge data lakes where enterprises can store big data and run analytics at scale.

Challenges in Edge and Cloud Computing

Edge Computing and Cloud Computing each bring special benefits as well as special challenges, which enterprises need to address to exploit their full potential.

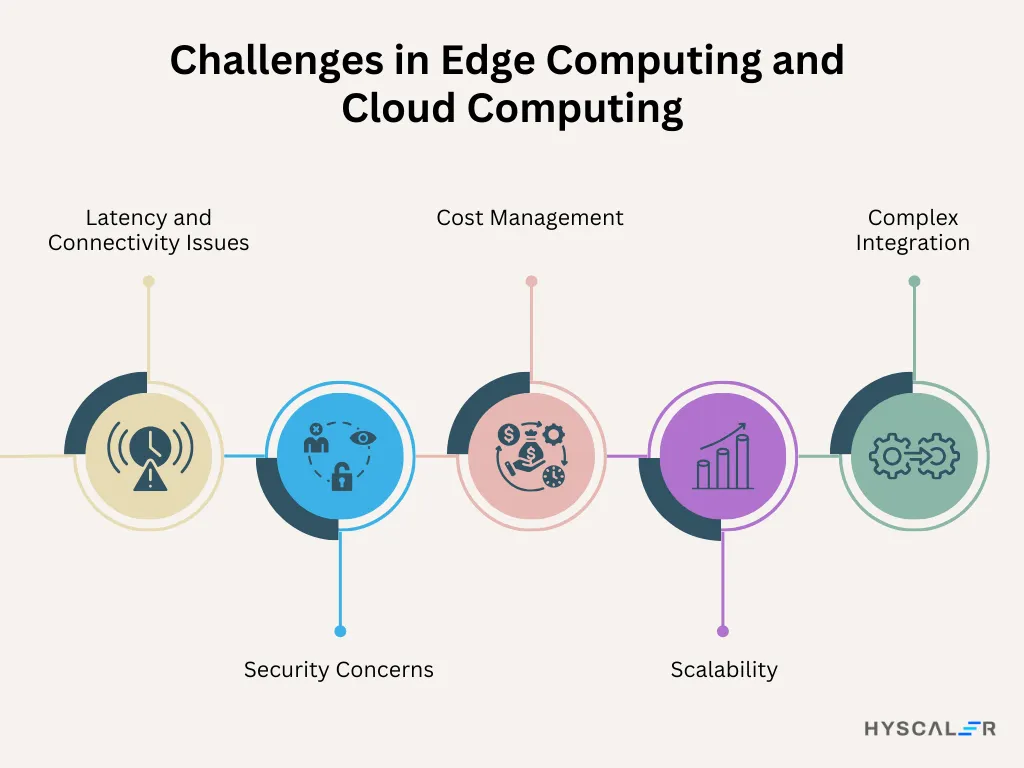

Latency and Connectivity Issues:

Edge computing relies on a decentralized network, which can be a problem at sites with weak infrastructure. Cloud computing, for its part, can suffer from latency during heavy data transfers.

Security Concerns:

Managing many devices in an edge computing environment increases the risk that one of them is compromised, which makes securing data transfers a top concern. Although cloud computing has sophisticated security mechanisms, its centralized nature still makes it a target for cyberattacks.

Cost Management:

Cloud computing typically involves predictable subscription fees, whereas edge computing requires substantial upfront capital outlays for hardware and infrastructure. Balancing these costs can be a challenge for organizations.
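The trade-off between upfront edge spending and recurring cloud fees can be framed as a break-even calculation. Every figure in this sketch is a hypothetical placeholder; it only shows the arithmetic: the edge option pays off once its cumulative monthly savings cover its upfront cost.

```python
import math
from typing import Optional

# Hypothetical placeholder figures in an arbitrary currency; substitute real quotes.
EDGE_UPFRONT = 50_000.0   # hardware and installation, paid once
EDGE_MONTHLY = 500.0      # power, maintenance, on-site support
CLOUD_MONTHLY = 2_500.0   # subscription and data-transfer fees


def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after `months` months."""
    return upfront + monthly * months


def break_even_month(edge_upfront: float, edge_monthly: float, cloud_monthly: float) -> Optional[int]:
    """First whole month at which edge has cost no more than cloud in total.

    Returns None if edge never catches up because its monthly cost is not lower.
    """
    monthly_saving = cloud_monthly - edge_monthly
    if monthly_saving <= 0:
        return None
    # edge_upfront + edge_monthly * m <= cloud_monthly * m  <=>  m >= edge_upfront / monthly_saving
    return math.ceil(edge_upfront / monthly_saving)


month = break_even_month(EDGE_UPFRONT, EDGE_MONTHLY, CLOUD_MONTHLY)
print(month)  # 25
```

With these placeholder numbers, the edge deployment saves 2,000 per month and catches up with the cloud option after 25 months. A real comparison would also account for hardware refresh cycles, staffing, and data-transfer pricing.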

Scalability:

Scalability is an advantage of cloud computing, but scaling efficiently across large networks, especially in IoT applications, can be a challenge for edge computing. Combining the two requires careful planning to avoid bottlenecks.

Complex Integration:

Hybrid solutions that integrate edge and cloud computing seamlessly are often necessary, but implementing them can be complex and resource-intensive.
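To show what a hybrid design might look like at its simplest, here is a hypothetical edge node that acts immediately on urgent events and batches every event for upload to the cloud for long-term analytics. The class names, event types, and batch size are invented for illustration; `flush` only records batches where a real system would call a cloud storage or messaging API.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    source: str  # e.g. a machine identifier
    kind: str    # event type, such as "temp" or "overheat"
    value: float


@dataclass
class HybridNode:
    """Hypothetical edge node: acts locally on urgent events, batches all events for the cloud."""

    urgent_kinds: set[str]
    batch_size: int = 3
    buffer: list[Event] = field(default_factory=list)
    uploaded_batches: list[list[Event]] = field(default_factory=list)
    local_actions: list[str] = field(default_factory=list)

    def handle(self, event: Event) -> None:
        if event.kind in self.urgent_kinds:
            # Time-critical path: decide on the device, with no network round trip.
            self.local_actions.append(f"stop {event.source}")
        # Every event is still forwarded to the cloud for long-term analytics.
        self.buffer.append(event)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Stand-in for a cloud upload; here it only records the batch."""
        if self.buffer:
            self.uploaded_batches.append(self.buffer)
            self.buffer = []


node = HybridNode(urgent_kinds={"overheat"})
for event in [
    Event("press-1", "temp", 60.0),
    Event("press-1", "overheat", 95.0),
    Event("press-2", "temp", 58.0),
    Event("press-2", "temp", 59.0),
]:
    node.handle(event)

print(node.local_actions)          # ['stop press-1']
print(len(node.uploaded_batches))  # 1
print(len(node.buffer))            # 1
```

Batching keeps the urgent path fast and local while still giving the cloud the full history; the batch size is the knob that trades upload frequency against how stale the cloud's view can get.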

Conclusion: Edge Computing vs Cloud Computing? Or Both?

The debate between Edge Computing vs Cloud Computing isn’t about choosing one over the other; it’s about finding the right balance. Edge computing is well suited for real-time decision-making and low latency, while cloud computing offers scalability and immense storage and processing power for big data operations. In many cases, the future lies in combining the two into hybrid solutions that deliver speed, efficiency, and scalability.

As technology evolves, industries keep developing new ways to integrate these two approaches. So, what’s your take? Are you team Edge or team Cloud, or are you ready to embrace both in a hybrid solution? The choice between Edge Computing vs Cloud Computing depends on your needs, but the future of technology is knocking at our door every day, and the possible applications are nearly limitless. Ready to explore how Edge Computing and Cloud Computing can transform your business? Dive into the future today!