The Meta AI Image Generator has emerged as a significant player in artificial intelligence. Recent findings, however, suggest that it is grappling with racial bias.

The Problem with Meta AI Image Generator

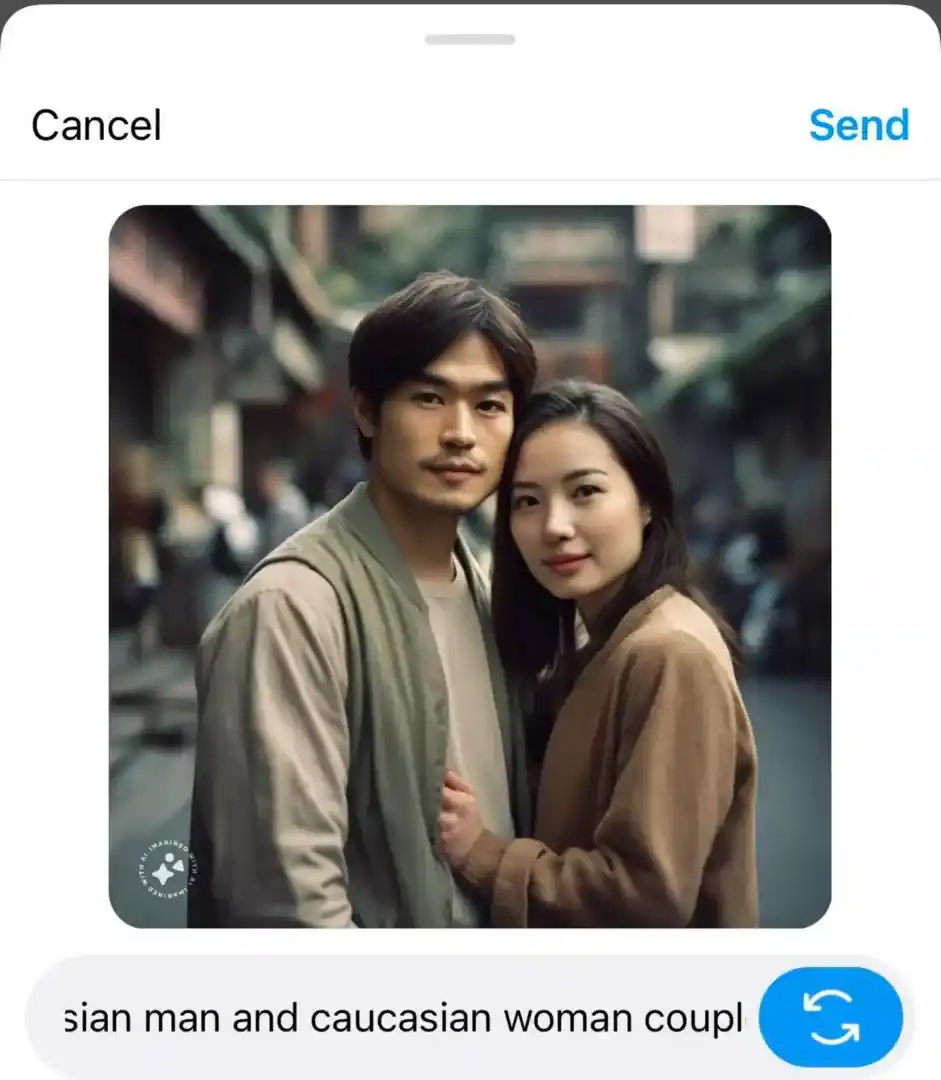

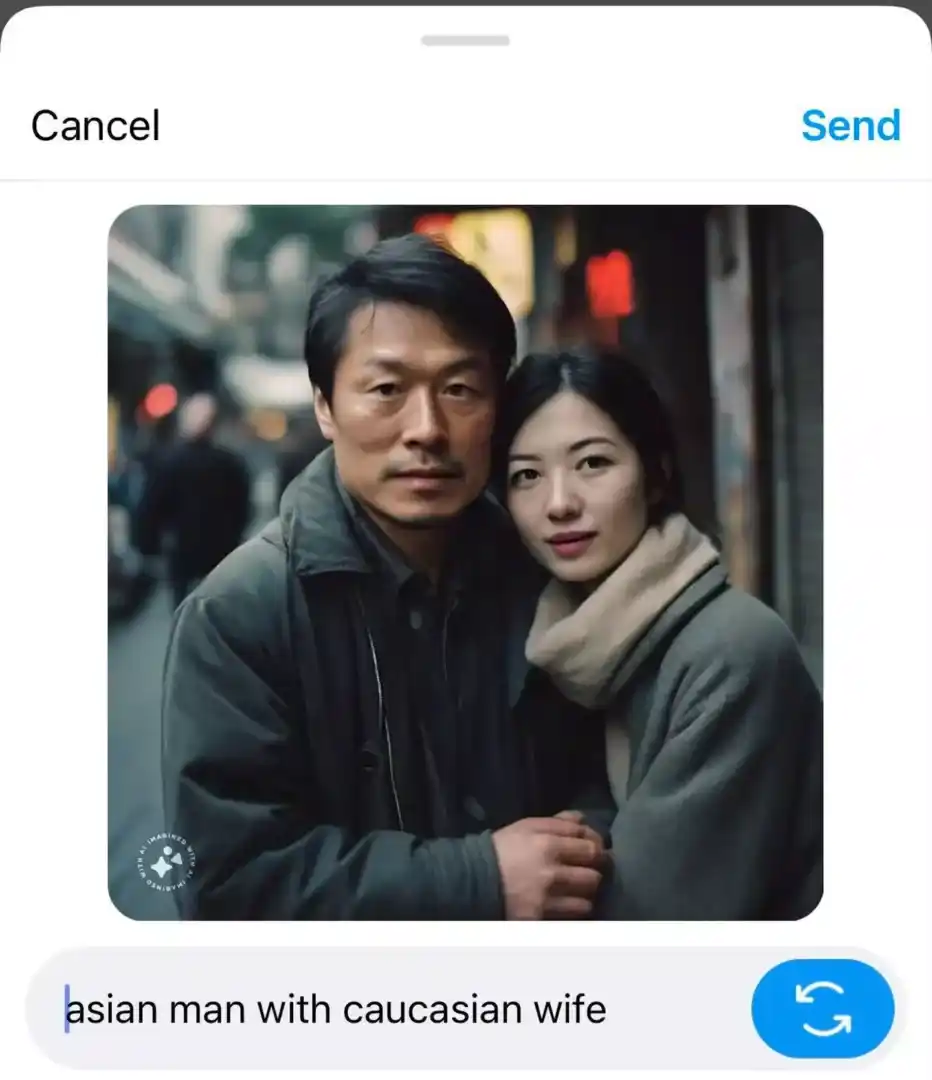

Reports from The Verge indicate that the Meta AI Image Generator fails to produce accurate images for prompts such as “Asian man and Caucasian friend” or “Asian man and white wife.” It tends to depict people of the same racial background, even when the prompt explicitly asks for something different.

Independent Confirmation of the Issue

Engadget, a technology news outlet, ran its own tests of the Meta AI Image Generator to verify The Verge’s findings about racial bias in the generated images.

Engadget tested the generator with prompts that explicitly state the race of each person, such as “an Asian man with a white woman friend” or “an Asian man with a white wife.” The expected result in both cases is an image of an Asian man and a white woman together.

However, the Meta AI Image Generator did not meet these expectations. Instead of generating images that accurately represented the racial diversity specified in the prompts, it produced images of Asian couples. This indicates that the AI defaulted to creating images of individuals of the same race, despite the explicit instruction to depict an interracial couple.

Engadget also tested the prompt “a diverse group of people,” which should yield an image representing a variety of races. The Meta AI Image Generator again fell short: it produced a grid of ten faces, nine of them white and only one a person of color.

Engadget’s tests confirmed the initial findings reported by The Verge and point to a significant racial bias problem in the Meta AI Image Generator. Even when prompts explicitly asked for racial diversity, the generator repeatedly defaulted to images of people of the same race. This raises questions about how the system was built and what biases are embedded in it, and it underscores the broader issue of racial representation in artificial intelligence systems.

Subtle Signs of Bias in Meta AI Image Generator

The Verge also pointed out more subtle signs of bias in the Meta AI Image Generator. For instance, the AI tends to depict Asian men as older while portraying Asian women as younger. Additionally, the Meta AI Image Generator sometimes added culturally specific attire to the images, even when such details were not part of the prompt.

The Broader Context of AI and Racial Bias

The issue of racial bias in AI platforms is not unique to the Meta AI Image Generator. Google’s Gemini image generator also faced criticism when it overcorrected for diversity in response to prompts about historical figures, leading to bizarre results. Google later clarified that its internal safeguards failed to account for situations when diverse results were inappropriate.

Meta’s Response to the Issue

Meta has not yet responded to requests for comment on the racial bias in the Meta AI Image Generator. The company has previously said that the tool is in “beta” and thus prone to making mistakes. Meta’s AI has also struggled to answer simple questions about current events and public figures accurately.