
AI is driving transformation across nearly every sector, and language models are at the forefront of that change.

As organizations and developers seek the most suitable AI solutions for their specific needs, understanding the distinction between Large Language Models (LLMs) and Small Language Models (SLMs) has become crucial.

This comprehensive guide examines both model types, their characteristics, and applications, and provides guidance on selecting between them.

Understanding Large Language Models (LLMs)

Definition

Large Language Models (LLMs) are sophisticated artificial intelligence systems containing billions (in some cases trillions) of parameters, trained on massive text datasets.

These models are designed to understand, generate, and manipulate human language with remarkable proficiency.

LLMs like GPT-4, Claude, PaLM, and LLaMA represent the current pinnacle of language AI technology, incorporating vast amounts of textual data from books, articles, websites, and other sources to develop a detailed understanding of context, language patterns, and meaning.

The “large” designation typically refers to models with billions of parameters, the adjustable weights that determine how the model processes and generates text.

Modern LLMs often contain anywhere from 7 billion to over 175 billion parameters, with some experimental models pushing into the trillions.
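To see what these parameter counts mean in practice, the memory needed just to hold a model's weights is roughly the parameter count times the bytes stored per parameter. The sketch below assumes 16-bit weights (2 bytes per parameter) by default and deliberately ignores activations, the KV cache, and runtime overhead, so real deployments need more memory than it reports.

```python
# Rough memory needed to store model weights only.
# Assumption: weight storage dominates; activations, KV cache,
# and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("7B model", 7e9), ("175B model", 175e9)]:
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB at fp16")
```

At 16-bit precision, a 7-billion-parameter model needs about 14 GB for its weights alone and a 175-billion-parameter model about 350 GB, which is why the largest models have to be spread across several GPUs.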

Key Characteristics of LLMs

LLMs are characterized by their massive scale, both in terms of model parameters and training data.

They exhibit emergent capabilities that smaller models generally lack, such as complex reasoning, creative writing, code generation across multiple programming languages, and the ability to maintain coherent conversations across extended interactions.

These broad capabilities can substantially increase productivity.

These models typically require substantial computational resources for both training and inference, often necessitating specialized hardware like high-end GPUs or TPUs.

The training process for LLMs involves processing enormous corpora of text data, often measured in terabytes, using distributed computing systems over weeks or months.
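To make the scale of training more concrete, a widely used rule of thumb from scaling-law research estimates training compute as about 6 × N × D floating-point operations, where N is the number of parameters and D the number of training tokens. The sketch below applies that approximation; the model size, token count, and sustained per-GPU throughput are illustrative assumptions, not figures for any particular model.

```python
# Back-of-the-envelope training cost using the common approximation
#   training FLOPs ~= 6 * N * D
# where N = parameters and D = training tokens.
# All input values below are illustrative assumptions.

SECONDS_PER_DAY = 86_400


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total floating-point operations for one training run."""
    return 6 * num_params * num_tokens


def gpu_days(total_flops: float, flops_per_gpu_per_sec: float) -> float:
    """Days of compute on one GPU at a given sustained throughput."""
    return total_flops / flops_per_gpu_per_sec / SECONDS_PER_DAY


if __name__ == "__main__":
    flops = training_flops(7e9, 1e12)  # assumed: 7B params, 1T tokens
    days = gpu_days(flops, 1e14)       # assumed: 100 TFLOP/s sustained per GPU
    print(f"{flops:.1e} FLOPs ~ {days:,.0f} GPU-days")
    print(f"~{days / 1000:.1f} days on an assumed 1,000-GPU cluster")
```

Under these assumptions the run needs about 4.2 × 10^22 FLOPs, roughly 4,900 GPU-days or about five days on 1,000 GPUs. Larger models trained on more tokens quickly push this into weeks or months, and real clusters rarely sustain their peak throughput.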

This extensive training enables LLMs to capture nuanced patterns in language, cultural references, domain-specific knowledge, and complex relationships between concepts.

Understanding Small Language Models (SLMs)

Definition

Small Language Models (SLMs) are more compact versions of language AI systems, typically containing millions to low billions of parameters.

These models are designed to provide language processing capabilities while maintaining significantly lower computational requirements than their larger counterparts.

SLMs like DistilBERT, ALBERT, MobileBERT, and various fine-tuned versions of base models represent efficient approaches to natural language processing.

The focus of SLMs is on optimization and efficiency rather than raw capability.

They achieve this through various techniques, including knowledge distillation, parameter sharing, architectural innovations, and targeted training approaches that maximize performance within constrained resource budgets.
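Knowledge distillation, the technique behind models such as DistilBERT, trains a small "student" model to match the softened output distribution of a larger "teacher". The sketch below computes the standard temperature-scaled distillation term (KL divergence between softened teacher and student outputs, scaled by T²) for a single example in plain Python. The logits and the temperature of 2.0 are made-up illustrative values, and in real training this term is usually combined with an ordinary loss on the true labels.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return (temperature ** 2) * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # illustrative logits over three classes
    student = [2.5, 1.5, 0.2]
    print(f"distillation loss: {distillation_loss(teacher, student):.4f}")
```

The loss is zero only when the student reproduces the teacher's softened distribution, so minimizing it pushes the small model toward the large model's behavior, including how it ranks the less likely answers.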

Key Characteristics of SLMs

SLMs prioritize efficiency and accessibility over maximum capability.

They are designed to run on standard hardware, including consumer-grade computers, mobile devices, and edge computing systems.

While they may not match the comprehensive capabilities of LLMs, SLMs excel in specific, well-defined tasks and can be highly effective when properly optimized for particular use cases.

The development philosophy behind SLMs emphasizes practical deployment considerations, including inference speed, memory usage, energy consumption, and the ability to operate in resource-constrained environments.

This makes them particularly attractive for real-world applications where computational resources are limited or cost is a primary concern.

Detailed Comparison: LLM vs SLM

Performance and Capabilities

Large Language Models deliver strong performance across a broad range of demanding language tasks.

They excel at tasks requiring deep contextual understanding, creative generation, complex reasoning, and handling ambiguous or novel situations.

LLMs can sustain sophisticated conversations, write in various styles and formats, solve complex problems, and demonstrate what appears to be a genuine understanding of subtle concepts.

Small Language Models, while more limited in scope, can achieve remarkable performance in specific domains or tasks for which they have been optimized.

When properly fine-tuned, SLMs can match or even exceed LLM performance in narrow applications while using a fraction of the computational resources.

Resource Requirements

The gap in resource requirements between LLMs and SLMs is substantial.

LLMs typically require high-end hardware configurations, including multiple GPUs with significant VRAM, large system memory, and powerful processors.

Training LLMs demands even more resources, often requiring distributed computing clusters and major financial investment.

SLMs are designed to be resource-efficient, capable of running on standard consumer hardware, mobile devices, or simple cloud instances.

They use significantly less memory, processing power, and energy, making them accessible to a broader range of users and applications.

Deployment and Accessibility

LLMs often require cloud-based deployment or specialized on-premises infrastructure, which brings substantial requirements for data-center space, power, and cooling.

Many LLMs are accessed through APIs provided by major tech companies, which can introduce latency, ongoing costs, and dependency on external services.

However, API models also provide access to cutting-edge capabilities without requiring massive infrastructure investment.

SLMs can be deployed locally, on mobile devices, or in edge computing environments.

This local deployment offers advantages in terms of privacy, latency, offline capability, and long-term cost control, making it attractive for applications where data sensitivity or real-time processing is crucial.

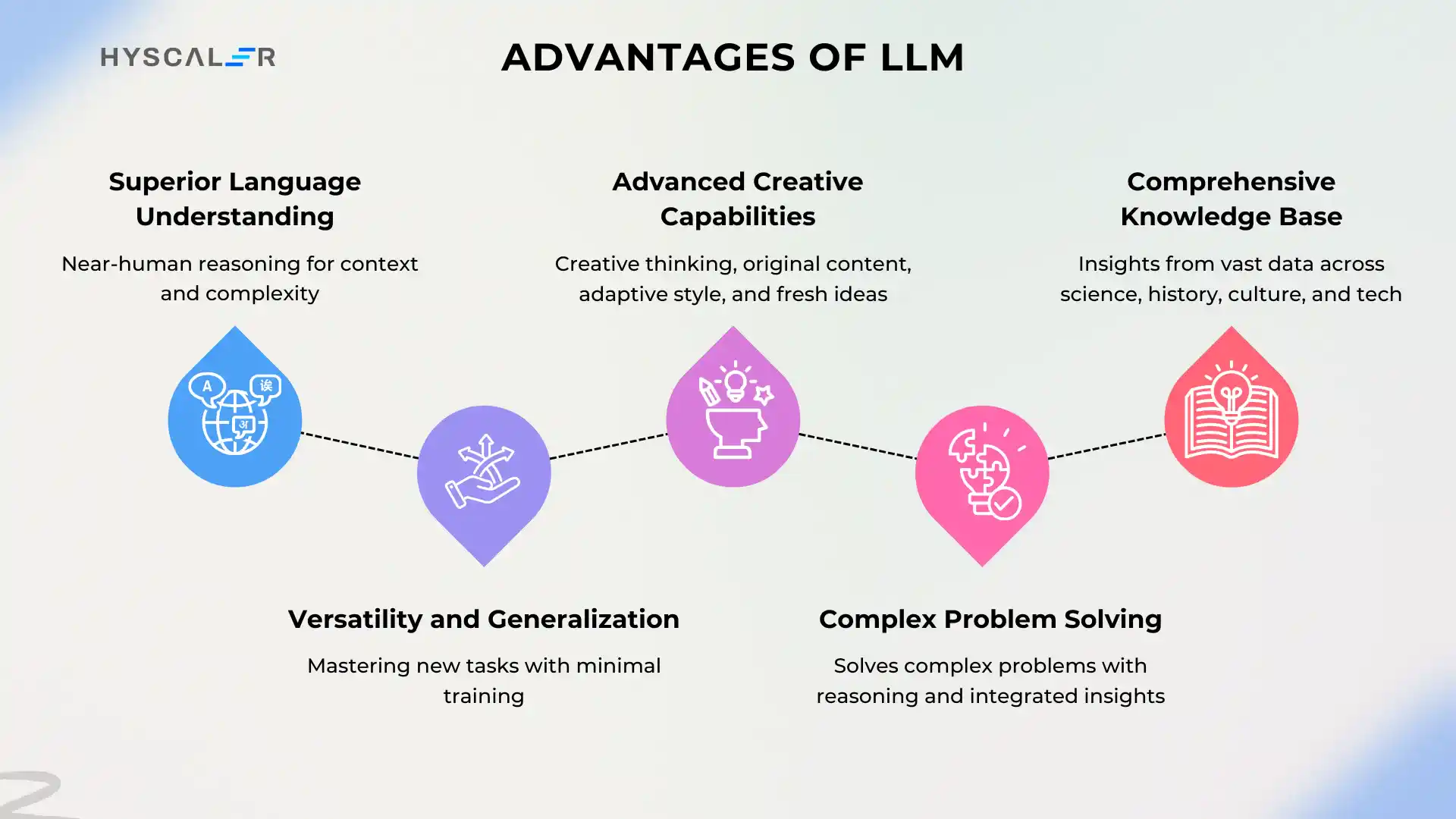

Advantages of Large Language Models (LLMs)

Superior Language Understanding

LLMs demonstrate exceptional comprehension of context, nuance, and implied meaning in text.

They can understand complex instructions, maintain coherent conversations across extended interactions, and demonstrate sophisticated reasoning capabilities that approach human-like understanding in many domains.

Versatility and Generalization

One significant advantage of LLMs is their ability to perform well across many tasks without task-specific training.

Their zero-shot and few-shot learning capability, handling a new task from instructions alone or from a few examples included in the prompt, makes them versatile tools that can adapt to new challenges with little or no additional training or fine-tuning.
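In practice, "few-shot" means placing a handful of worked examples directly in the prompt; the model's weights are not changed. The hypothetical helper below only builds such a prompt string for a sentiment-labeling task. The function name, the example reviews, and the "Review:/Sentiment:" layout are illustrative assumptions, and actually sending the prompt to a model depends on whichever API or library you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Hypothetical helper: format an instruction, labeled examples, and a new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")  # the model is expected to continue from here
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each review as positive or negative.",
        [("The battery lasts all day.", "positive"),
         ("It stopped working after a week.", "negative")],
        "Setup was quick and painless.",
    )
    print(prompt)
```

Passing an empty example list turns the same prompt into a zero-shot request, where the model has only the instruction to go on.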

Advanced Creative Capabilities

LLMs excel at creative tasks, including writing, storytelling, poetry, and other content generation.

They can produce original content in various styles, adapt their voice and tone to match specific requirements, and generate novel ideas.

Complex Problem Solving

These models can tackle sophisticated problems requiring multi-step reasoning, integration of information from multiple sources, and the ability to consider various perspectives and approaches to reach solutions.

Comprehensive Knowledge Base

LLMs are trained on vast datasets covering human knowledge across numerous domains, making them capable of providing information and insights across science, history, culture, technology, and countless other fields, including ones the user may know little about.

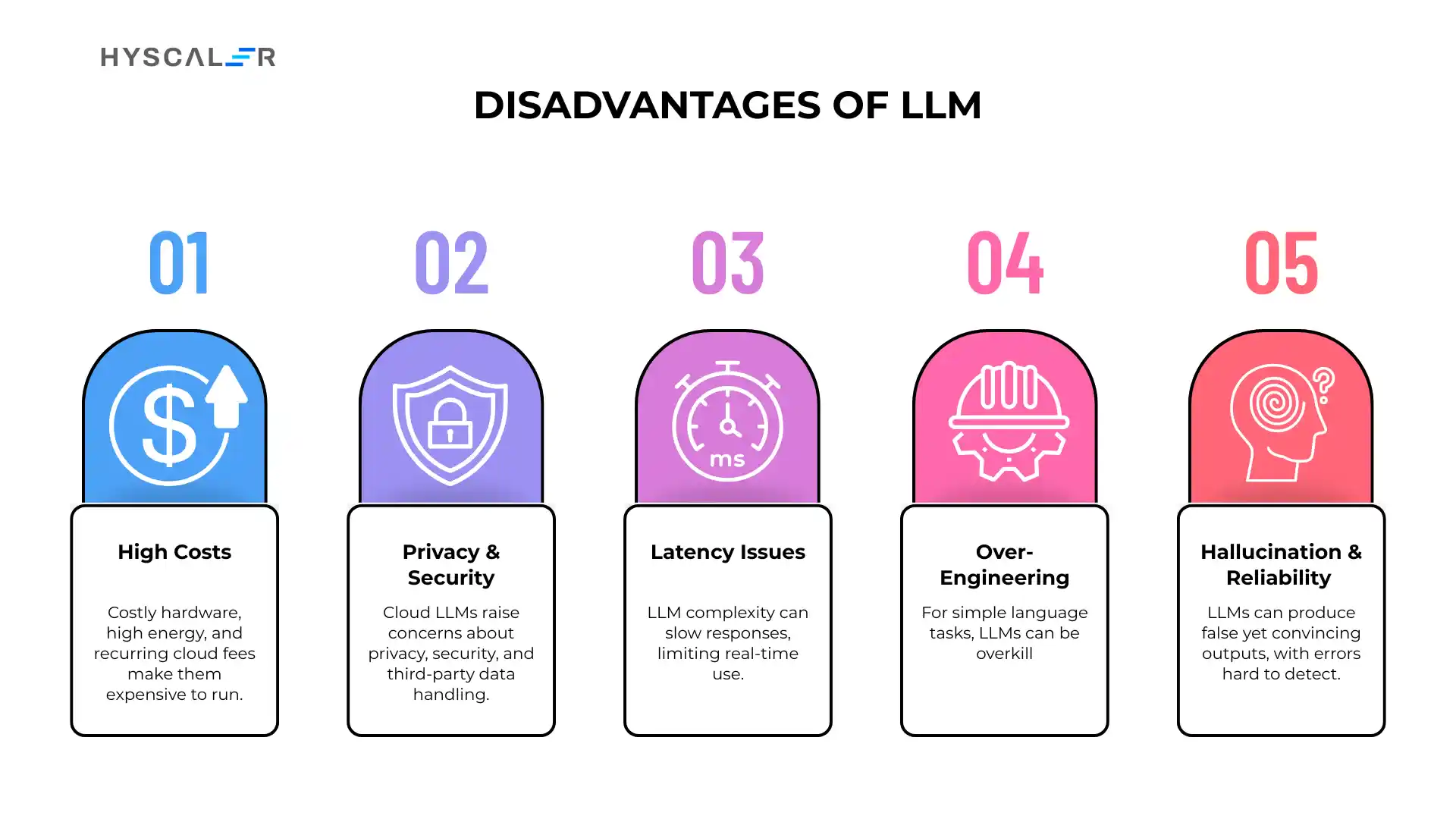

Disadvantages of Large Language Models (LLMs)

High Computational Costs

The resource requirements for LLMs translate directly into high operational costs.

Running these models requires expensive hardware, significant energy consumption, and often ongoing subscription fees for cloud-based services.

Privacy and Security Concerns

Many LLMs operate through cloud services, which raises concerns about data privacy and security: sensitive information must be sent to a third party for processing, where it could be exposed in a data leak.

Latency Issues

The complexity of LLMs can result in slower response times, particularly for complex queries or when dealing with high user loads.

This latency can be problematic for real-time applications or for time-sensitive operations.

Over-Engineering for Simple Tasks

For straightforward language processing tasks, LLMs may represent unnecessary complexity and resource usage, similar to using a supercomputer for basic calculations.

Hallucination and Reliability Issues

LLMs can sometimes generate convincing but incorrect information, and their complexity can make it difficult to predict or control their behavior in all situations.

If you do not already know the topic well, it can be hard to tell which parts of a response were hallucinated.

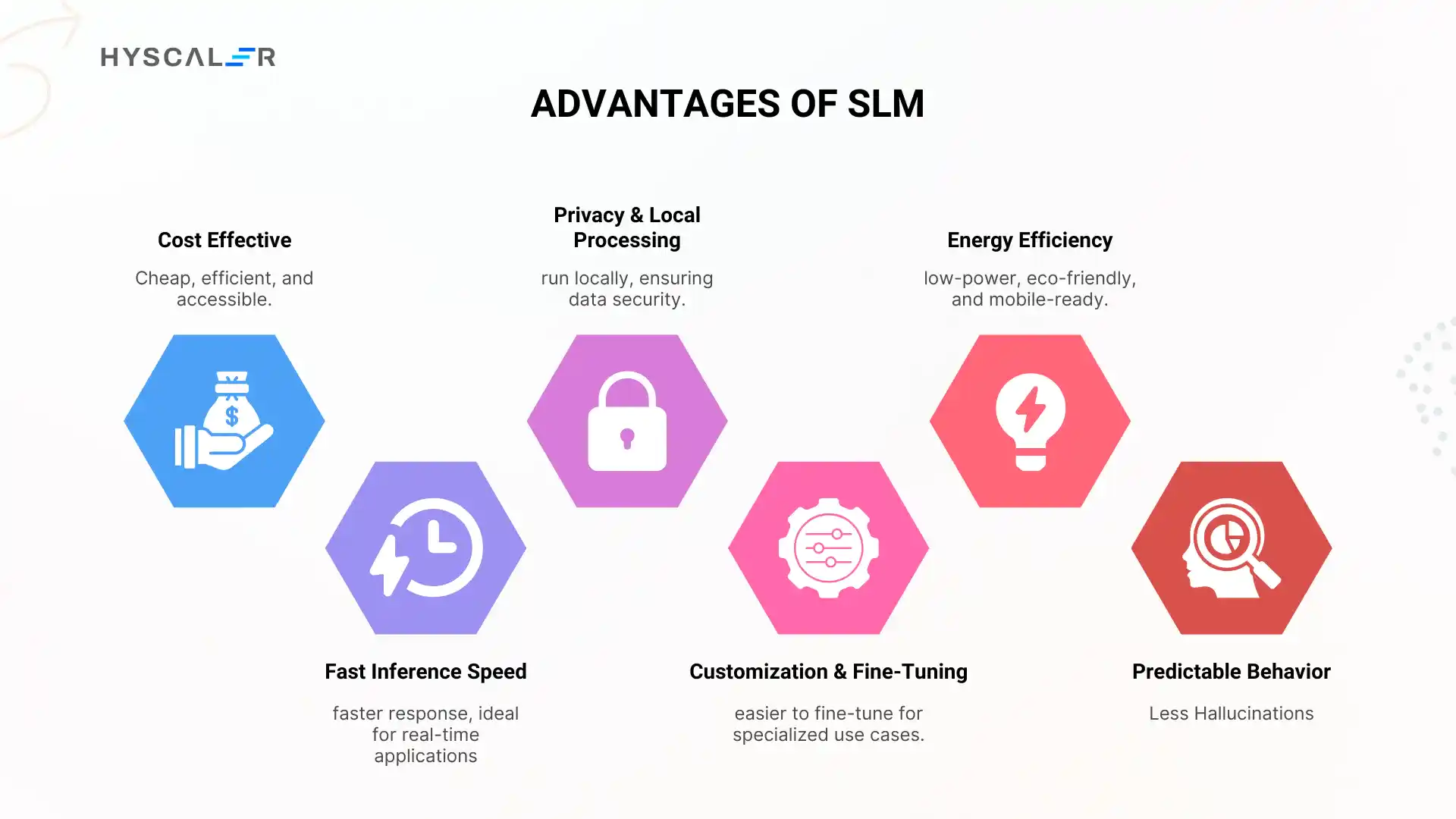

Advantages of Small Language Models (SLMs)

Cost Effectiveness

SLMs offer significant cost advantages through lower hardware requirements, reduced energy consumption, and the ability to run locally without ongoing service fees.

This makes them accessible to smaller organizations and individual developers with limited budgets.

Fast Inference Speed

The reduced complexity of SLMs typically results in faster response times, making them suitable for applications requiring real-time or near-real-time processing.
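One reason smaller models respond faster: when a model generates text one token at a time for a single user, each new token typically requires reading all of the model's weights from memory, so memory bandwidth often sets the speed limit. The sketch below turns that rule of thumb into a rough upper bound on tokens per second. The 1,000 GB/s bandwidth figure and the model sizes are assumptions for illustration, and real single-stream speeds are lower because of compute, attention over the KV cache, and software overhead.

```python
# Rough upper bound on single-stream decoding speed, assuming generation is
# memory-bandwidth bound: every new token reads all weights once.
# Bandwidth and model sizes are illustrative assumptions.


def max_tokens_per_second(weight_bytes: float, bandwidth_bytes_per_sec: float) -> float:
    """Upper-bound tokens/s when each token requires one full pass over the weights."""
    return bandwidth_bytes_per_sec / weight_bytes


if __name__ == "__main__":
    bandwidth = 1_000e9  # assumed: 1,000 GB/s of memory bandwidth
    models = {
        "1B params @ fp16 (2 GB)": 2e9,
        "7B params @ fp16 (14 GB)": 14e9,
    }
    for name, size in models.items():
        print(f"{name}: <= {max_tokens_per_second(size, bandwidth):.0f} tokens/s")
```

Under these assumptions a 1-billion-parameter model could generate up to about 500 tokens per second, against roughly 71 for a 7-billion-parameter model on the same hardware: the ratio simply follows the ratio of model sizes.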

Privacy and Local Processing

SLMs can operate entirely locally, ensuring that sensitive data never leaves the user’s control.

This is particularly important for applications dealing with confidential information or in regulated industries, where data leaks can have serious legal and financial consequences.

Customization and Fine-Tuning

The smaller size of SLMs makes them more practical to fine-tune for specific applications, allowing organizations to create highly specialized models tailored to their particular needs and domains.

Energy Efficiency

SLMs consume significantly less power, making them more environmentally friendly and practical for mobile or battery-powered applications.

Predictable Behavior

The reduced complexity of SLMs often makes their behavior more predictable and easier to understand, which can be important for applications requiring consistent and reliable outputs, although SLMs can still hallucinate.

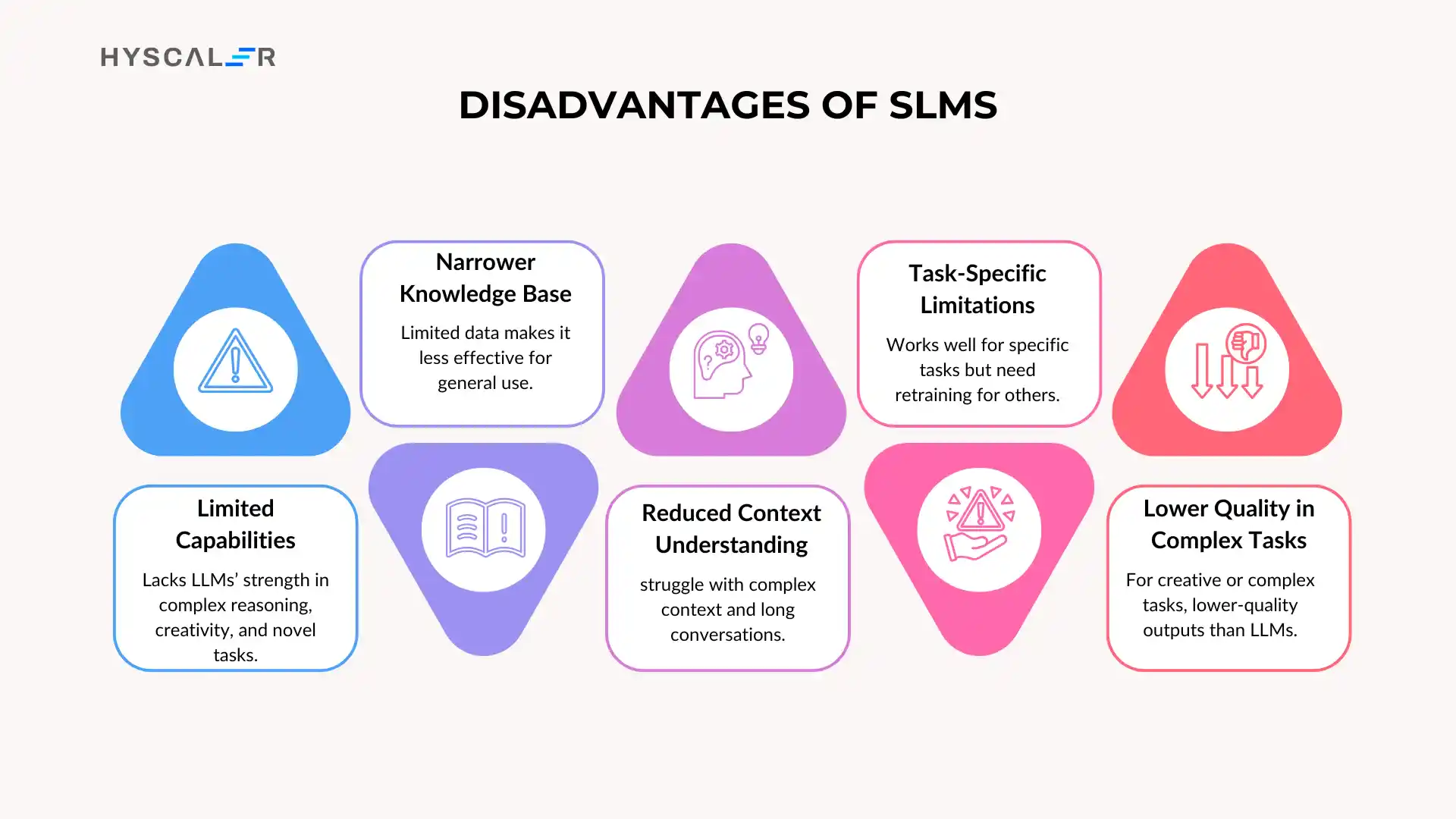

Disadvantages of Small Language Models (SLMs)

Limited Capabilities

SLMs cannot match the comprehensive capabilities of LLMs, particularly for complex reasoning tasks, creative generation, or handling novel situations that require extensive background knowledge.

Narrower Knowledge Base

The reduced training data and model size result in more limited knowledge across domains, potentially making SLMs less effective for general-purpose applications.

Reduced Context Understanding

SLMs may find it challenging to understand complex contexts, engage in long discussions, or perform tasks that require deep subject knowledge.

Task-Specific Limitations

While SLMs can be highly effective for specific tasks, they may require separate models or extensive retraining to handle different types of language processing challenges.

Lower Quality in Complex Tasks

For sophisticated language generation, creative writing, or complex problem-solving, SLMs typically produce lower-quality outputs compared to their larger counterparts.

Use Cases and Applications

LLM Applications

Large Language Models excel in applications requiring sophisticated language understanding and generation.

Content creation platforms leverage LLMs for article writing, marketing copy, and creative content generation.

Customer service systems use LLMs to handle complex inquiries that require understanding context and providing detailed, helpful responses.

Educational applications benefit from LLMs’ ability to explain complex concepts, provide tutoring across multiple subjects, and adapt their communication style to different learning levels, from children to adults.

Software development tools powered by LLMs can generate code, debug programs, and provide technical documentation across various programming languages and frameworks.

Research and analysis applications utilize LLMs for literature reviews, data interpretation, and turning insights from complex datasets into clear, readable summaries.

Legal and professional services employ LLMs for document analysis, contract review, and generating professional communications.

SLM Applications

Small Language Models find their strength in focused, domain-specific applications where efficiency and speed are paramount.

Mobile applications often integrate SLMs for real-time text processing, translation, and voice recognition without requiring internet connectivity.

Edge computing devices use SLMs for local language processing in IoT applications, smart home systems, and industrial automation, where low latency and offline capability are required.

Specialized chatbots designed for specific customer service scenarios can effectively use SLMs to handle routine inquiries quickly and efficiently.

Email clients and productivity applications employ SLMs for spam detection, sentiment analysis, and automated categorization.

Healthcare applications use specialized SLMs for medical text processing while maintaining strict privacy requirements through local processing.

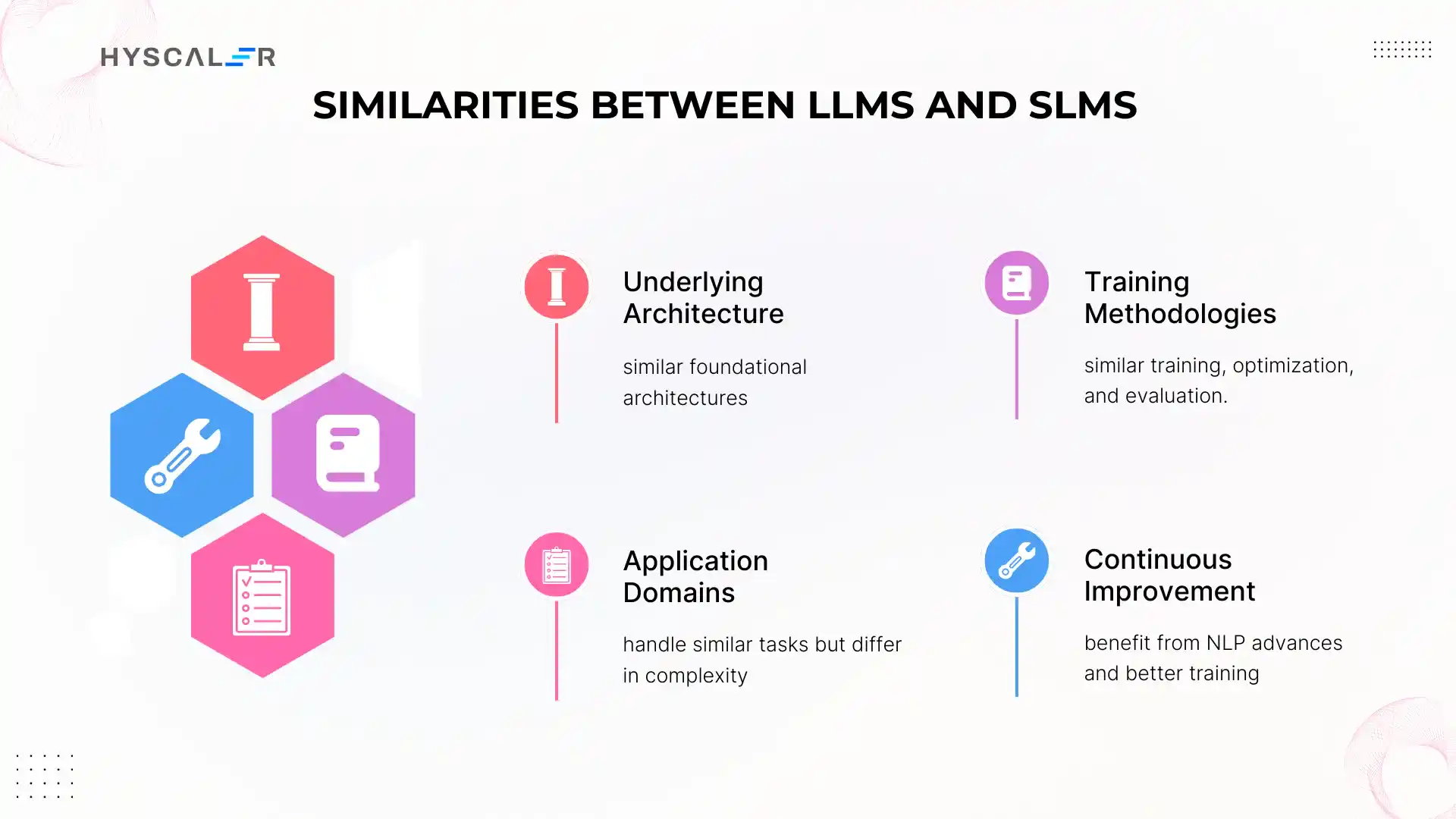

Similarities Between LLMs and SLMs

Underlying Architecture

Both LLMs and SLMs typically share similar foundational architectures, most commonly based on the transformer.

They rely on attention mechanisms and similar mathematical principles for processing and generating language, arranged in encoder-only (e.g., DistilBERT), decoder-only (e.g., LLaMA), or encoder-decoder configurations.
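The attention mechanism shared by both model types can be written compactly as softmax(Q·K^T / sqrt(d))·V, where each query row is compared with every key and the resulting weights mix the value rows. Below is a minimal pure-Python sketch of single-head scaled dot-product attention with no masking or batching; the tiny Q, K, and V matrices are arbitrary illustrative values. Real models run the same computation on large tensors with optimized libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(k[0])  # key/query dimension
    output = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d)
                  for k_row in k]
        weights = softmax(scores)  # attention weights sum to 1
        # Weighted sum of the value vectors.
        output.append([sum(w * v_row[j] for w, v_row in zip(weights, v))
                       for j in range(len(v[0]))])
    return output


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[10.0, 0.0], [0.0, 10.0]]
    for row in attention(q, k, v):
        print([round(x, 3) for x in row])
```

Each query attends most strongly to the key it matches, so the first output row leans toward the first value vector and the second toward the second; whether the model is an SLM or an LLM changes the sizes and number of these layers, not the operation itself.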

Training Methodologies

Both types of models are trained in broadly similar ways: they learn from large text collections through self-supervised objectives such as predicting the next or a masked token, are refined with similar fine-tuning methods, and are assessed with comparable evaluation techniques.

Application Domains

Both LLMs and SLMs are applied to similar categories of language processing tasks, including text generation, classification, translation, and question-answering, though they may differ in the complexity of tasks they can effectively handle.

Continuous Improvement

Both model types benefit from ongoing research in natural language processing, improvements in training techniques, and advances in model architecture that enhance performance and efficiency.

Choosing Between LLMs and SLMs

Assessment Criteria

The decision between LLMs and SLMs should be based on a careful evaluation of several key factors.

Task complexity is perhaps the most important consideration.

Applications requiring sophisticated reasoning, creative generation, or handling of novel situations typically benefit from LLMs, while well-defined, specific tasks may be easily handled by SLMs.

Resource availability plays a crucial role in the decision process.

Organizations with limited computational resources, strict budget constraints, or requirements for local processing may find SLMs more practical despite their limitations.

Performance requirements must be carefully evaluated.

Applications requiring the highest-quality outputs and maximum capability should consider LLMs, while those prioritizing speed, efficiency, and cost-effectiveness may be better served by SLMs.

Privacy and security requirements can strongly influence the choice.

Applications handling sensitive data or operating in regulated industries may require the local processing capabilities that SLMs can provide.

Decision Framework

A structured approach to choosing between LLMs and SLMs involves evaluating the specific requirements of your application, available resources, and long-term strategic goals.

Consider starting with a clear definition of success metrics for your application, including quality thresholds, performance requirements, and cost constraints.

Prototype and test both approaches where possible, as the theoretical advantages of either model type may not translate directly to your specific use case.
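One way to keep that comparison honest is to write the success metrics down as explicit thresholds and check every prototype against them. The sketch below is a hypothetical example of this: the `Candidate` and `Requirements` classes, the choice of accuracy, 95th-percentile latency, and cost per 1,000 requests as metrics, and all the numbers are assumptions for illustration, not benchmark results.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    accuracy: float        # measured on your own evaluation set (0-1)
    p95_latency_ms: float  # 95th-percentile response time
    cost_per_1k: float     # cost per 1,000 requests


@dataclass
class Requirements:
    min_accuracy: float
    max_p95_latency_ms: float
    max_cost_per_1k: float


def meets(c: Candidate, r: Requirements) -> bool:
    """True if the candidate satisfies every threshold."""
    return (c.accuracy >= r.min_accuracy
            and c.p95_latency_ms <= r.max_p95_latency_ms
            and c.cost_per_1k <= r.max_cost_per_1k)


def pick_cheapest(candidates: List[Candidate], r: Requirements) -> Optional[Candidate]:
    """Return the cheapest candidate that meets all requirements, or None."""
    passing = [c for c in candidates if meets(c, r)]
    return min(passing, key=lambda c: c.cost_per_1k) if passing else None


if __name__ == "__main__":
    # Placeholder measurements; substitute results from your own prototypes.
    candidates = [
        Candidate("fine-tuned SLM", accuracy=0.91, p95_latency_ms=120, cost_per_1k=0.05),
        Candidate("hosted LLM", accuracy=0.95, p95_latency_ms=900, cost_per_1k=2.00),
    ]
    reqs = Requirements(min_accuracy=0.90, max_p95_latency_ms=500, max_cost_per_1k=1.00)
    choice = pick_cheapest(candidates, reqs)
    print(choice.name if choice else "no candidate meets the requirements")
```

Treating the thresholds as hard constraints and then minimizing cost is only one possible policy; a team that values quality above all might instead pick the most accurate candidate that fits the budget.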

Consider hybrid approaches where appropriate, such as using SLMs for initial processing and escalating only the complex cases that need more sophisticated handling to LLMs. This combines the efficiency of SLMs with the capability of LLMs.
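A hybrid setup of this kind can be sketched as a simple router: try the small model first and escalate to the large model only when the small model's confidence falls below a threshold. Everything here is hypothetical: `small_model` and `large_model` stand in for whatever local model and LLM API you actually use, the confidence score is assumed to be something the small model can return, and the 0.8 threshold is an arbitrary value you would tune against your own success metrics.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical model interfaces: the small model returns (answer, confidence);
# the large model returns an answer. Replace these with real calls.
SmallModel = Callable[[str], Tuple[str, float]]
LargeModel = Callable[[str], str]


@dataclass
class RoutedAnswer:
    text: str
    served_by: str  # "slm" or "llm"


def route(query: str, small_model: SmallModel, large_model: LargeModel,
          threshold: float = 0.8) -> RoutedAnswer:
    """Answer with the SLM when it is confident enough, otherwise escalate to the LLM."""
    answer, confidence = small_model(query)
    if confidence >= threshold:
        return RoutedAnswer(answer, "slm")
    return RoutedAnswer(large_model(query), "llm")


if __name__ == "__main__":
    # Stub models for demonstration only.
    def fake_slm(q: str) -> Tuple[str, float]:
        return ("Your order ships in 2 days.", 0.95) if "order" in q else ("", 0.3)

    def fake_llm(q: str) -> str:
        return f"[detailed LLM answer to: {q}]"

    print(route("Where is my order?", fake_slm, fake_llm))
    print(route("Compare your warranty terms with EU consumer law.", fake_slm, fake_llm))
```

Logging which requests get escalated can show whether the threshold needs adjusting, or whether fine-tuning the SLM would let it handle more of the traffic on its own.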

Future Trends and Developments

Convergence and Innovation

The field of language models is evolving rapidly, with ongoing research aimed at bridging the gap between LLMs and SLMs.

Techniques like knowledge distillation, model compression, and architectural innovations are making it possible to achieve LLM-like capabilities in more compact models.
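Quantization is one common form of model compression: weights stored as 32-bit floats are mapped to 8-bit integers plus a scale factor, cutting weight memory by roughly 4×. The sketch below shows simple symmetric per-tensor int8 quantization in plain Python. Production toolkits use more refined schemes (per-channel scales, calibration data, 4-bit formats), so treat this as an illustration of the idea rather than how any particular library does it.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.12, -0.50, 0.33, 0.01, -0.27]  # illustrative values
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_error = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"scale={scale:.5f}, max reconstruction error={max_error:.5f}")
```

Each restored weight is off by at most half a quantization step, which is why many models lose surprisingly little accuracy at 8 bits while becoming small enough to run on more modest hardware.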

Emerging approaches such as mixture of experts models, sparse activation techniques, and dynamic model sizing are creating new categories of models that combine the efficiency advantages of SLMs with the capability advantages of LLMs.
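Mixture-of-experts models illustrate how sparse activation works: a gating network scores a set of expert sub-networks for each input, and only the top-k experts actually run, so compute per token stays well below what the total parameter count suggests. The sketch below shows only the top-k routing step, with softmax-normalized gate weights over the selected experts. The gate scores, the four experts, and k = 2 are illustrative assumptions, and the experts are stand-in functions rather than real neural network layers.

```python
import math
from typing import Callable, List, Tuple

Expert = Callable[[float], float]


def top_k_gate(scores: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights with softmax."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    exps = [math.exp(scores[i]) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts: List[Expert], gate_scores: List[float],
                k: int = 2) -> float:
    """Run only the selected experts and combine their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in top_k_gate(gate_scores, k))


if __name__ == "__main__":
    # Four stand-in "experts"; in a real model each would be a feed-forward layer.
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x ** 2, lambda x: -x]
    gate_scores = [0.1, 2.0, 1.5, -1.0]  # would come from a learned gating network
    print(top_k_gate(gate_scores, k=2))  # only experts 1 and 2 are used
    print(moe_forward(3.0, experts, gate_scores))
```

Only two of the four experts are evaluated here; in a full model the unused experts' parameters still take up memory but cost no computation for that token.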

Democratization of AI

The development of more efficient models and improved tooling is making advanced language processing capabilities accessible to a broader range of users and applications.

This trend is likely to continue, with SLMs playing a crucial role in bringing AI capabilities to edge devices, mobile applications, and resource-constrained environments.

Conclusion

The choice between Large Language Models and Small Language Models ultimately depends on the specific requirements, constraints, and goals of your application.

LLMs offer unparalleled capability and versatility but require significant resources and may introduce complexity and cost that are unnecessary for simpler applications.

SLMs provide efficient, practical solutions for focused applications while maintaining accessibility and cost-effectiveness.

As the field continues to evolve, the distinction between LLMs and SLMs may become less rigid, with new approaches bridging the gap between efficiency and capability.

The key to success lies in understanding your specific needs, evaluating both options objectively, and choosing the approach that best aligns with your technical requirements, resource constraints, and strategic objectives.

Rather than viewing LLMs and SLMs as competing technologies, consider them as complementary tools in the broader AI toolkit, each with distinct advantages that make them suitable for different aspects of the language processing challenge.

The future likely holds a diverse ecosystem of models ranging from highly specialized SLMs to general-purpose LLMs, with the most successful implementations leveraging the strengths of each approach where they provide the greatest value.


FAQs

Q1. What is SLM in AI?

SLMs are compact language models that understand and generate natural language. They are typically trained or fine-tuned for specific tasks and require far fewer resources than LLMs.

Q2. Are SLMs faster than LLMs?

Generally, yes. With far fewer parameters, SLMs need less computation per response, so they typically answer faster, especially on the specific queries they are trained for.

Q3. Is SLM more appropriate than LLM?

In some cases, yes: an SLM is often the better choice for less complex tasks, when resources are limited, or when speed and privacy are essential.

Q4. Is ChatGPT a large language model?

Yes. ChatGPT is built on OpenAI's GPT family of large language models, which have very large parameter counts and need substantial energy and infrastructure to train and run.

Q5. What is the difference between LLM and ML?

ML is the broad field in AI where systems learn from data and improve over time without being explicitly programmed.

An LLM is one tool within that field: a model specialized for language tasks, built using ML techniques.

Q6. What is a reasoning model?

A reasoning model is essentially a fine-tuned LLM designed for complex questions. These models break down intricate questions into smaller parts before providing their answers.