
Google has recently launched Gemini Pro, a powerful and flexible AI model that can perform a wide range of tasks such as summarizing text, answering questions, and generating content. Gemini Pro is one of three sizes of Gemini (Ultra, Pro, and Nano), which Google calls its “largest and most capable AI model.”

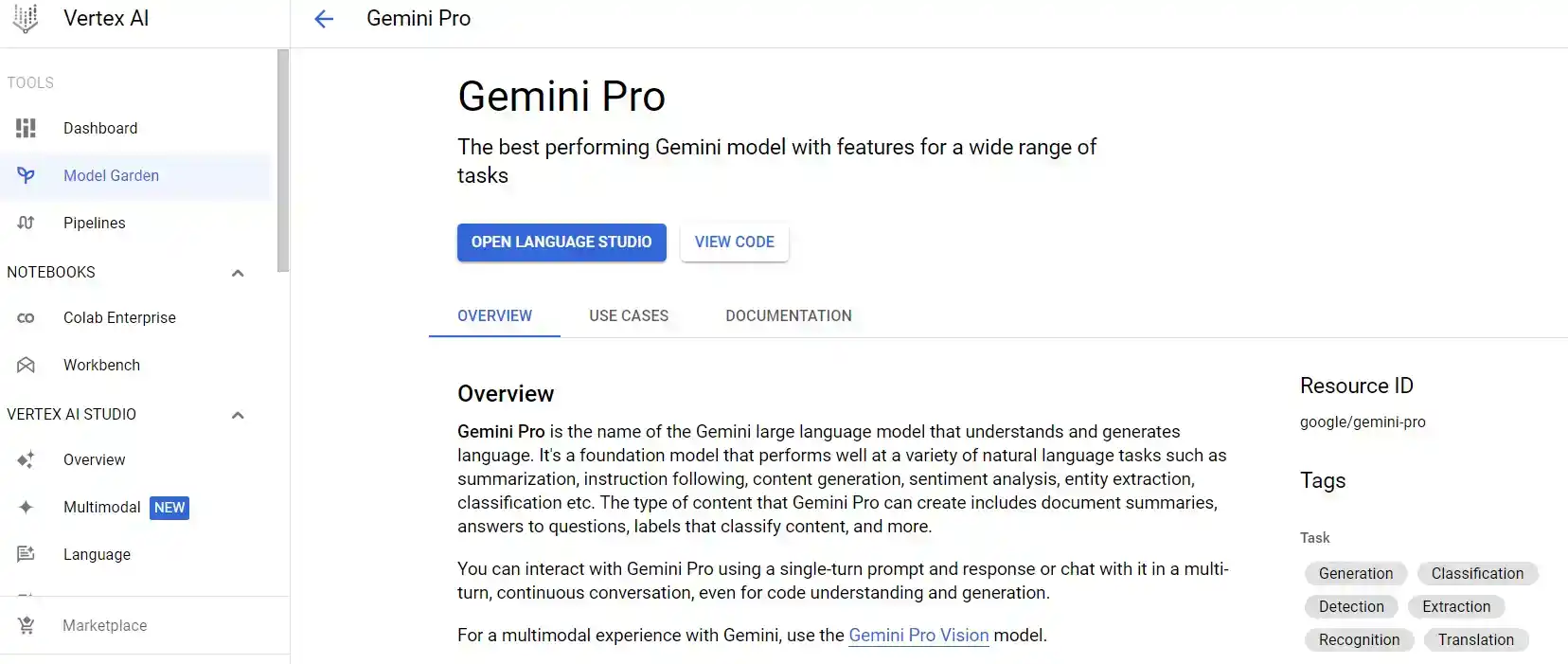

Google Gemini Pro is now accessible on Vertex AI, Google’s “end-to-end AI platform” that offers easy-to-use tools, fully managed infrastructure, and built-in privacy and safety features.

What can Google Gemini Pro do?

Gemini Pro is a scalable and general-purpose AI model that can handle various types of information across text, code, images, and video. Some of the use cases for Gemini Pro are:

- Summarization: Gemini Pro can create a shorter version of a document that retains the relevant information from the original text. For example, it can summarize a chapter from a textbook or create a product description from a longer text.

- Question answering: Gemini Pro can answer questions about a given text. For example, it can automate the creation of a Frequently Asked Questions (FAQ) document from knowledge base content.

- Classification: Gemini Pro can assign a label describing the provided text. For example, it can apply labels that indicate whether a block of text is grammatically correct or not.

- Sentiment analysis: Gemini Pro can identify the sentiment of a text and express it as a label. For example, the label might be positive or negative, or an emotion such as anger or happiness.

- Entity extraction: Gemini Pro can extract a piece of information from text. For example, it can extract the name of a movie from the text of an article.

- Content creation: Gemini Pro can generate text from a set of requirements and background information. For example, it can draft an email for a given context in a specified tone.
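In practice, most of these tasks come down to how the prompt is phrased. The sketch below shows hypothetical prompt templates for a few of the use cases above, plus a helper that normalizes a one-word label reply. The names `PROMPTS`, `build_prompt`, and `parse_label` are illustrative assumptions, not part of any Google API, and the code does not call the model itself.

```python
from __future__ import annotations

# Hypothetical prompt templates for some of the tasks listed above.
# These are plain strings you might send to Gemini Pro; no Google API is used here.
PROMPTS = {
    "summarize": "Summarize the following text in {sentences} sentences:\n\n{text}",
    "grammar": (
        "Is the following text grammatically correct? "
        "Answer 'yes' or 'no'.\n\n{text}"
    ),
    "sentiment": (
        "Classify the sentiment of the following text as "
        "'positive' or 'negative'. Answer with one word.\n\n{text}"
    ),
    "extract_movie": (
        "Extract the name of the movie mentioned in this article. "
        "Reply with the title only.\n\n{text}"
    ),
}

# Default values for template fields other than {text}.
DEFAULTS = {"summarize": {"sentences": 3}}


def build_prompt(task: str, text: str, **options) -> str:
    """Fill the template for `task` with `text` and any extra options."""
    template = PROMPTS.get(task)
    if template is None:
        raise ValueError(f"unknown task: {task!r}")
    # Caller options override defaults; str.format ignores unused keys.
    params = {**DEFAULTS.get(task, {}), **options, "text": text}
    return template.format(**params)


def parse_label(reply: str, allowed: set[str]) -> str | None:
    """Normalize a one-word model reply; return None if it is not an allowed label."""
    label = reply.strip().strip(".!").lower()
    return label if label in allowed else None


if __name__ == "__main__":
    print(build_prompt("sentiment", "I love this phone."))
    print(parse_label(" Positive.\n", {"positive", "negative"}))
```

Constraining the model to a fixed set of one-word answers, and then validating the reply, is one simple way to make classification and sentiment outputs usable by downstream code.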

How can developers use Google Gemini Pro?

Gemini Pro is available on Vertex AI, a platform for customizing and deploying AI models. According to Google exec Burak Gokturk, developers can:

- Discover and use Gemini Pro, or choose from a curated list of more than 130 models from Google, the open-source community, and third parties that meet Google’s strict enterprise safety and quality standards.

- Tailor model behavior to specific domains or company expertise, using tuning tools to supplement training knowledge and even adjusting model weights as needed.

- Augment models with tools that help adapt Gemini Pro to specific contexts or use cases.

- Manage and scale models in production using purpose-built tools that help ensure applications can be easily deployed and maintained once they are built.

- Create search and conversational agents in a no-code environment.

- Innovate responsibly by using Vertex AI’s safety filters, content moderation APIs, and other responsible AI tooling to help ensure that their models do not output inappropriate content.

- Protect data with Google Cloud’s built-in data governance and privacy controls.

One of the specific use cases that Gokturk mentioned is the ability to build ‘agents’ that can process and act on information using Gemini Pro.
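For developers who prefer code over the console, the following is a minimal sketch of calling Gemini Pro through the Vertex AI Python SDK, assuming the `google-cloud-aiplatform` package is installed and application-default credentials are configured. The `vertexai.preview.generative_models` import path and the `"gemini-pro"` model ID reflect the SDK around launch and may differ in newer releases; `make_vertex_generate` and `draft_email` are hypothetical helper names.

```python
from __future__ import annotations

from typing import Callable


def make_vertex_generate(project: str, location: str = "us-central1") -> Callable[[str], str]:
    """Return a function that sends a prompt to Gemini Pro on Vertex AI.

    Assumption: the SDK exposes GenerativeModel under the preview namespace,
    as it did at launch. Imports are deferred so the rest of this module can
    be used (and tested) without the SDK installed.
    """
    import vertexai
    from vertexai.preview.generative_models import GenerativeModel

    vertexai.init(project=project, location=location)
    model = GenerativeModel("gemini-pro")

    def generate(prompt: str) -> str:
        response = model.generate_content(prompt)
        return response.text

    return generate


def draft_email(context: str, tone: str, generate: Callable[[str], str]) -> str:
    """Content-creation example: ask the model for an email in a given tone."""
    prompt = (
        f"Write a short email in a {tone} tone.\n"
        f"Context: {context}"
    )
    return generate(prompt).strip()
```

With real credentials, usage might look like `draft_email("the ship date moved to Friday", "polite", make_vertex_generate("your-project-id"))`, where the project ID is a placeholder. Passing `generate` in as a callable keeps the prompt logic testable with a fake function and no network access.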

What are the indemnity terms and availability of Gemini Pro?

Google is providing indemnity for generated model outputs, including outputs from PaLM 2 and Vertex AI Imagen, in addition to indemnity for claims related to Google’s use of training data. Indemnity coverage for Gemini Pro is planned to begin when the Gemini API becomes generally available. Until then, the Gemini API can be accessed in preview through the Vertex AI console, where the web-based Vertex AI Studio helps developers quickly create prompts that generate useful responses from the available large language models (LLMs).

While Gemini Pro is already accessible, developers will have to wait for Gemini Ultra, the model designed for highly complex tasks. Gokturk said that Gemini Ultra will first be available to select customers, developers, partners, and safety and responsibility experts for early experimentation and feedback, before being rolled out to developers and enterprise customers early next year.