Stretchable E-Skin: Researchers at The University of Texas at Austin have developed a new kind of electronic skin (e-skin) that keeps sensing pressure accurately even when it is stretched. This is a significant breakthrough because previous e-skin technologies lost accuracy as the material stretched.

The new stretchable e-skin is designed to mimic human skin, which stretches and bends without losing its sensitivity to touch. The researchers believe this technology could change how robots interact with their surroundings.

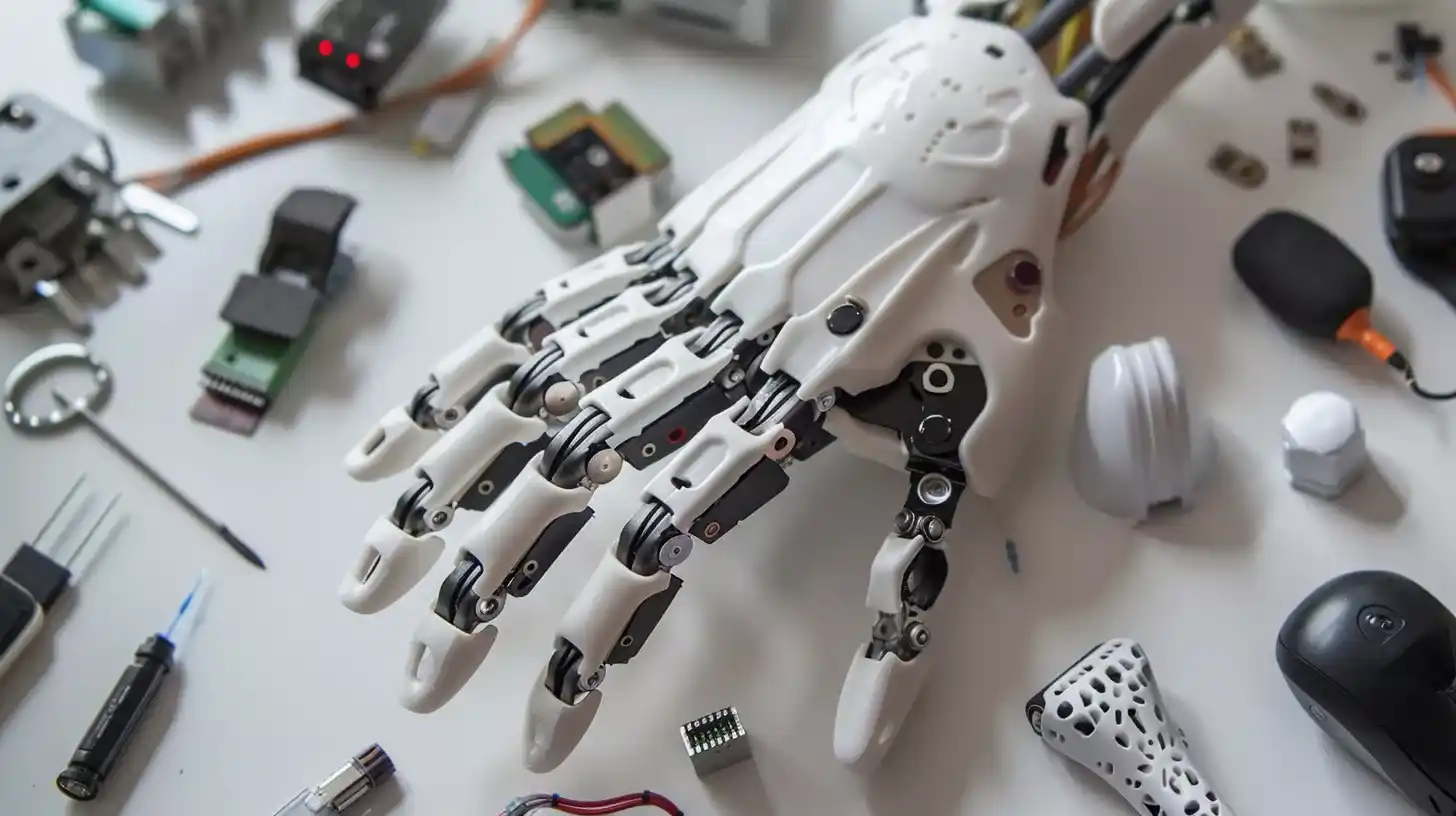

One of the major applications envisioned for the stretchable e-skin is in robotics, specifically robot hands with a human-like sense of touch. This could be especially useful in the medical field, where robots could take on delicate tasks such as checking a patient’s pulse or giving massages. With an aging population placing a strain on medical resources, robots equipped with this e-skin could serve as nurses or therapists.

The development of this Stretchable E-Skin is a major step forward in the field of robotics and has the potential to improve how machines interact with the world around them.

Do Robots Have Senses Like Humans?

While robots don’t possess biological senses like us, they can perceive their environment through a variety of sensors that mimic human senses to varying degrees. Here’s a breakdown:

- Vision: Cameras are a robot’s primary “eyes.” They capture visual data and send it to processing units that interpret shapes, colors, and movement. Unlike human eyes limited to the visible spectrum, robots can have infrared or ultraviolet cameras for broader environmental detection.

- Hearing: Microphones allow robots to detect and analyze sounds. Advanced algorithms can then distinguish specific sounds, like voices or alarms.

- Touch: Pressure sensors embedded in a robot’s grippers or “skin” can detect contact and force. This is crucial for tasks requiring delicate handling or preventing collisions. The new stretchable e-skin mentioned earlier pushes the boundaries of touch sensitivity in robots.

- Taste and Smell: These are the least developed robotic senses. Some robots can use chemical sensors to identify basic gas compositions, but they can’t replicate the full spectrum of taste and smell humans experience.

Do Robots Have Sensitivity?

Sensitivity in robots refers to their ability to perceive subtle changes in their environment and react accordingly. Here’s how it works:

- Sensor Resolution: High-resolution sensors provide more data for the processing unit, allowing for finer distinctions. For example, a high-resolution camera can identify smaller objects or details in an image.

- Processing Power: The processing unit’s ability to analyze sensor data also limits the robot’s sensitivity. Powerful algorithms can interpret complex sensory information and enable the robot to react appropriately.

- Feedback Loops: Advanced robots have feedback loops where sensor data is used to adjust actions. For instance, a robotic arm equipped with pressure sensors can adjust its grip strength based on the object it’s holding.

Current robots achieve varying levels of sensitivity depending on their purpose. Industrial robots may focus on high precision in repetitive tasks, while robots designed for human interaction may prioritize responsiveness to touch or voice commands.
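The feedback loop described above, in which a gripper adjusts its grip strength from pressure readings, can be illustrated with a minimal sketch. It assumes a simple proportional controller and a made-up sensor model (measured force proportional to the motor command); the function names, gain, and stiffness values are hypothetical and not taken from any particular robot.

```python
def adjust_grip(current_force, target_force, command, gain=0.5, max_command=1.0):
    """One step of a proportional feedback loop for grip strength.

    current_force: force reported by the pressure sensor (arbitrary units)
    target_force:  force we want to apply to the object
    command:       current motor command, between 0.0 and max_command
    """
    error = target_force - current_force
    new_command = command + gain * error
    # Clamp so the command never leaves the actuator's valid range.
    return min(max(new_command, 0.0), max_command)


def simulate_grip(target_force=2.0, steps=20, stiffness=4.0):
    """Run the loop against a hypothetical sensor model.

    Assumption for illustration only: measured force = stiffness * command.
    """
    command = 0.0
    for _ in range(steps):
        measured = stiffness * command
        command = adjust_grip(measured, target_force, command, gain=0.1)
    return stiffness * command


if __name__ == "__main__":
    print(f"Force after 20 steps: {simulate_grip():.4f}")
```

Each pass compares the measured force with the target and nudges the command in the direction that shrinks the error, so the grip settles near the target instead of crushing or dropping the object.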

How Does a Robot Simulate a Sense of Touch?

Robots simulate touch primarily through tactile sensors, which can be embedded in a surface such as stretchable e-skin. These sensors can be:

- Pressure Sensors: These measure the amount of force applied to the robot’s body.

- Strain Sensors: These detect deformation or stretching in the robot’s skin.

- Conductive Sensors: These measure changes in electrical conductivity when the robot comes in contact with an object.

By combining data from various tactile sensors, the robot’s processing unit can build a rudimentary picture of an object’s shape and texture; sensing temperature typically requires dedicated thermal sensors as well. The new stretchable e-skin is a promising development because it allows for a more nuanced, human-like sense of touch.
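As a rough illustration of combining tactile readings, the sketch below reduces a small grid of pressure values to a total contact pressure and a contact location. The grid layout, threshold, and function name are assumptions made for this example; they do not describe how the e-skin discussed here is actually read out.

```python
def summarize_contact(pressure_grid, threshold=0.05):
    """Summarize a 2D grid of pressure readings (arbitrary units).

    Returns (total_pressure, centroid_row, centroid_col) computed over
    cells whose reading exceeds `threshold`, or None if nothing is touching.
    """
    total = 0.0
    row_moment = 0.0
    col_moment = 0.0
    for r, row in enumerate(pressure_grid):
        for c, value in enumerate(row):
            if value > threshold:  # ignore sensor noise below the threshold
                total += value
                row_moment += r * value
                col_moment += c * value
    if total == 0.0:
        return None
    # Pressure-weighted average position of the contact.
    return total, row_moment / total, col_moment / total


if __name__ == "__main__":
    grid = [
        [0.0, 0.0, 0.0],
        [0.0, 1.0, 1.0],
        [0.0, 0.0, 0.0],
    ]
    print(summarize_contact(grid))
```

The pressure-weighted centroid gives a single contact point even when several neighboring cells are pressed, which is a simple first step toward estimating where and how firmly an object touches the surface.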

How Can a Robot Sense Its Environment?

Robots use a combination of sensors to build a comprehensive picture of their surroundings. Here are some key players:

- LiDAR (Light Detection and Ranging): This sensor emits pulses of light and measures the reflected light to create a 3D map of the environment.

- Sonar (Sound Navigation and Ranging): Sonar works on the same echo principle as LiDAR but uses sound waves instead of light to map an environment, which makes it particularly useful underwater or in low-light conditions.

- Proprioception Sensors: These internal sensors track the robot’s own movements and joint positions, allowing it to maintain balance and coordinate its actions.

The data from these sensors is fused and interpreted by the robot’s control system, enabling it to navigate obstacles, manipulate objects, and interact with its surroundings safely and efficiently.
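Both LiDAR and sonar can estimate distance from how long an emitted pulse takes to return. The sketch below assumes simple time-of-flight ranging with a constant wave speed; the constant and function names are chosen for this example, and real sensors add calibration and filtering on top of this.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum; a close approximation for air
SPEED_OF_SOUND_AIR_M_S = 343.0      # approximate value for dry air at 20 °C


def range_from_echo(round_trip_s, wave_speed_m_s):
    """Distance to a reflecting surface from a pulse's round-trip time.

    The pulse travels to the target and back, so the one-way distance
    is half of speed * time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return wave_speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # A light pulse that returns after 100 nanoseconds.
    print(range_from_echo(100e-9, SPEED_OF_LIGHT_M_S))
    # A sound pulse in air that returns after 10 milliseconds.
    print(range_from_echo(0.01, SPEED_OF_SOUND_AIR_M_S))
```

Repeating this measurement across many directions is what lets a scanning sensor assemble a map of its surroundings.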

Can We Make Robots That Look Like Humans?

Building robots with human-like appearances has been a longstanding goal in robotics and science fiction. Significant progress has been made in creating robots with realistic-looking skin, hair, and facial features. However, achieving true human likeness remains a challenge.

Here are some hurdles:

- Complexity of the Human Face: The human face has a vast array of subtle details and expressions that are difficult to replicate perfectly in robots.

- Non-verbal Communication: Humans convey a lot of information through facial expressions, body language, and micro-movements. These subtleties are challenging to recreate in robots with current technology.

- The Uncanny Valley: This psychological phenomenon describes our discomfort with robots that appear almost, but not quite, human.

Despite these challenges, researchers are constantly pushing the boundaries of robotic appearance. We may see robots in the future that are indistinguishable from humans in appearance, but replicating the full range of human expression and movement may require significant breakthroughs in materials science and artificial intelligence.

What Makes a Robot Human-Like?

There’s no single factor that defines a human-like robot. It’s a combination of capabilities:

- Physical Dexterity: The ability to move and manipulate objects with a high degree of precision and control, mimicking human hand-eye coordination.

- Cognitive Abilities: Advanced artificial intelligence that allows the robot to learn, adapt, and solve problems in dynamic environments. This includes skills like decision-making, planning, and understanding complex instructions.

- Social Intelligence: The ability to interact with humans in a natural way, including understanding emotions, responding to social cues, and engaging in meaningful conversation.

Beyond these core functionalities, human-like robots may also possess:

- Biomimetic Design: Robots with bodies inspired by human anatomy, not just appearance, can move with more natural flexibility and grace.

- Emotional Intelligence: The ability to recognize and respond to human emotions, although this is a highly complex area of AI research.

The development of human-like robots raises many ethical and philosophical questions. While they hold immense potential for various fields, concerns exist about job displacement, the potential for misuse, and the blurred line between human and machine.

As robotic technology continues to evolve, the definition of “human-like” will likely change. The ultimate goal may not be to create perfect replicas of humans, but rather intelligent machines that can work alongside us, complementing our strengths and augmenting our capabilities.

Conclusion

The development of advanced robotic senses like the new stretchable e-skin opens exciting possibilities for the future. Robots with more human-like perception will be able to interact with the world in richer and more nuanced ways. This could revolutionize fields like healthcare, manufacturing, and exploration.

However, the path to robots that are truly human-like is complex. We need not only advancements in sensory technology but also breakthroughs in artificial intelligence, social interaction, and even our understanding of what it means to be human. As we move forward, careful consideration needs to be given to the ethical implications of increasingly sophisticated robots.

The goal should be to create machines that complement human abilities, not replace them, and to ensure that these advancements benefit all of society.