Artificial Emotional Intelligence (AEI) is changing how we interact with technology by allowing machines to recognize, interpret, and respond to human feelings. Although conventional AI has transformed businesses through automation and optimization, AEI adds a new layer of emotional awareness, making user interactions more empathetic and human-like.

From better patient care to stronger customer relations and more effective learning environments, AEI is opening up opportunities across an array of fields. Machines are no longer expected only to perform tasks; they are now expected to relate to us emotionally.

Ready to explore how Artificial Emotional Intelligence can transform the industry? Let’s dive deeper into the future of human-centered technology!

What Is Artificial Emotional Intelligence?

Artificial Emotional Intelligence, or AEI, refers to AI systems that can detect, analyze, and interpret human emotions. Drawing on advances in natural language processing (NLP), facial recognition, voice analysis, and physiological data tracking, AEI can interpret emotional content with high precision. For example, next-generation systems can identify stress in a person’s voice and use facial microexpressions to recognize emotions such as happiness, anger, or sadness.

Gartner has forecast that by 2026, AI-enhanced emotional intelligence will be embedded in 25% of all customer service interactions, changing the customer-to-business relationship. AEI does not just read affect; it uses this knowledge to adapt interactions, making them more personalized and more empathetic.

How Does AEI Work?

Artificial Emotional Intelligence (AEI) builds on affective computing, the field that allows machines to detect, analyze, and react to human emotions. It uses advanced algorithms, machine learning, and natural language processing to analyze emotional cues like vocal tone, facial expressions, body language, and even physiological signals such as heart rate or skin conductance. By detecting these signals, AEI systems can estimate an individual’s emotional state in real time.

This technology allows machines to adapt their responses accordingly. For example, AI-based systems (such as speech or ambient sound systems) may alter their voice depending on a user’s level of frustration, respond with empathy, or identify signs of stress and adjust their tone or behavior. By integrating emotional awareness, AEI leads to more natural, compassionate, and efficient human-machine interactions.
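As a rough illustration of the detect, analyze, and adapt loop described above, the Python sketch below fuses per-modality stress scores into a single estimate and then picks a response tone. Everything in it is an assumption made for illustration: the `CueScores` fields, the weights, the thresholds, and the tone labels are hypothetical and do not come from any specific AEI product. A real system would obtain the scores from trained models.

```python
from dataclasses import dataclass


@dataclass
class CueScores:
    """Hypothetical per-modality stress scores, each expected in [0, 1]."""
    voice: float
    face: float
    text: float


# Illustrative weights only (an assumption, not taken from any real system).
WEIGHTS = {"voice": 0.5, "face": 0.3, "text": 0.2}


def fuse(scores: CueScores) -> float:
    """Combine the modality scores into one stress estimate (weighted average)."""
    total = (
        WEIGHTS["voice"] * scores.voice
        + WEIGHTS["face"] * scores.face
        + WEIGHTS["text"] * scores.text
    )
    # Clamp so that out-of-range inputs cannot push the estimate outside [0, 1].
    return max(0.0, min(1.0, total))


def choose_tone(stress: float) -> str:
    """Map the stress estimate to a response style (thresholds are assumptions)."""
    if stress >= 0.7:
        return "calming"
    if stress >= 0.4:
        return "reassuring"
    return "neutral"


if __name__ == "__main__":
    reading = CueScores(voice=0.9, face=0.8, text=0.6)
    print(choose_tone(fuse(reading)))
```

A weighted average is only one simple way to combine signals; it is used here because it keeps the flow from cues to adapted response easy to follow.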

Emotional Intelligence in Action: Real-World Applications of AEI

Telemedicine Platforms: Artificial Emotional Intelligence is improving the quality of patient care, particularly in telemedicine. For example, platforms like Empathy use AEI to detect emotional cues in patients’ voices during video consultations. By analyzing tone, pitch, and speech patterns, the system identifies signs of anxiety, depression, or stress.

Research by Sheng et al. (2020) illustrates that early identification of these affective cues can lead to more tailored treatment and improved mental health care. Healthcare professionals can adjust the course of care, respond with empathy, and intervene promptly, which ultimately improves patient satisfaction and treatment success.

Customer Service: Companies such as Cogito have embedded Artificial Emotional Intelligence in call center environments to improve customer experience. AEI systems measure vocal tone and speech characteristics to infer a customer’s emotional state. When a customer shows frustration or dissatisfaction, the system notifies the agent in real time and suggests how to adjust the response for a more pleasant exchange.

According to a study published in Harvard Business Review (2021), emotionally intelligent customer service can increase satisfaction rates by up to 20%, which in turn improves customer retention and loyalty.
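To make the real-time notification idea concrete, here is a minimal Python sketch of one possible approach: keep a rolling average of per-utterance frustration scores and prompt the agent once the average crosses a threshold. This is not Cogito’s implementation. The `FrustrationMonitor` class, the window size, the threshold, and the prompt text are all hypothetical, and the scores are assumed to come from a separate voice-analysis model.

```python
from collections import deque
from typing import Optional


class FrustrationMonitor:
    """Hypothetical rolling-average monitor for per-utterance frustration scores."""

    def __init__(self, window: int = 3, threshold: float = 0.6) -> None:
        # deque(maxlen=...) silently drops the oldest score once the window is full.
        self.scores = deque(maxlen=window)
        self.threshold = threshold

    def update(self, score: float) -> Optional[str]:
        """Record a new score in [0, 1]; return an agent prompt if one is warranted."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        if window_full and average >= self.threshold:
            return "Customer sounds frustrated: slow down and acknowledge the issue."
        return None


if __name__ == "__main__":
    monitor = FrustrationMonitor()
    for utterance_score in (0.2, 0.9, 0.9):
        prompt = monitor.update(utterance_score)
        if prompt is not None:
            print(prompt)
```

Averaging over a short window, rather than reacting to a single utterance, is a design choice meant to avoid alerting the agent on one noisy reading.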

In-Car Assistants: Automotive manufacturers such as Nissan have been implementing AEI in their in-car assistants. By analyzing a driver’s vocal stress, intonation, and speed of speech, AEI systems may be able to identify emotional states such as irritation, boredom, or anxiety.

Research by Tateishi et al. (2022) shows how these affective cues can be used to achieve a safer and more pleasant driving experience. When it identifies stress, the system adjusts the in-cabin environment, for example by playing calming music or suggesting alternative routes.

Education Platforms: In education, AEI tools such as Affectiva are helping educators better understand student engagement. Using facial expressions and body language, AEI systems can tell when students are disengaged or confused. This real-time information lets educators adjust their teaching methods, provide extra assistance, or change the pace of the lesson.

According to a study by Rajendran et al. (2021), introducing AEI to the classroom improves students’ engagement and learning outcomes because the educational experience is adapted to their emotional needs.

Gaming: AEI is also being used in gaming to create more realistic and individualized experiences by monitoring players’ emotional responses in real time. For instance, The Sims 4 can modify an unfolding narrative and the way a character behaves depending on whether the player is in a positive or negative emotional state, which is measured with the help of voice and facial recognition.

Research by Grimshaw et al. (2021) shows that incorporating emotional feedback in games increases player engagement and satisfaction, as the narrative and gameplay feel more dynamic and personalized.

Pioneers of Emotion: Early Adopters of Artificial Emotional Intelligence

Cogito: As an innovator in emotional AI, Cogito employs Artificial Emotional Intelligence in customer service call centers to enhance agent-customer relationships. Its system analyzes voice tone and speech patterns to detect emotions like frustration or satisfaction, helping agents adjust their responses in real time. Because of this early adoption, customer satisfaction and agent performance have improved.

Affectiva: Affectiva is one of the pioneers of emotional AI. Its work focuses on analyzing facial expressions and voice to assess emotion in diverse applications such as education, automotive, and media. The technology is used in education to track student engagement and in vehicles to detect driver mood to enhance safety and comfort.

Nissan: In the automotive industry, Nissan has implemented AEI in its vehicles to improve driver safety and comfort. Its system tracks the driver’s emotional state using vocal tone and speech waveform and dynamically adjusts the in-car environment, such as music and lighting, to enhance the driver experience, especially under stressful conditions.

Spotify: Spotify has started incorporating Artificial Emotional Intelligence into its music recommendation algorithms. By analyzing a user’s mood and emotional state through listening patterns, voice tone (in certain features like podcasts or voice searches), and other behavioral cues, Spotify personalizes music recommendations to suit the listener’s current emotional state, enhancing the overall user experience.

Challenges and Ethical Considerations

Despite its potential, Artificial Emotional Intelligence raises ethical questions. How do we guarantee responsible use of the data collected? Can we trust machines to read emotions reliably and without bias? These issues highlight the need for an ethical framework for AEI. Businesses need to focus on transparency, adopt clear and robust data privacy rules, and continuously evaluate their technologies on ethical grounds throughout the lifecycle to reduce the risk of harm.

An interesting case study is Microsoft’s Xiaoice, an AI character with a high level of emotional intelligence, which took part in a huge number of conversations (600 million+) with a 90% rate of customer satisfaction. But it also highlighted the importance of limits to prevent emotional attachment to technology.

Although Artificial Emotional Intelligence (AEI) can create stronger ties, this requires a proper balance between AEI and human engagement, so that technology does not take the place of real emotional ties. Looking ahead, developers need to make sure that AEI tools support human empathy rather than replace it.

The Road Ahead

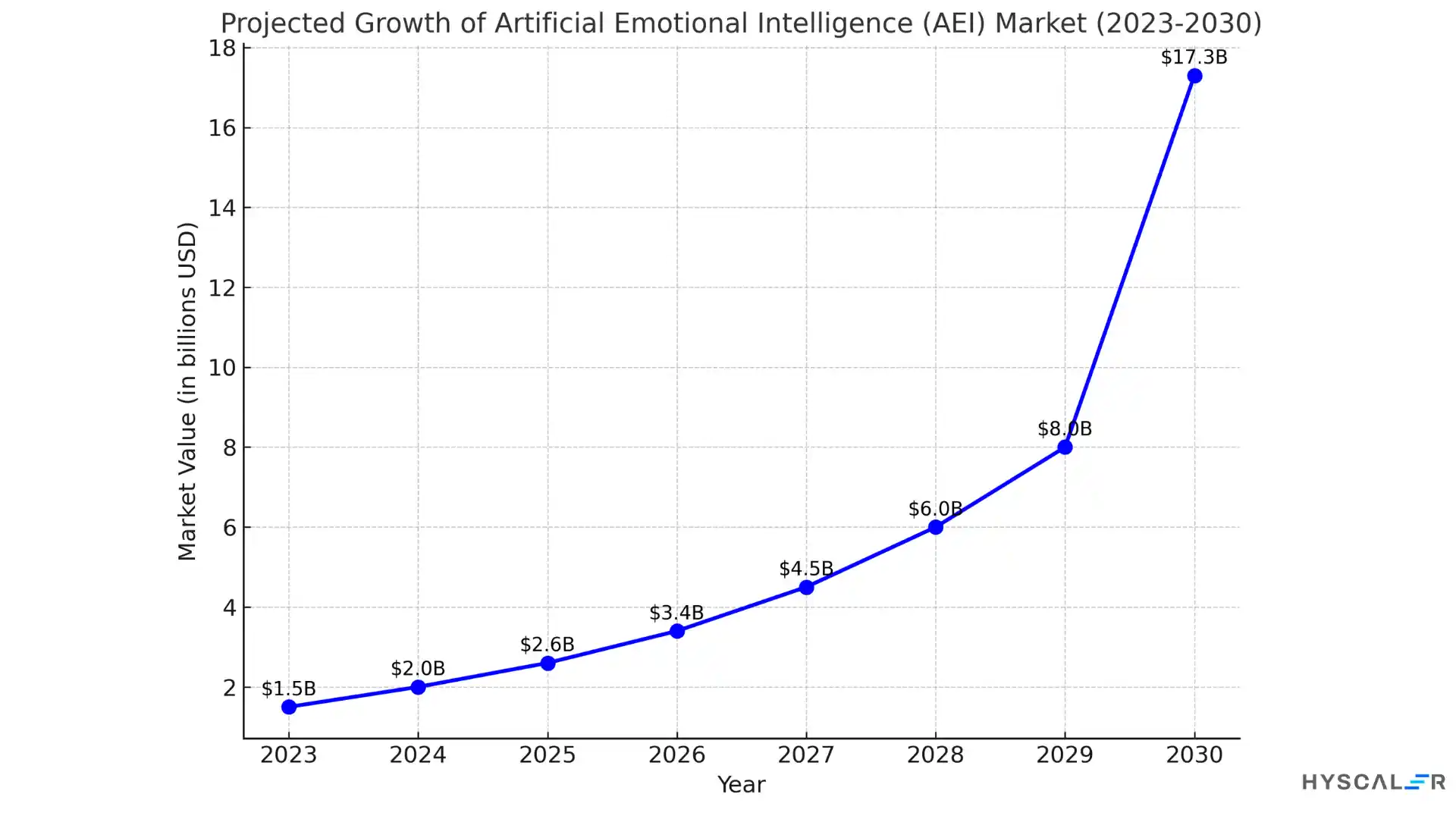

Artificial Emotional Intelligence (AEI) is still in its infancy but is developing at a rapid pace. According to Market Research Future, the global AEI market is estimated to expand at a compound annual growth rate (CAGR) of 25%, reaching a valuation of $173 billion by 2030, so the outlook for AEI is highly encouraging.

This growth illustrates a critical shift towards technology that is more human-like, one that understands and adapts to our emotional needs.
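For readers who want to see what a 25% CAGR implies, the short Python sketch below applies the standard compound-growth formula, end value = start value × (1 + rate)^years. The function names are hypothetical, and the forecast’s starting year is not given in this article, so the number of years used here is purely a placeholder assumption.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after `years` of compound growth at rate `cagr` (0.25 means 25%)."""
    return start_value * (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Starting value that would grow to `end_value` after `years` at `cagr`."""
    return end_value / (1.0 + cagr) ** years


if __name__ == "__main__":
    # ASSUMED_YEARS is a placeholder: the article does not state the base year.
    ASSUMED_YEARS = 5
    base = implied_start_value(173.0, 0.25, ASSUMED_YEARS)
    print(f"Implied starting size: ${base:.1f}B over {ASSUMED_YEARS} assumed years")
```

Changing `ASSUMED_YEARS` shows how sensitive the implied starting market size is to the length of the forecast window.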

In the dawning future of AI, AEI is a vital reminder of the need for empathy in our digital society. By embedding emotional intelligence into machines, we’re not just creating smarter technology; we’re building systems that can connect with us on a deeply human level. This has exciting potential applications across sectors, from healthcare to call centers, education, and beyond. As AEI matures, we can look forward to more profound and empathetic interactions with the technologies influencing our lives.

Looking ahead, Artificial Emotional Intelligence has the potential to revolutionize how we interact with technology, bringing a more emotionally intuitive experience to our digital world. The road to an emotionally intelligent future is only just beginning, and the journey ahead is likely to be as dramatic as it is rewarding.
