Microsoft has confirmed the rumors that it has developed its own custom AI chip and CPU for its Azure cloud services. The company says that these chips will enable it to train large language models and run cloud AI workloads more efficiently and cost-effectively. The chips will also help Microsoft and its enterprise customers prepare for a future that is driven by AI.

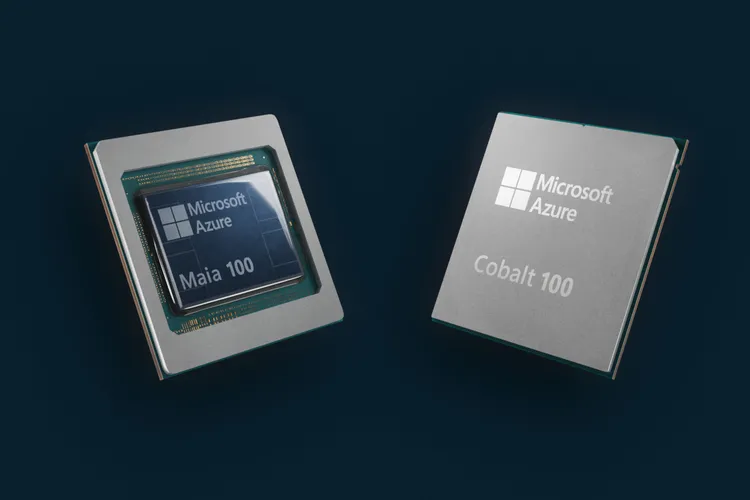

Microsoft custom AI chip: Maia 100

The Microsoft custom AI chip is called Maia 100, named after a bright blue star. It is an AI accelerator that is designed to run cloud AI workloads, such as large language model training and inference. Maia 100 will power some of the company’s biggest AI projects on Azure, including its partnership with OpenAI, where Microsoft provides the computing resources for all of OpenAI’s workloads. Microsoft has been working closely with OpenAI to design and test Maia 100 with its models.

“Microsoft first shared their designs for the Maia chip, and we’ve worked together to refine and test it with our models,” says Sam Altman, CEO of OpenAI. “Azure’s end-to-end AI architecture, now optimized down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers.”

The Maia 100 is manufactured on a 5-nanometer TSMC process and has 105 billion transistors, about 30 percent fewer than the 153 billion transistors on AMD’s MI300X AI GPU, itself a competitor to Nvidia’s GPUs.
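The transistor comparison above is simple to check. The short Python sketch below uses only the two figures quoted in this article; the function name `percent_fewer` is made up for illustration.

```python
def percent_fewer(smaller: float, larger: float) -> float:
    """Return how much smaller `smaller` is than `larger`, in percent."""
    return (1 - smaller / larger) * 100


# Transistor counts in billions, as quoted in the article.
maia_transistors = 105
mi300x_transistors = 153

gap = percent_fewer(maia_transistors, mi300x_transistors)
print(f"Maia 100 has about {gap:.0f} percent fewer transistors than MI300X")
```

The exact figure is a little over 31 percent, which matches the "about 30 percent" quoted in the text.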

Maia 100 supports Microsoft’s first implementation of sub-8-bit data types, known as MX data types, a new standard for AI models that Microsoft is co-developing with AMD, Arm, Intel, Meta, Nvidia, and Qualcomm. Supporting MX data types lets Microsoft co-design hardware and software around Maia 100 and achieve faster model training and inference times.
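The article does not explain how MX data types work. As a rough illustration only, the Python sketch below shows the general idea behind block-scaled low-precision formats: a group of values shares one power-of-two scale, and each value is stored as a small integer. The block size of 32, the integer element type, and all function names are assumptions chosen for this example. This is not Microsoft’s implementation and not the exact encoding defined by the MX standard.

```python
import math

BLOCK_SIZE = 32  # assumption for illustration: values that share one scale


def quantize_block(values, bits=8):
    """Quantize one block to signed integers sharing a power-of-two scale."""
    qmax = 2 ** (bits - 1) - 1  # largest magnitude an element can hold
    peak = max(abs(v) for v in values)
    if peak == 0:
        return 0, [0] * len(values)
    # Smallest exponent such that peak / 2**exponent still fits in qmax.
    exponent = math.ceil(math.log2(peak / qmax))
    scale = 2.0 ** exponent
    # Clamp to guard against floating-point edge cases in the log above.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return exponent, quantized


def quantize(values, bits=8):
    """Split values into blocks and quantize each block independently."""
    return [quantize_block(values[i:i + BLOCK_SIZE], bits)
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks):
    """Rebuild approximate floating-point values from quantized blocks."""
    restored = []
    for exponent, quantized in blocks:
        restored.extend(q * 2.0 ** exponent for q in quantized)
    return restored


weights = [0.5, -1.25, 3.0, 0.01]
blocks = quantize(weights)
restored = dequantize(blocks)
print(blocks)    # one (exponent, integers) pair per block
print(restored)  # values after the round trip
```

In this run the smallest weight, 0.01, falls below half of the shared step size and is rounded to zero. That is the trade-off such formats make: less memory and bandwidth per value in exchange for a small loss of precision. Passing a smaller `bits` value makes the steps coarser still.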

Maia 100 is also the first complete liquid-cooled server processor built by Microsoft. The company has created a unique rack to house Maia 100 server boards, which includes a “sidekick” liquid chiller that acts like a car radiator or a gaming PC cooler to keep the chip’s surface temperature low. This enables Microsoft to pack more servers in its data centers without increasing the power consumption or the physical space.

Currently, Maia 100 is being tested on GPT 3.5 Turbo, the same model that powers ChatGPT, Bing AI workloads, and GitHub Copilot. Microsoft plans to make Maia 100 available to its customers in 2024. The company is not sharing the exact specifications or performance benchmarks of Maia 100 yet, but it says that it will complement its existing partnerships with Nvidia and AMD, which are still very important for its AI cloud infrastructure.

Microsoft custom CPU: Cobalt 100

The Microsoft custom CPU is called Cobalt 100, named after the blue pigment. It is a 128-core chip that is based on an Arm Neoverse CSS design and customized for Microsoft. It is designed to power general cloud services on Azure, such as Microsoft Teams and SQL servers. Microsoft says that Cobalt 100 is highly performant and power-efficient, and that it allows the company to control the performance and power consumption per core and per virtual machine.

Microsoft is currently testing Cobalt 100 on various workloads and plans to offer virtual machines to its customers next year for different applications. The company is not comparing Cobalt 100 with Amazon’s Graviton 3 servers, which are also based on Arm and available on AWS, but it claims that Cobalt 100 performs up to 40 percent better than the commercial Arm servers that it is currently using for Azure. Microsoft is not sharing the full system specifications or benchmarks of Cobalt 100 yet.

Both Maia 100 and Cobalt 100 are part of Microsoft’s effort to rethink its cloud infrastructure for the era of AI and optimize every layer of its hardware and software stack. The company says that it has a long history in silicon development, dating back to its collaboration on Xbox and Surface chips, and that it started architecting its cloud hardware stack in 2017. The company also says that it is diversifying its supply chain and giving its customers more infrastructure choices by developing its own chips.

Microsoft has also hinted that Maia 100 and Cobalt 100 are not the end of its custom chip series and that it is already working on the next-generation versions of these chips. However, the company is not revealing its roadmaps for these chips yet. The company hopes that its custom chips will help it accelerate its AI ambitions and lower the cost of AI for its customers.

The company has already launched Copilot for Microsoft 365, an AI-powered Office assistant that costs $30 per month per user and is limited to its biggest enterprise customers. The company is also rebranding Bing Chat, its AI-powered conversational search engine, as Copilot. Maia 100 could soon help balance the demand for the AI chips that power these new features.

What is the difference between Maia 100 and Cobalt 100?

The difference between the Maia 100 and the Cobalt 100 is that they are designed for different purposes. Maia 100 is an AI accelerator that is specialized for running cloud AI workloads, such as large language model training and inference.

Cobalt 100 is a general-purpose CPU that is optimized for running cloud services, such as Microsoft Teams and SQL servers.

Maia 100 is also liquid-cooled, while Cobalt 100 is not. Cobalt 100 is based on an Arm design, while Maia 100 is a custom AI accelerator; both are customized for Microsoft.