Llama models have been downloaded more than 100 million times, and much of that innovation is fueled by open models. To support trustworthy development by these creators, Meta is introducing Purple Llama, an umbrella project that brings together tools and evaluations to help developers build responsibly with open generative AI models.

What is Purple Llama?

Purple Llama is a project that provides tools to help developers use AI responsibly. It offers trust and safety tools and evaluations intended to level the playing field, so that any developer can deploy generative AI models and experiences responsibly.

Why purple?

Meta borrows a concept from cybersecurity: addressing the challenges of generative AI requires both an attack (red team) and a defense (blue team) posture. Purple teaming combines red team and blue team responsibilities in a collaborative approach to evaluating and mitigating potential risks.

Purple Llama is launching with cybersecurity tools and safety checks. This is only the beginning, and more features are planned. The project’s components are licensed for both research and commercial use. Meta sees this as a significant step toward helping developers collaborate and toward standardizing trust and safety tools for generative AI.

Cybersecurity

Meta is releasing what it believes is the first industry-wide set of cybersecurity safety evaluations for Large Language Models (LLMs). Meta’s security experts built these benchmarks on industry guidance and standards. The goal is to provide tools that address the risks outlined in the White House commitments. This release of Purple Llama benchmarks aims to improve security and safety in this area, and includes:

- Metrics for quantifying the level of cybersecurity risk in LLMs

- Tools for evaluating how often LLMs suggest insecure code

- Tools for evaluating LLMs so that it becomes harder to use them to generate malicious code or to assist in carrying out cyber attacks

Meta expects these tools to reduce how often LLMs suggest insecure code and to make LLMs less useful to cyber adversaries.
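To make the idea of measuring insecure code suggestions concrete, here is a minimal sketch in Python. It is not the Purple Llama benchmark itself: the pattern list, the function names `find_issues` and `insecure_rate`, and the sample completions are all hypothetical, chosen only to show how a rate of flagged completions could be computed.

```python
import re

# Hypothetical patterns for illustration only; a real benchmark would rely on
# a much larger, curated rule set rather than three regular expressions.
INSECURE_PATTERNS = {
    "weak-hash": re.compile(r"hashlib\.(md5|sha1)\("),
    "shell-injection": re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True"),
    "unsafe-eval": re.compile(r"\beval\("),
}


def find_issues(code: str) -> list[str]:
    """Return the names of all patterns that match the given code snippet."""
    return [name for name, pattern in INSECURE_PATTERNS.items()
            if pattern.search(code)]


def insecure_rate(completions: list[str]) -> float:
    """Fraction of completions flagged by at least one pattern."""
    if not completions:
        return 0.0
    flagged = sum(1 for code in completions if find_issues(code))
    return flagged / len(completions)


# Made-up model completions standing in for real LLM output.
samples = [
    "import hashlib\ndigest = hashlib.md5(data).hexdigest()",
    "import hashlib\ndigest = hashlib.sha256(data).hexdigest()",
    "subprocess.run(cmd, shell=True)",
    "result = eval(user_input)",
]

print(insecure_rate(samples))  # prints 0.75
```

Three of the four samples match a pattern, so the rate is 0.75. Tracking a number like this across model versions is one simple way to see whether insecure suggestions are becoming less frequent.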

Input and Output Safeguards

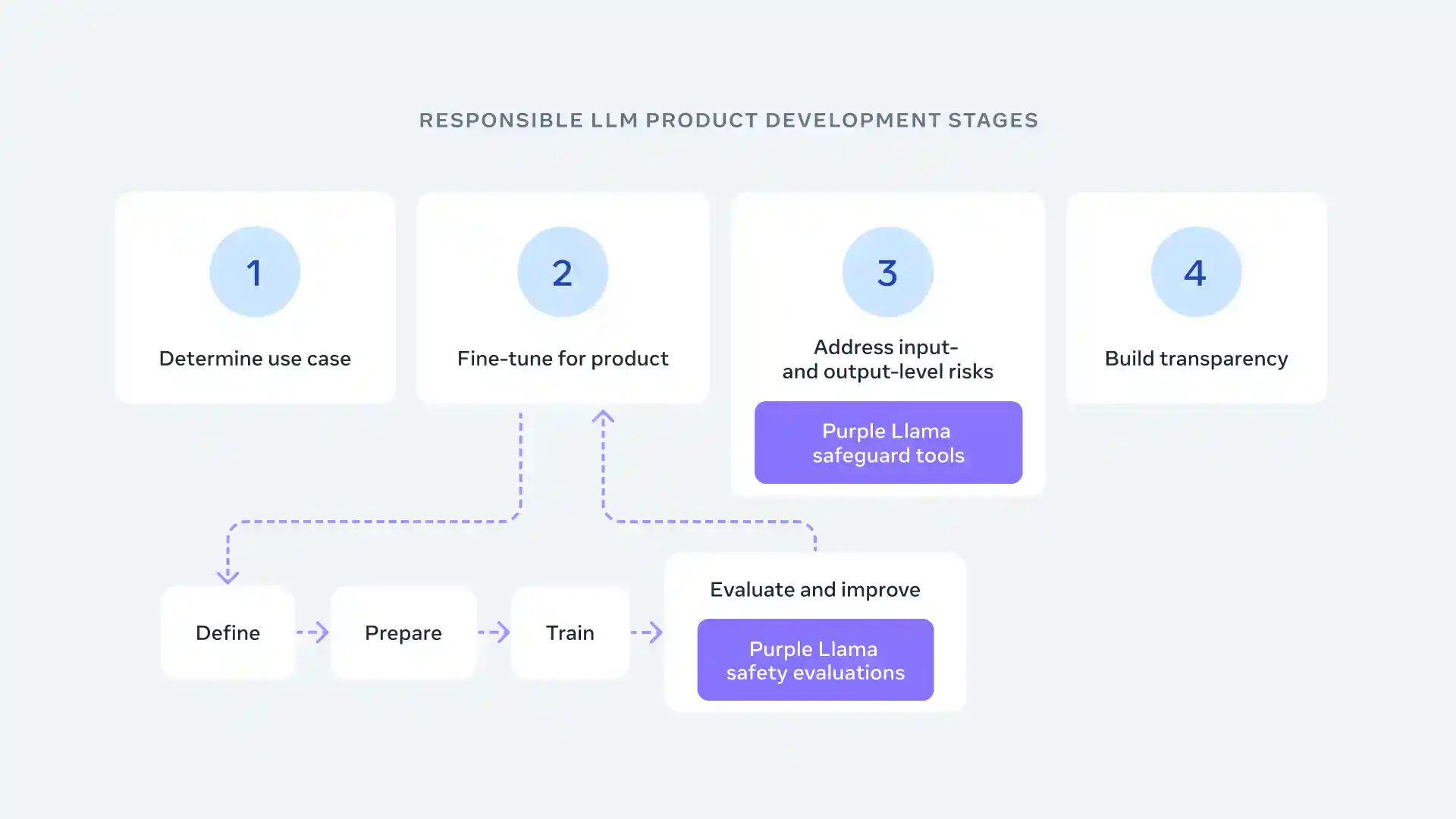

In the Llama 2 Responsible Use Guide, Meta recommends checking and filtering all inputs to and outputs from the LLM according to content guidelines appropriate to the application.

To support this, Meta is releasing Llama Guard, an openly available model that helps developers avoid generating potentially risky outputs. Meta is publishing its methodology and results openly in the interest of transparent science. The model is trained on a mix of publicly available datasets so that it can detect common types of potentially risky content. The longer-term goal is to let developers customize future versions for their own use cases, which should make best practices easier to adopt and strengthen the open ecosystem.
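The pattern of checking both what goes into a model and what comes out can be sketched as a thin wrapper around the generation call. The example below is an illustration under stated assumptions, not Llama Guard’s interface: `is_safe` is a toy keyword check standing in for a trained safety classifier, and `guarded_generate`, `echo_model`, and the blocked terms are hypothetical names invented for this sketch.

```python
from typing import Callable

# Hypothetical blocklist standing in for a real safety classifier.
BLOCKED_TERMS = ("build a bomb", "steal credentials")

REFUSAL = "Sorry, I can't help with that."


def is_safe(text: str) -> bool:
    """Toy check; a real system would call a trained classifier here."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    check: Callable[[str], bool] = is_safe,
) -> str:
    """Filter the prompt before generation and the response after it."""
    if not check(prompt):        # input safeguard
        return REFUSAL
    response = generate(prompt)
    if not check(response):      # output safeguard
        return REFUSAL
    return response


def echo_model(prompt: str) -> str:
    """Stand-in for an LLM call."""
    return f"You asked: {prompt}"


print(guarded_generate("What is a llama?", echo_model))
print(guarded_generate("How do I steal credentials?", echo_model))
```

Passing the check as a parameter keeps the wrapper independent of any particular classifier, so the keyword check could be swapped for a model-based one without changing the calling code.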

Open Ecosystem

Meta has long taken an open approach to AI, built on exploratory research, open science, and collaboration. Llama 2 launched in July with more than 100 partners, and many of those same partners are now working with Meta on open trust and safety. Purple Llama’s collaborators include AMD, AWS, Google Cloud, Microsoft, and many more. Meta’s view is that an open ecosystem, in which these organizations work together, makes AI safer and more reliable.

Conclusion

In conclusion, Purple Llama aims to pair innovation in AI development with responsibility. For AI creators, adopting it signals a commitment to advancing the technology while upholding ethical standards.

As Purple Llama grows, it reflects a shift in how AI development is framed. The goal is not only to create more capable AI but also to foster AI that aligns with human values and is safer to deploy.