Introduction

In the rapidly evolving world of AI, systems are becoming increasingly sophisticated, moving from simple text generators to intelligent agents capable of complex reasoning, planning, and real-world action. But here is the challenge: for AI to truly impact our daily lives, it needs to interact with the world beyond generating text. It needs to send emails, query databases, control smart devices, analyze spreadsheets, and perform other tasks.

This is where tool calling protocols become crucial for building sophisticated, real-world applications. Two major protocols have emerged as leading solutions: Universal Tool Calling Protocol (UTCP) and Model Context Protocol (MCP). Both enable AI systems to interact with external tools and services, but they take fundamentally different approaches.

This article will help you understand both protocols, their strengths and trade-offs, and, most importantly, which one is right for your specific needs, whether you are a developer building your first AI application or an architect designing enterprise-scale systems. Teams evaluating centralized architectures also often compare MCP server alternatives to weigh the trade-offs in control, scalability, and operational overhead.

Understanding AI Agents and Tool Calling

What Are AI Agents?

Before diving into UTCP and MCP, it’s essential to understand what we mean by AI agents and how they differ from basic language models.

Traditional LLMs (Large Language Models) are essentially sophisticated text generators. You give them a prompt, they generate a response, and that’s it. They’re reactive and stateless.

AI agents, by contrast, extend LLMs with planning, memory, and tool-usage capabilities. They are autonomous systems that can:

- Plan multi-step tasks and break complex goals into actionable steps

- Maintain memory of previous interactions and context

- Make decisions based on intermediate results

- Use external tools to accomplish objectives they can’t handle alone

- Adapt their approach based on feedback and changing conditions

However, these agents require standardized methods to access external services, which is where protocols such as MCP and UTCP come into play.

Think of it this way: An AI agent is like a skilled worker, while MCP and UTCP are like different telephone systems that worker can use to call for help or information.

What is Tool Calling and Why it Matters

Tool calling enables AI agents to extend their capabilities by interacting with external systems. The process involves:

- Decision: Agent determines which tool is required.

- Invocation: Agent formats and sends a request to the tool.

- Execution: Tool processes the request.

- Response Handling: Agent parses the tool’s response and incorporates it into its reasoning.
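The four stages above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not a production agent: the `decide` function stands in for the LLM's choice of tool, and `get_weather`, `TOOLS`, and the JSON envelope are hypothetical names invented for the example.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: tool names mapped to plain Python callables.
TOOLS: dict[str, Callable[..., Any]] = {
    "get_weather": lambda city: {"city": city, "temp_c": 21},
}


def decide(user_request: str) -> dict:
    """Decision: stand-in for the LLM picking a tool and its arguments."""
    return {"tool": "get_weather", "arguments": {"city": "Paris"}}


def invoke(call: dict) -> str:
    """Invocation: serialize the call into the format the tool expects."""
    return json.dumps(call)


def execute(request: str) -> str:
    """Execution: the tool side parses the request and runs the function."""
    call = json.loads(request)
    result = TOOLS[call["tool"]](**call["arguments"])
    return json.dumps({"ok": True, "result": result})


def handle_response(response: str) -> str:
    """Response handling: parse the result and fold it into the answer."""
    result = json.loads(response)["result"]
    return f"It is {result['temp_c']} C in {result['city']}."


def run_agent(user_request: str) -> str:
    call = decide(user_request)
    request = invoke(call)
    response = execute(request)
    return handle_response(response)
```

In a real agent, `decide` would be a model call and `execute` would run in a separate service; the point here is only that each stage has a clear input and output.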

Consider this user request: “Send a summary of this week’s sales to the team leads”

An AI agent needs to:

- Query the database to get sales data

- Analyze the data to generate insights

- Look up contact information for team leads

- Generate the summary in an appropriate format

- Send emails to the relevant people

Each step requires different tools, different authentication methods, and different data formats. This is where standardized protocols become essential.
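To see how the five steps chain together, here is a rough sketch in which every tool is replaced by a stub. All function names, the sample rows, and the email addresses are assumptions made for illustration; real versions would call a database, a directory service, and an email API.

```python
from typing import Any


def query_sales() -> list[dict[str, Any]]:
    """Step 1: stand-in for a database query."""
    return [{"region": "EMEA", "total": 120}, {"region": "APAC", "total": 80}]


def analyze(rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Step 2: derive simple insights from the raw rows."""
    top = max(rows, key=lambda row: row["total"])
    return {"total": sum(row["total"] for row in rows), "top_region": top["region"]}


def lookup_team_leads() -> list[str]:
    """Step 3: stand-in for a contact directory lookup."""
    return ["lead.a@example.com", "lead.b@example.com"]


def format_summary(insights: dict[str, Any]) -> str:
    """Step 4: render the insights as plain text."""
    return f"Weekly sales: {insights['total']} (top region: {insights['top_region']})"


def send_email(to: str, body: str) -> dict[str, str]:
    """Step 5: stand-in for an email API call."""
    return {"to": to, "body": body, "status": "queued"}


def send_weekly_summary() -> list[dict[str, str]]:
    # Each step consumes the output of an earlier one.
    rows = query_sales()
    insights = analyze(rows)
    leads = lookup_team_leads()
    summary = format_summary(insights)
    return [send_email(to, summary) for to in leads]
```

Even in this toy version, each stub hides a different backend, which is why the real integrations diverge so quickly in authentication and data format.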

The Core Problem: Connecting AI to the Real World

Imagine you’re building an AI assistant for your business. It needs to interact with:

- Your CRM system (Salesforce API)

- Email service (Gmail API)

- Database (PostgreSQL)

- File storage (AWS S3)

- Slack for notifications

- Analytics platform (Google Analytics)

Without standardized protocols, you’d have to write custom integration code for each service, as in the example below:

    # Custom integrations everywhere
    import boto3
    import psycopg2


    class CustomIntegrations:
        # Helpers such as authenticate_salesforce() are omitted for brevity

        def get_crm_data(self, contact_id):
            # Salesforce-specific authentication
            sf_auth = self.authenticate_salesforce()
            # Salesforce-specific API calls
            return sf_auth.query(f"SELECT * FROM Contact WHERE Id='{contact_id}'")

        def send_email(self, recipient, subject, body):
            # Gmail-specific authentication
            gmail_service = self.authenticate_gmail()
            # Gmail-specific message format
            message = self.create_gmail_message(recipient, subject, body)
            return gmail_service.users().messages().send(userId='me', body=message).execute()

        def query_database(self, sql):
            # PostgreSQL-specific connection handling
            conn = psycopg2.connect(self.db_connection_string)
            cursor = conn.cursor()
            cursor.execute(sql)
            return cursor.fetchall()

        def upload_file(self, filename, content):
            # AWS S3-specific authentication and upload
            s3_client = boto3.client('s3')
            return s3_client.put_object(Bucket=self.bucket, Key=filename, Body=content)

As we add more tools, this approach becomes unmanageable for the following reasons:

- Code Complexity: Each tool requires custom code for authentication, data formatting, error handling, and response parsing.

- Maintenance Overhead: API changes in any service require updates to your custom integration code.

- Inconsistent Patterns: Each service has different conventions, making it hard for AI agents to learn universal patterns.

- Security Challenges: Managing credentials and permissions across multiple systems becomes a nightmare.

- Testing Difficulties: Each integration needs separate test suites and mocking strategies.

What We Need: A Universal Standard

The solution is standardized protocols that define consistent rules for:

- Discovery

  - How do AI agents find available tools?

  - How do tools describe their capabilities?

  - What metadata is needed for proper usage?

- Communication

  - What message formats should be used?

  - How should requests and responses be structured?

  - What transport protocols are supported?

- Authentication & Security

  - How should credentials be managed?

  - What security models are appropriate?

  - How can we ensure secure communication?

- Error Handling

  - How should errors be reported consistently?

  - What retry mechanisms should be implemented?

  - How can we handle partial failures gracefully?

- State Management

  - How should context be maintained across multiple tool calls?

  - When should sessions be stateful vs. stateless?

  - How can we handle long-running operations?
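To make a few of these concerns concrete, the sketch below shows one possible shape for a uniform tool layer covering discovery, communication, and error handling. It is not part of UTCP or MCP; `ToolSpec`, `ToolResult`, and `Registry` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ToolSpec:
    """Discovery metadata: the tool's name, purpose, and required inputs."""
    name: str
    description: str
    required: list[str]
    handler: Callable[..., Any]


@dataclass
class ToolResult:
    """Uniform response envelope so every failure is reported the same way."""
    ok: bool
    value: Any = None
    error: Optional[str] = None


class Registry:
    def __init__(self) -> None:
        self._tools: dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def list_tools(self) -> list[dict[str, Any]]:
        """Discovery: describe each tool without exposing its implementation."""
        return [
            {"name": t.name, "description": t.description, "required": t.required}
            for t in self._tools.values()
        ]

    def call(self, name: str, **arguments: Any) -> ToolResult:
        """Communication and error handling: one call path for every tool."""
        spec = self._tools.get(name)
        if spec is None:
            return ToolResult(ok=False, error=f"unknown tool: {name}")
        missing = [p for p in spec.required if p not in arguments]
        if missing:
            return ToolResult(ok=False, error=f"missing parameters: {missing}")
        try:
            return ToolResult(ok=True, value=spec.handler(**arguments))
        except Exception as exc:  # surface tool failures in the same envelope
            return ToolResult(ok=False, error=str(exc))
```

Authentication and state management are deliberately left out; those are the areas where the two protocols differ most.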

This is exactly where UTCP and MCP come in, but they take fundamentally different approaches to these challenges.

MCP vs UTCP: Understanding Two Philosophies

Before we dive deep into each protocol, it’s important to understand the philosophical differences:

UTCP Philosophy: “Keep it simple and direct”

- Tools should be self-describing

- AI agents should communicate directly with tools

- Minimize infrastructure overhead

- Leverage existing protocols and patterns

MCP Philosophy: “Centralize and control”

- All tool interactions should go through a managed layer

- Provide enterprise-grade governance and security

- Maintain session state and context

- Offer consistent interfaces regardless of underlying tools

Both have their strengths. They are just built for different scenarios and priorities. Let’s dig into each in detail.

Universal Tool Calling Protocol (UTCP)

The Philosophy: Direct and Simple

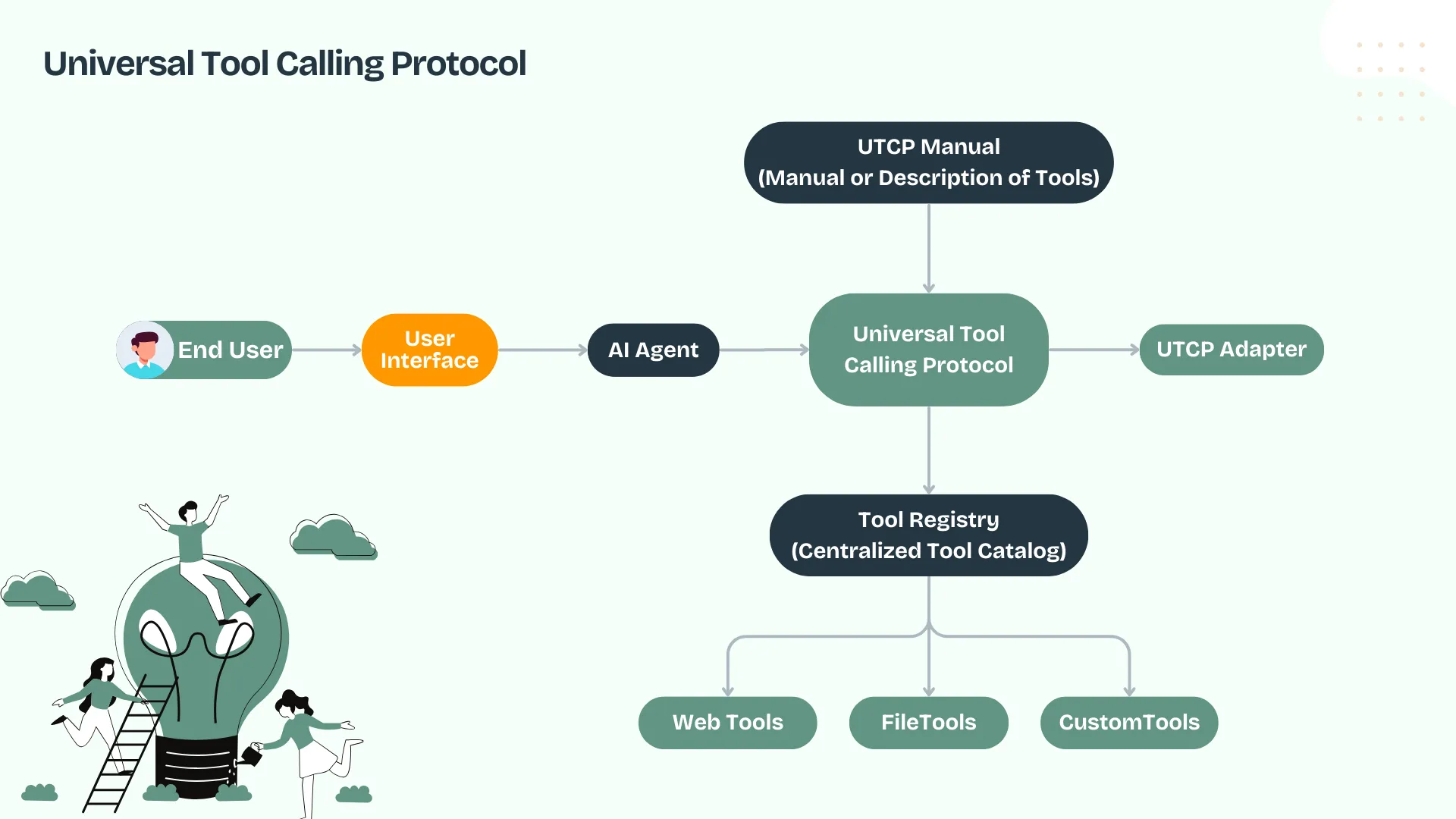

UTCP follows a “manual-based” approach where each tool publishes a lightweight JSON specification (a “manual”) that describes how to use it. AI agents discover these manuals and then communicate directly with tools using their native protocols.

Real-world analogy: Imagine each tool as a restaurant with its own menu posted outside. Customers (AI agents) read the menu to understand what’s available and how to order, then interact directly with the restaurant.

Core Architecture

Discovery: Agent → Tool Directory → [List of Tool Manuals]

Communication: Agent → Tool (direct, using tool's native protocol)

The beauty of UTCP lies in its simplicity: there is no middleware, no proxy servers, no complex infrastructure. Just JSON specifications and direct communication.

How UTCP Works: Step by Step

Step 1: Tool Definition

Each tool publishes a JSON manual describing its capabilities:

    {
      "name": "email_sender",
      "version": "1.2.0",
      "description": "Send emails via SMTP with authentication",
      "tool_call_template": {
        "call_template_type": "http",
        "url": "https://api.emailservice.com/v1/send",
        "http_method": "POST",
        "headers": {
          "Content-Type": "application/json",
          "Authorization": "Bearer ${EMAIL_API_TOKEN}"
        },
        "body_template": {
          "to": "{{to}}",
          "subject": "{{subject}}",
          "body": "{{body}}",
          "format": "{{format|default:text}}"
        }
      },
      "parameters": {
        "to": {
          "type": "string",
          "description": "Recipient email address",
          "required": true
        },
        "subject": {
          "type": "string",
          "description": "Email subject line",
          "required": true
        },
        "body": {
          "type": "string",
          "description": "Email content",
          "required": true
        },
        "format": {
          "type": "string",
          "enum": ["text", "html"],
          "description": "Email format",
          "required": false
        }
      }
    }

Step 2: Tool Discovery

AI agents discover available tools by querying a discovery endpoint:

    GET /utcp
    Host: your-tools-server.com

Response:

    {
      "tools": [
        "https://your-tools-server.com/tools/email-sender.json",
        "https://your-tools-server.com/tools/database-query.json",
        "https://your-tools-server.com/tools/file-upload.json"
      ]
    }

Step 3: Direct Tool Invocation

The agent reads the tool manual and makes direct calls:

    import os

    import requests

    # Agent discovers and loads tool manual
    tool_manual = requests.get("https://your-tools-server.com/tools/email-sender.json").json()

    # Agent makes direct call based on manual
    response = requests.post(
        "https://api.emailservice.com/v1/send",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.getenv('EMAIL_API_TOKEN')}"
        },
        json={
            "to": "team@company.com",
            "subject": "Weekly Sales Report",
            "body": "This week's sales exceeded expectations...",
            "format": "html"
        }
    )

Why Use UTCP?

- Minimal Overhead: No wrapper servers to build or maintain

- Protocol Flexibility: Supports HTTP, SSE, WebSockets, CLI, gRPC, and more

- Performance: Direct calls reduce latency by 30–40% compared to MCP.
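The invocation in Step 3 hard-codes the URL, headers, and body. As a rough sketch of how an agent could instead derive the request from the manual itself, the helper below fills the `{{param}}` and `{{param|default:value}}` placeholders and resolves `${ENV_VAR}` references. The placeholder syntax is taken from the example manual in Step 1; `build_request` and the trimmed `MANUAL` are hypothetical and are not the official UTCP client.

```python
import os
import re

# Trimmed copy of the Step 1 manual, kept inline so the sketch is self-contained.
MANUAL = {
    "tool_call_template": {
        "url": "https://api.emailservice.com/v1/send",
        "http_method": "POST",
        "headers": {"Authorization": "Bearer ${EMAIL_API_TOKEN}"},
        "body_template": {
            "to": "{{to}}",
            "subject": "{{subject}}",
            "body": "{{body}}",
            "format": "{{format|default:text}}",
        },
    },
    "parameters": {
        "to": {"required": True},
        "subject": {"required": True},
        "body": {"required": True},
        "format": {"required": False},
    },
}

PLACEHOLDER = re.compile(r"\{\{(\w+)(?:\|default:([^}]*))?\}\}")
ENV_VAR = re.compile(r"\$\{(\w+)\}")


def build_request(manual: dict, arguments: dict) -> dict:
    """Turn a manual plus arguments into keyword arguments for an HTTP call."""
    for name, spec in manual["parameters"].items():
        if spec.get("required") and name not in arguments:
            raise ValueError(f"missing required parameter: {name}")

    def fill(match: re.Match) -> str:
        name, default = match.group(1), match.group(2)
        if name in arguments:
            return str(arguments[name])
        if default is not None:
            return default
        raise ValueError(f"no value for placeholder: {name}")

    template = manual["tool_call_template"]
    body = {k: PLACEHOLDER.sub(fill, v) for k, v in template["body_template"].items()}
    # Secrets come from the environment, so they never appear in the manual.
    headers = {
        k: ENV_VAR.sub(lambda m: os.environ.get(m.group(1), ""), v)
        for k, v in template["headers"].items()
    }
    return {"method": template["http_method"], "url": template["url"],
            "headers": headers, "json": body}
```

The returned dictionary is shaped so it could be passed to `requests.request(**build_request(MANUAL, args))`, which keeps the agent code free of tool-specific details.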

Model Context Protocol (MCP)

The Philosophy: Centralized Control

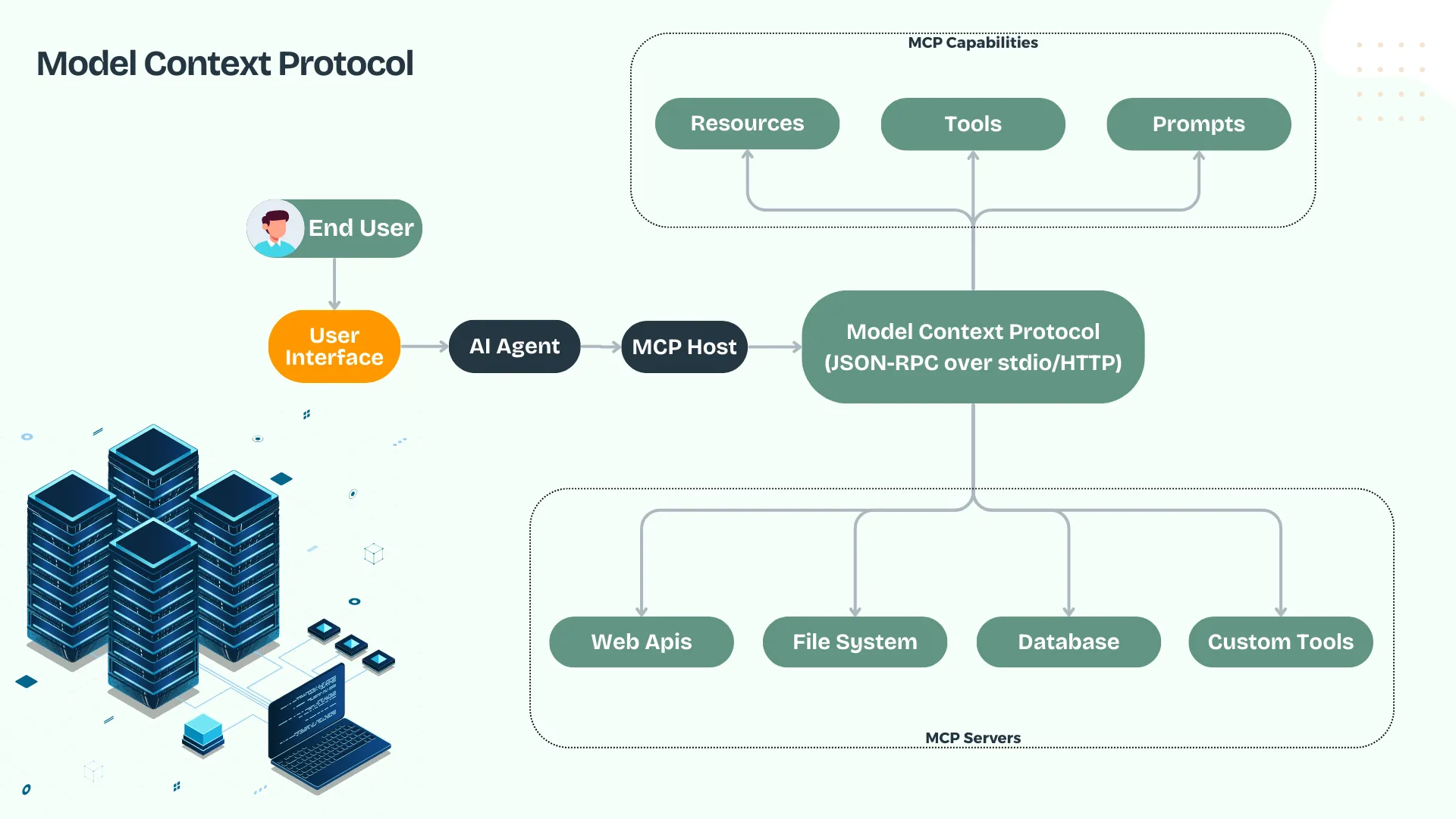

MCP uses a client-server architecture where all tool interactions flow through a central MCP server that acts as an intelligent proxy, session manager, and security gateway.

Real-world analogy: Think of MCP as a sophisticated concierge service. Instead of guests dealing directly with various hotel services, everything goes through the concierge who has relationships with all services, maintains context about your needs, and ensures consistent service quality.

Core Architecture

Discovery: Agent → MCP Server (tools/list)

Communication: Agent → MCP Server → Tool

Session: MCP Server maintains context and state

How MCP Works: Step by Step

Step 1: MCP Server Setup

An MCP server wraps multiple tools behind a unified JSON-RPC interface:

    # Uses the FastMCP helper from the official MCP Python SDK.
    # email_service and db are placeholders for your own clients.
    from mcp.server.fastmcp import FastMCP

    # Server hosts multiple tools
    mcp = FastMCP("business-tools-server")


    @mcp.tool()
    def send_email(to: str, subject: str, body: str, format: str = "text") -> str:
        """Send emails with authentication and formatting"""
        # Tool implementation
        return email_service.send(to, subject, body, format)


    @mcp.tool()
    def query_database(query: str, params: dict | None = None) -> list:
        """Execute SQL queries with proper sanitization"""
        # Tool implementation with security checks
        return db.execute_safe(query, params)


    # A file-upload tool would be registered the same way.

    if __name__ == "__main__":
        mcp.run()

Step 2: Tool Discovery via JSON-RPC

Agents discover tools through standardized RPC calls:

    // Request
    {
      "jsonrpc": "2.0",
      "method": "tools/list",
      "id": "discover-1"
    }

    // Response
    {
      "jsonrpc": "2.0",
      "id": "discover-1",
      "result": {
        "tools": [
          {
            "name": "send_email",
            "description": "Send emails with authentication and formatting",
            "inputSchema": {
              "type": "object",
              "properties": {
                "to": {"type": "string", "description": "Recipient email"},
                "subject": {"type": "string", "description": "Email subject"},
                "body": {"type": "string", "description": "Email content"},
                "format": {"type": "string", "enum": ["text", "html"]}
              },
              "required": ["to", "subject", "body"]
            }
          }
        ]
      }
    }

Step 3: Tool Invocation via JSON-RPC

All tool calls use the same JSON-RPC format:

    // Request
    {
      "jsonrpc": "2.0",
      "method": "tools/call",
      "id": "call-1",
      "params": {
        "name": "send_email",
        "arguments": {
          "to": "team@company.com",
          "subject": "Weekly Sales Report",
          "body": "This week's sales exceeded expectations...",
          "format": "html"
        }
      }
    }

    // Response
    {
      "jsonrpc": "2.0",
      "id": "call-1",
      "result": {
        "content": [
          {
            "type": "text",
            "text": "Email sent successfully to team@company.com"
          }
        ]
      }
    }

Why Use MCP?

- Centralized Control: All tools go through the MCP server

- Consistent Security Model: Authentication, TLS, and RBAC at one layer

- Session Management: Stateful context across multiple calls
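To illustrate the message flow from Steps 2 and 3 without any SDK, here is a minimal in-process dispatcher that answers `tools/list` and `tools/call` requests. It is a sketch of the JSON-RPC routing only, under the assumption of a single stubbed `send_email` tool; it omits initialization, transports, and sessions, and `handle` and `TOOLS` are hypothetical names.

```python
import json
from typing import Any


def send_email(to: str, subject: str, body: str, format: str = "text") -> str:
    """Stub tool: a real server would call an email service here."""
    return f"Email sent successfully to {to}"


TOOLS: dict[str, dict[str, Any]] = {
    "send_email": {
        "description": "Send emails with authentication and formatting",
        "inputSchema": {
            "type": "object",
            "properties": {
                "to": {"type": "string"},
                "subject": {"type": "string"},
                "body": {"type": "string"},
                "format": {"type": "string", "enum": ["text", "html"]},
            },
            "required": ["to", "subject", "body"],
        },
        "handler": send_email,
    },
}


def error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Route one JSON-RPC 2.0 request to tools/list or tools/call."""
    req = json.loads(raw)
    method, req_id = req.get("method"), req.get("id")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(req_id, -32602, "Unknown tool")  # invalid params
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

Because every request passes through `handle`, this is also the natural place to add the authentication checks and per-session state that the centralized model is meant to provide.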

Core Architecture & Design Philosophy

| Feature | UTCP | MCP |
|---|---|---|
| Architecture | Lightweight, stateless tool calling | Heavy client/server with state management |
| Primary Focus | Pure tool calling and execution | Tool calling + resource management + context extension |
| Design Philosophy | Simplicity and minimal protocol | Comprehensive context management system |
| Complexity Level | Low – simplified, minimal protocol | High – complex with additional abstractions |
| State Management | Stateless by design | Server-side state management |
| Protocol Scope | Function execution focused | Full context and resource management |

Technical Capabilities & Protocol Support

| Feature | UTCP | MCP |
|---|---|---|
| Provider Types | HTTP, WebSocket, CLI, gRPC, GraphQL, TCP, UDP, WebRTC, SSE | Primarily stdio and HTTP transports |
| Multi-protocol Support | Native support for 10+ protocols | Limited protocol support |
| Tool Discovery | External registries, automatic OpenAPI conversion | Built-in discovery through MCP servers |
| Legacy API Integration | Automatic OpenAPI conversion for existing APIs | Requires MCP server wrapper development |
| Interoperability | Wide protocol support, OpenAPI conversion | Limited to MCP-specific implementations |
| Error Handling | Standard HTTP/protocol error handling | MCP-specific error protocols |

Authentication & Security

| Feature | UTCP | MCP |
|---|---|---|
| Authentication Methods | API Key, Basic Auth, OAuth2 | OAuth2 with dynamic client registration |
| OAuth Setup Complexity | Automatic token handling, simplified | Complex dynamic client registration |
| Security Model | Standard web security practices | MCP-specific security protocols |
| Token Management | Automatic OAuth2 token refresh | Manual token management required |
| Authentication Ease | Simple configuration in JSON | Requires deep OAuth2 knowledge |
| Multi-auth Support | Multiple methods per provider | Limited to OAuth2 primarily |

Ecosystem & Support

| Feature | UTCP | MCP |
|---|---|---|
| Ecosystem Maturity | New, emerging standard | Established but criticized |
| Corporate Backing | Community-driven, no major sponsor | Anthropic-backed |
| Tool Availability | Growing through OpenAPI conversion | More existing tools and integrations |
| Standard Status | Open community specification | Anthropic-controlled standard |
| Industry Adoption | Early stage adoption | Wider adoption due to Anthropic support |
| Future Development | Community-driven roadmap | Anthropic development priorities |

Performance & Scalability

| Feature | UTCP | MCP |
|---|---|---|
| Scalability Design | Built for hundreds/thousands of tools | Limited scalability, performance concerns |
| Tool Search | Built-in semantic search with TagSearchStrategy | No built-in search capabilities |
| Performance | Optimized for large tool sets | Server lag issues reported by community |
| Resource Usage | Minimal overhead, stateless | Higher overhead due to state management |
| Concurrent Connections | Designed for high concurrency | Limited by server-side state |
| Memory Footprint | Low – no persistent state | Higher due to context management |

Developer Experience & Integration

| Feature | UTCP | MCP |
|---|---|---|
| Learning Curve | Low – familiar web technologies | High – MCP-specific concepts |
| Setup Complexity | Simple JSON configuration, minimal setup | Complex setup, requires specific SDK knowledge |
| Documentation Quality | Clear, example-driven documentation | Poor documentation (community criticism) |
| Integration Ease | Straightforward web-based integration | Requires understanding MCP abstractions |
| Debugging | Standard web debugging tools | MCP-specific debugging required |
| Community Feedback | Praised for simplicity | Criticized for complexity and frustration |

Advanced Features & Capabilities

| Feature | UTCP | MCP |
|---|---|---|
| Bidirectional Communication | Not supported (by design) | Tools can call back to LLMs |
| Resource Management | Not included | Virtual filesystem and resource concepts |
| Context Extension | Basic tool calling only | Rich context and resource management |
| File System Support | Through standard protocols | Built-in virtual filesystem |
| Streaming Support | Native SSE and HTTP streaming | Limited streaming capabilities |
| Real-time Features | WebSocket and WebRTC support | Basic real-time through bidirectional protocol |

Conclusion

Both UTCP and MCP are solving the critical challenge of connecting AI systems to external tools, but they represent different philosophical approaches:

UTCP represents the “direct and simple” philosophy: minimal overhead, maximum performance, and developer-friendly implementation. It’s ideal for teams that want to move fast, keep infrastructure simple, and maintain direct control over their tool integrations.

MCP represents the “controlled and governed” approach: centralized management, enterprise-grade security, and comprehensive audit capabilities. It’s designed for organizations that prioritize compliance, security, and centralized control over raw performance.

The choice isn’t just technical; it’s architectural and philosophical. Consider your team’s priorities, infrastructure preferences, and long-term needs when making this decision.

Need Expert Guidance?

Protocol choice impacts your entire AI architecture. At HyScaler, our AI specialists implement both UTCP and MCP across industries, from rapid startup deployments to enterprise frameworks.

We help: assess requirements, design solutions, implement production systems, train teams.

Ready to accelerate your AI development? Contact our experts for a customized protocol recommendation.