Table of Contents

Llama 3: A New Era in AI Development

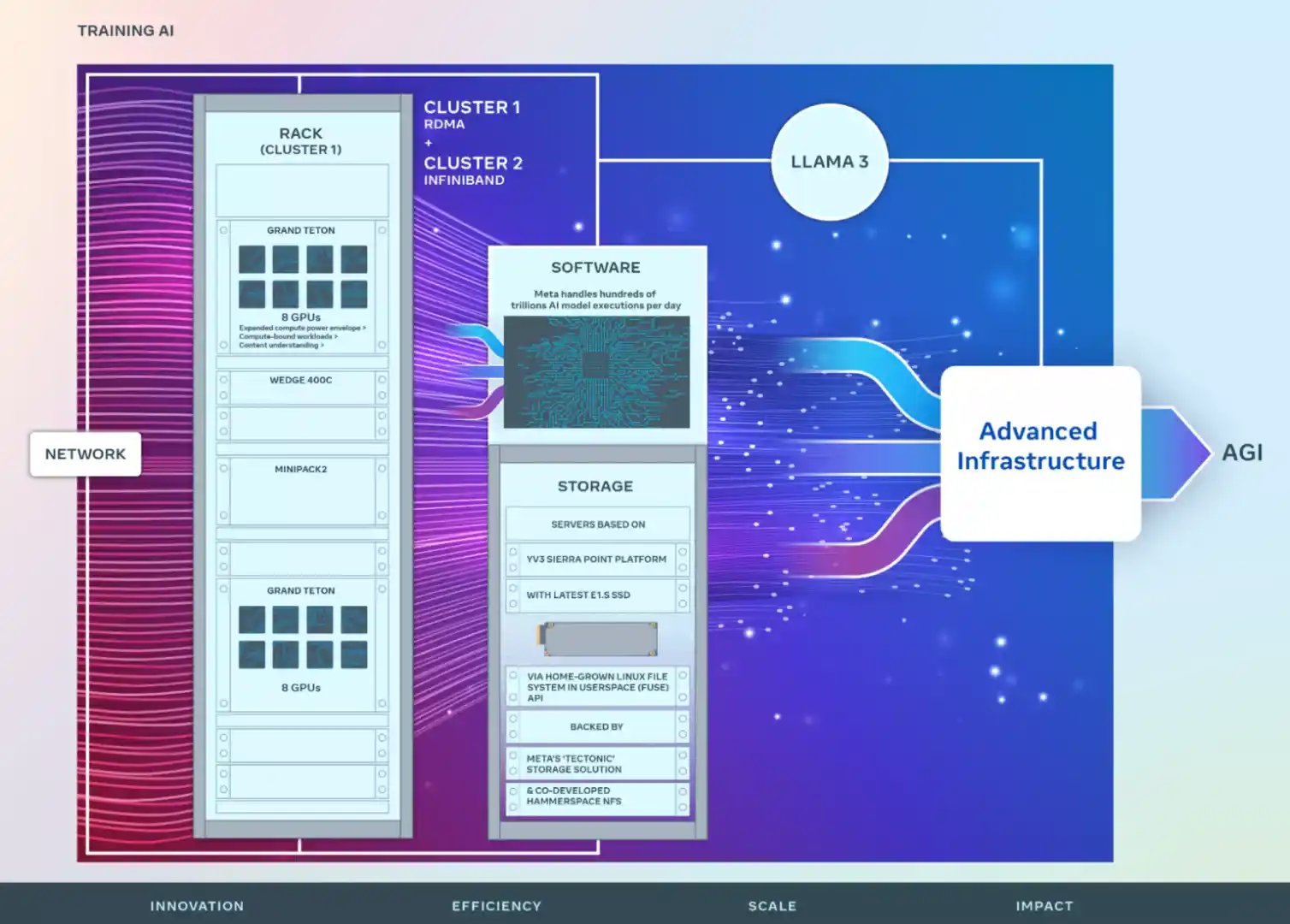

Meta, previously known as Facebook, has recently made headlines with its announcement of the introduction of two 24K GPU clusters. These clusters are a part of Meta’s ambitious plan to train its upcoming large language model, Llama 3. The clusters have been constructed using Grand Teton, Meta’s proprietary open GPU hardware platform, and Open Rack, Meta’s power and rack architecture. Additionally, they have been built on PyTorch, an open-source machine-learning library used for various applications, including computer vision and natural language processing.

The Role of Nvidia H100 GPUs in Llama 3’s Development

Meta has also disclosed its plans to expand its infrastructure and acquire 350,000 Nvidia H100 GPUs by the end of the year. Each of these GPUs costs approximately $40,000, making the total planned expenditure on GPUs a staggering $14 billion. This announcement comes a month after Facebook CEO Mark Zuckerberg posted a Reel on Instagram (also owned by Meta), stating that Meta’s AI roadmap necessitates a “massive compute infrastructure.”

Llama 3: The Centerpiece of Meta’s AI Strategy

Llama 3 is at the heart of Meta’s AI strategy. According to “R” Ray Wang, CEO of Constellation Research, Meta is striving to be at the forefront of AI infrastructure. Unlike other vendors aiming to provide enterprises with computing power, Meta focuses on sharing signals or insights. As Meta continues to develop its computing and software infrastructure, it is also giving away its Llama family of open-source large language models. This is because Meta is betting on organizations utilizing the generative AI technology to power products and services based on insights about consumer behavior shared on the largest social networks, all of which are owned by Meta.

The Role of AI Superclusters in Llama 3’s Development

The introduction of the new AI superclusters is a continuation of Meta’s history of building AI superclusters. In January 2022, Meta introduced its AI Research SuperCluster (RSC), a supercomputer designed to assist AI researchers in developing new and improved AI models. The two GPU clusters are a natural progression in this direction, as they will aid Meta in developing next-generation extreme-scale GenAI models.

Implications for Enterprises and the Challenges Ahead

GPU clusters may not have a direct effect on enterprises, but they hold significant appeal for those interested in leveraging open-source advancements. The cutting-edge developments Meta is pursuing, especially within the Llama portfolio, through the use of its latest AI supercluster, are set to establish a fundamental base. This base will not only foster innovation but will also offer tangible advantages to enterprises. These developments promise to enhance enterprise capabilities by providing access to powerful, scalable, and innovative AI technologies that are built upon an open-source framework.

However, Meta’s commitment to acquiring 350,000 Nvidia H100 GPUs presents several challenges. Not only will Meta require several megawatts of power capacity to power its cluster environments, but there are also potential limitations in terms of power capacity and supply. Furthermore, enterprises must not mistake plans like these from tech giants like Meta as a requirement to create their own GPU farms. For most enterprise-grade generative AI, existing CPU infrastructures can be used to solve small-scale models and enterprise problems.

In conclusion, Llama 3, Meta’s massive GPU clusters, and computers enable it to contribute to a broader open-source ecosystem. This move represents a significant step forward in the evolution of AI infrastructure and has the potential to shape the future of AI.