
Artificial intelligence has completely changed how we work, create, and solve problems.

But there’s a growing concern that threatens to undermine trust in these powerful systems: AI hallucinations.

When AI models confidently present false information as fact, the consequences can range from mildly embarrassing to professionally catastrophic.

Several real-world cases are covered below.

Understanding AI Hallucinations

AI hallucinations occur when artificial intelligence systems generate information that sounds plausible but is entirely fabricated or incorrect.

Unlike human hallucinations, AI hallucinations stem from the way language models are trained: they predict responses from patterns in the data they were fed, rather than from a true understanding.

Think of it this way: AI doesn’t “know” facts the way humans do.

Instead, it recognizes patterns in vast amounts of text data and predicts what words should come next.

Sometimes, these predictions lead to convincing-sounding nonsense.

Real-World Cases That Made Headlines

As mentioned, here are some real-world cases of AI hallucinations that made headlines, along with their consequences for the companies and people involved.

The Lawyer Who Relied on ChatGPT

In 2023, New York lawyer Steven Schwartz faced sanctions after submitting legal briefs containing fake case citations generated by ChatGPT.

The AI had invented non-existent court cases complete with realistic-sounding names, citations, and judicial opinions.

The judge was not amused, and the case became a cautionary tale about blindly trusting AI-generated content.

Google’s Bard Mishap

During Google’s launch demonstration of Bard, its AI chatbot confidently stated that the James Webb Space Telescope took the first pictures of a planet outside our solar system.

This was incorrect; the first image of an exoplanet was captured in 2004 by the European Southern Observatory’s Very Large Telescope, well before Webb launched.

The error wiped roughly $100 billion off the market value of Google’s parent company, Alphabet, in a single day, demonstrating the high stakes of AI hallucinations.

Air Canada’s Costly Chatbot

Air Canada’s chatbot hallucinated a bereavement fare policy that didn’t exist, promising a customer a discount.

When the airline refused to honor it, the customer took the case to British Columbia’s Civil Resolution Tribunal, and in 2024 Air Canada lost.

The tribunal held the company responsible for the false information its chatbot provided, a ruling widely cited as an early precedent for AI accountability.

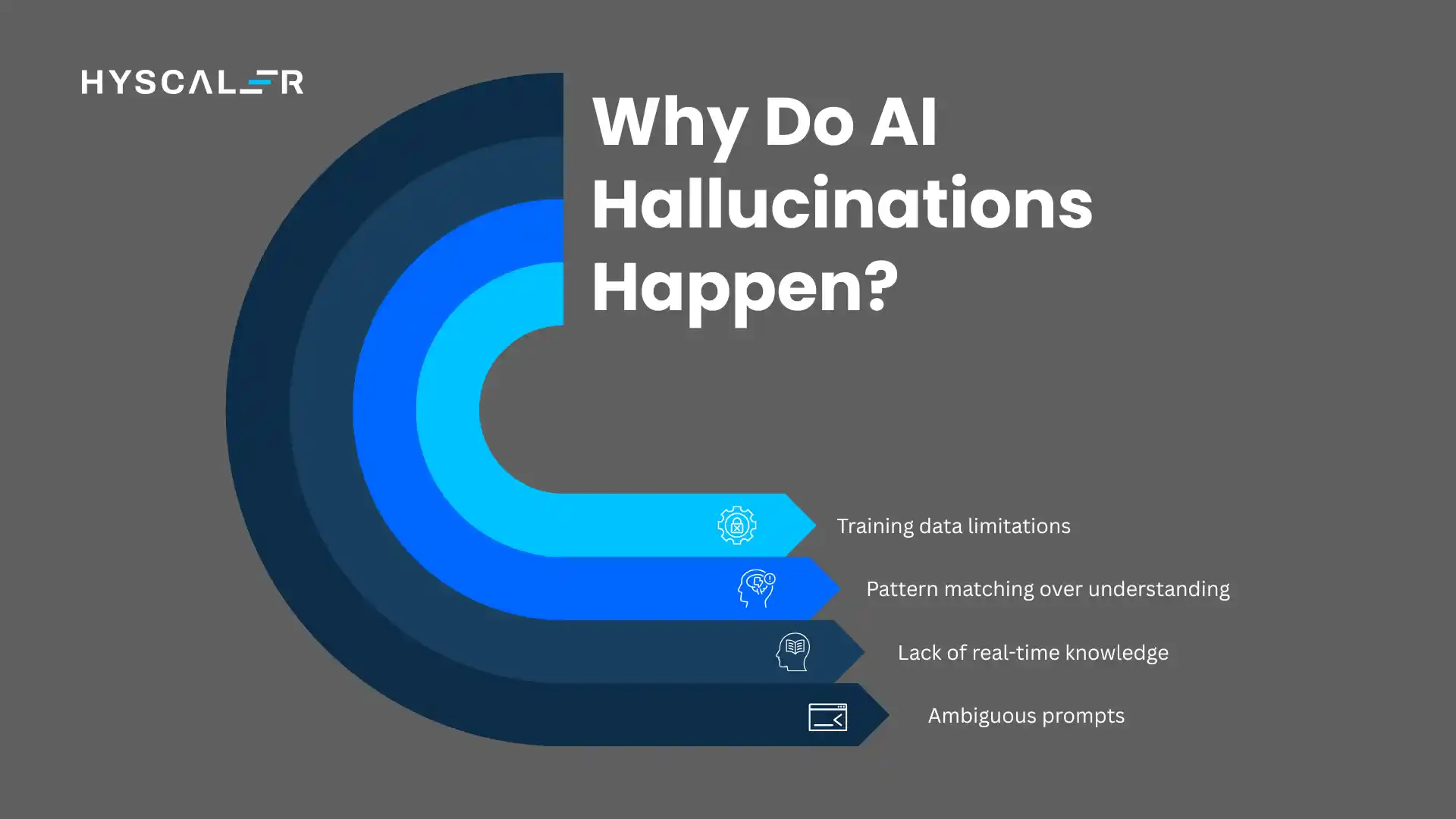

Why Do AI Hallucinations Happen?

Several factors contribute to AI hallucinations:

- Training data limitations mean AI models can only work with information they’ve seen before. When faced with questions outside their training scope, they may fabricate answers rather than admit uncertainty.

- Pattern matching over understanding is at the heart of how large language models work. They excel at recognizing linguistic patterns but lack true comprehension, leading to confident-sounding errors.

- Lack of real-time knowledge means most AI models operate on static training data. They can’t verify facts against current information unless specifically designed to search external sources.

- Ambiguous prompts can confuse AI systems, causing them to fill gaps with invented details rather than asking for clarification.

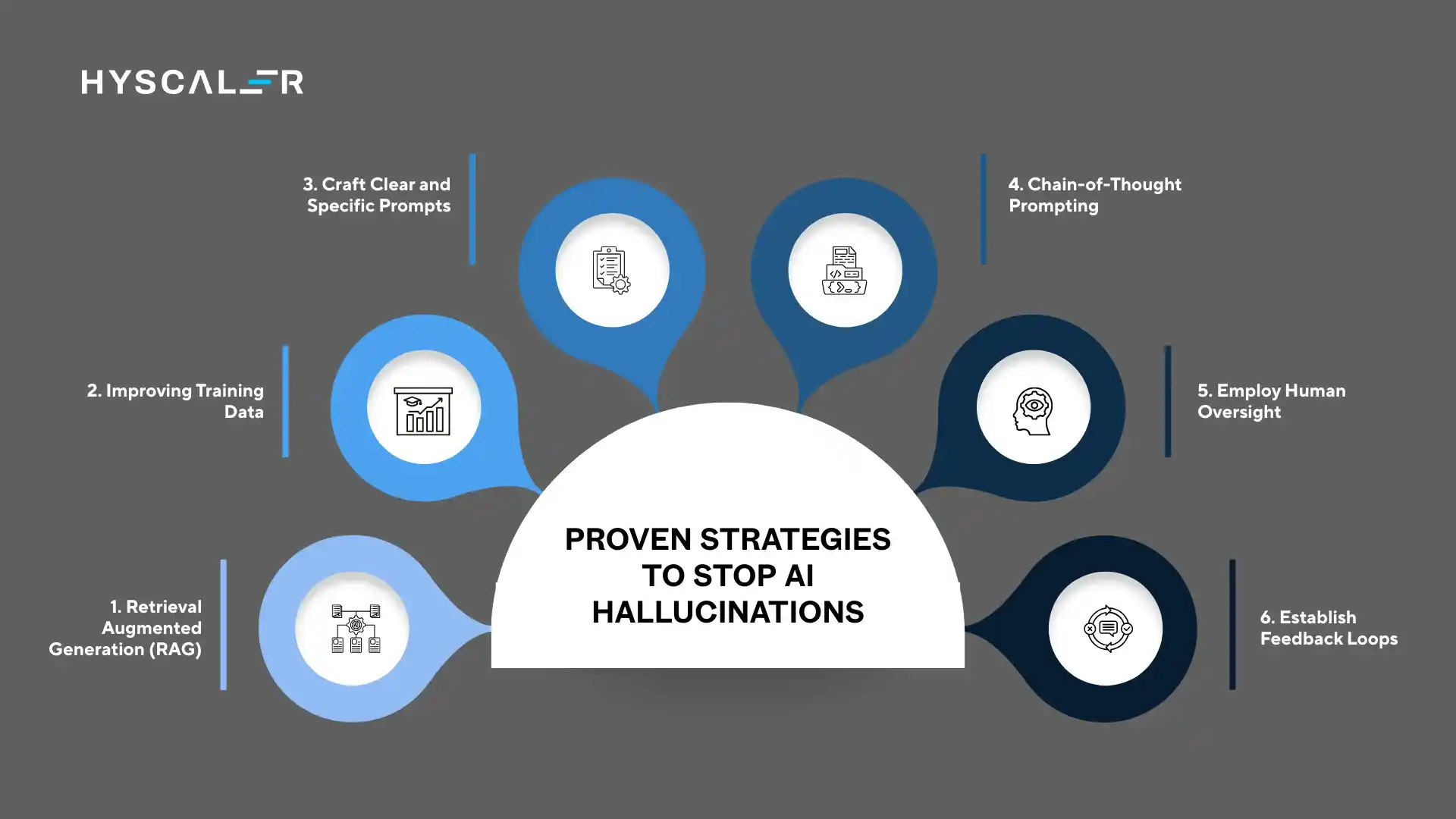

Proven Strategies to Stop AI Hallucinations

1. Retrieval Augmented Generation (RAG)

RAG represents one of the most effective architectural solutions for reducing AI hallucinations.

This approach combines the language generation capabilities of AI with real-time information retrieval from verified databases and knowledge bases.

Instead of relying solely on training data, RAG systems search through trusted external sources before generating responses, grounding answers in factual, current information.

This sharply reduces the likelihood of fabricated content and helps keep responses relevant and accurate.
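The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not a production RAG stack: the knowledge base is a hypothetical hard-coded dict, and simple word overlap stands in for the embedding search a real system would use. The function builds the grounded prompt; sending it to a model is left out.

```python
import re

# Hypothetical knowledge base; a real system would query a vetted document
# store or vector database instead of a hard-coded dict.
KNOWLEDGE_BASE = {
    "refunds": "Refund requests must be submitted within 30 days of purchase.",
    "shipping": "Standard shipping takes 5 to 7 business days.",
    "warranty": "Hardware is covered by a one-year limited warranty.",
}


def tokenize(text: str) -> set:
    """Lowercased word set, used for a crude keyword-overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2) -> list:
    """Return up to k (doc_id, text) pairs ranked by words shared with the question."""
    query = tokenize(question)
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = len(query & tokenize(text))
        if overlap:
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_grounded_prompt(question: str) -> str:
    """Put retrieved passages before the question and forbid going beyond them."""
    docs = retrieve(question)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in docs)
    return (
        "Answer using ONLY the context below and cite the [id] you relied on. "
        "If the context does not contain the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context or '(no relevant documents found)'}\n\n"
        f"Question: {question}"
    )
```

The explicit "I don't know" escape hatch matters as much as the retrieval: it gives the model a sanctioned alternative to inventing an answer when nothing relevant is found.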

2. Improving Training Data

The quality of an AI model’s output directly correlates with the quality of its training data.

Organizations should focus on curating high-quality, diverse, and fact-checked datasets that represent accurate information.

This includes removing contradictory information, correcting errors in training materials, and ensuring balanced representation across different topics.

Regular audits of training data help identify and eliminate sources of potential hallucinations before they manifest in the model’s responses.
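One small piece of such an audit, removing contradictory information, can be sketched for question/answer data. This assumes a hypothetical dataset of `(question, answer)` pairs and uses deliberately crude normalization (case and surrounding whitespace only); conflicting entries are set aside for a human to resolve rather than guessed at.

```python
from collections import defaultdict


def audit_qa_pairs(pairs):
    """Split (question, answer) pairs into consistent examples and conflicts.

    Questions are normalized (lowercased, outer whitespace stripped) so trivial
    duplicates collapse into one. A question seen with more than one distinct
    answer is contradictory and is held back for human review rather than
    silently kept.
    """
    answers = defaultdict(set)
    for question, answer in pairs:
        answers[question.strip().lower()].add(answer.strip())

    clean, conflicts = [], {}
    for question, found in answers.items():
        if len(found) == 1:
            clean.append((question, next(iter(found))))
        else:
            conflicts[question] = sorted(found)
    return clean, conflicts
```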

3. Craft Clear and Specific Prompts

The precision of your input has a significant impact on the accuracy of the AI output.

Vague or ambiguous prompts invite hallucinations because the model must make assumptions to fill information gaps.

Instead of asking “Tell me about the president,” ask “What were the major policy achievements of President Franklin D. Roosevelt’s first term, from 1933 to 1937?”

Include relevant context, timeframes, and the specific details you’re seeking.

The more targeted and precise your prompt, the less room there is for the AI to wander into fabrication.

4. Chain-of-Thought Prompting

This advanced technique asks the AI to break down its reasoning step by step before reaching a conclusion.

By prompting the model to “think through this step by step” or “explain your reasoning,” you create a transparent path of logic that’s easier to verify.

Chain-of-thought prompting not only improves accuracy but also helps identify where the AI’s reasoning might go astray, making hallucinations more visible and easier to catch.
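A minimal sketch of the idea: wrap the question in step-by-step instructions with a fixed output shape, then split the reply into its reasoning steps and final answer so each step can be checked. The "Step N:" and "Final answer:" markers are an assumed convention, not a standard; the model call itself is omitted.

```python
from typing import List, Optional, Tuple

COT_SUFFIX = (
    "\n\nThink through this step by step. Put each step on its own line, "
    "starting with \"Step 1:\", \"Step 2:\", and so on. End with a single line "
    "that starts with \"Final answer:\"."
)


def build_cot_prompt(question: str) -> str:
    """Append chain-of-thought instructions to a question."""
    return question + COT_SUFFIX


def parse_cot_response(text: str) -> Tuple[List[str], Optional[str]]:
    """Separate the numbered reasoning steps from the final answer.

    A missing final answer (None) is itself worth flagging for review.
    """
    steps, final = [], None
    for raw in text.splitlines():
        line = raw.strip()
        if line.lower().startswith("step "):
            steps.append(line)
        elif line.lower().startswith("final answer:"):
            final = line.split(":", 1)[1].strip()
    return steps, final
```

With the steps separated, a reviewer (or a simple calculator check for arithmetic) can pinpoint which step went wrong instead of judging only the final answer.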

5. Employ Human Oversight

No AI system should operate without qualified human supervision, especially in critical applications.

Human experts bring contextual understanding, domain knowledge, and judgment that AI cannot replicate.

They can spot subtle inaccuracies, recognize when something sounds plausible but contradicts known facts, and provide the final quality check before AI-generated content reaches end users.

This is non-negotiable in fields like healthcare, law, finance, and journalism, where the cost of an error is highest.
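In code, human oversight often takes the form of a review gate. The sketch below assumes each AI draft is tagged with a domain label (how that label is assigned is out of scope) and holds anything in the high-stakes domains named above until a named reviewer signs off. `ReviewQueue` is a hypothetical class for illustration.

```python
from dataclasses import dataclass, field

HIGH_STAKES_DOMAINS = {"healthcare", "law", "finance", "journalism"}


@dataclass
class ReviewQueue:
    pending: list = field(default_factory=list)    # (domain, text) awaiting review
    published: list = field(default_factory=list)  # texts released to users

    def submit(self, text: str, domain: str) -> str:
        """Hold high-stakes drafts for a human; release the rest."""
        if domain.lower() in HIGH_STAKES_DOMAINS:
            self.pending.append((domain, text))
            return "pending_review"
        self.published.append(text)
        return "published"

    def approve(self, index: int, reviewer: str) -> str:
        """Record the reviewer's sign-off and release the draft."""
        domain, text = self.pending.pop(index)
        self.published.append(text)
        return f"approved by {reviewer} ({domain})"
```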

6. Establish Feedback Loops

Creating systematic channels for users to report inaccuracies transforms your AI deployment into a continuously improving system.

When users flag hallucinations, this data should feed directly back into your refinement process.

Analyze patterns in reported errors to identify systematic problems, use this feedback to retrain models, and adjust prompts or system parameters accordingly.

Feedback loops turn every user interaction into an opportunity for improvement, steadily reducing AI hallucinations.
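The "analyze patterns" step can start as simply as counting report categories. This sketch assumes a hypothetical report record with a response ID and a user-chosen category; each (response, category) pair is counted once so a single heavily flagged answer does not dominate the ranking.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class HallucinationReport:
    response_id: str
    category: str  # hypothetical labels, e.g. "fake citation", "invented policy"
    note: str = ""


def top_error_categories(reports, top_n=3):
    """Count each (response, category) pair once, then rank categories.

    De-duplicating stops one response that many users flag from drowning
    out a pattern that shows up across many different responses.
    """
    unique = {(r.response_id, r.category) for r in reports}
    return Counter(category for _, category in unique).most_common(top_n)
```

The top categories then point to where prompts, knowledge bases, or training data need attention first.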

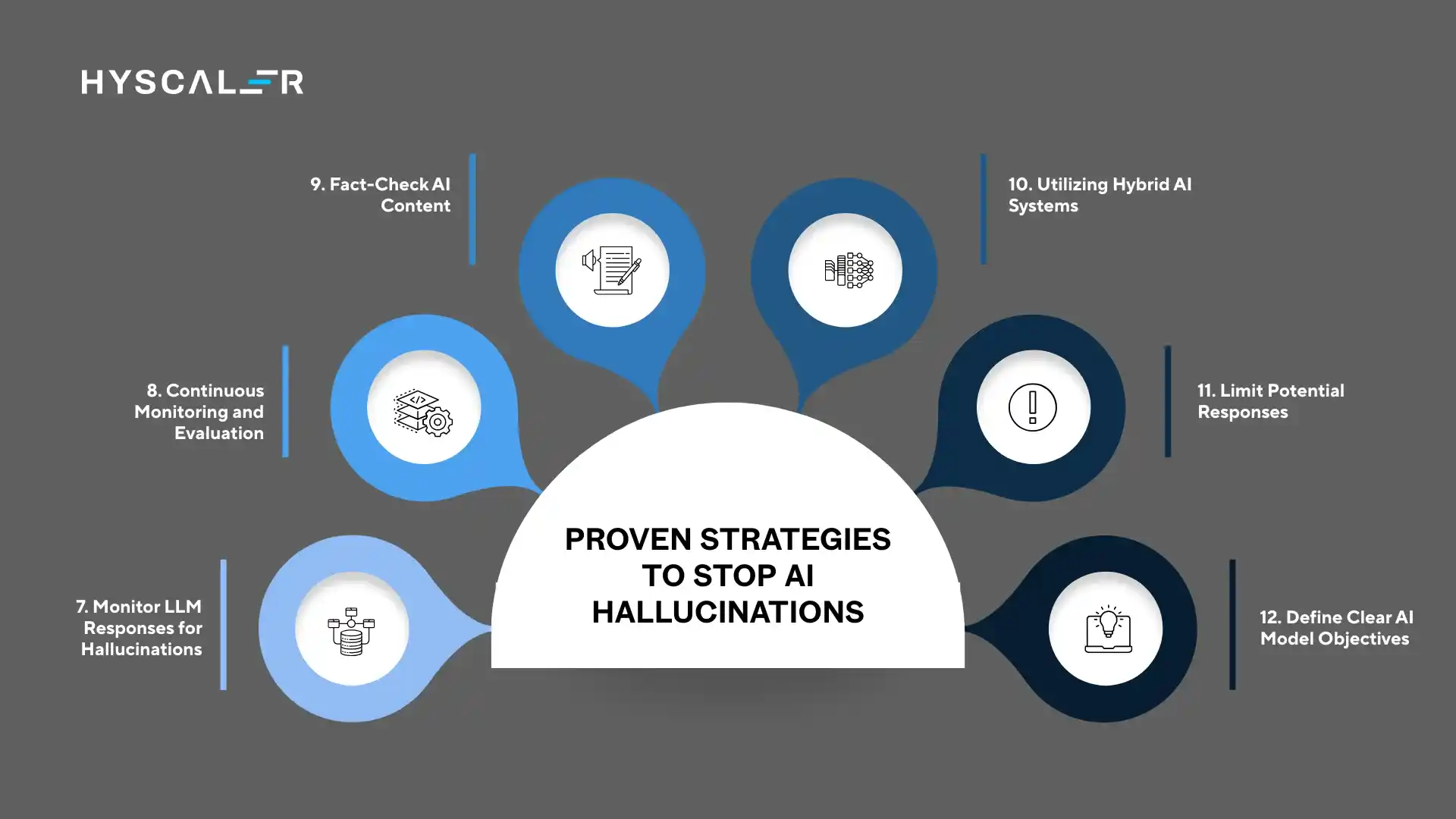

7. Monitor LLM Responses for Hallucinations

Implement automated monitoring systems that continuously scan AI outputs for common hallucination patterns.

These systems can flag responses that lack source attribution, contain suspiciously specific details that seem fabricated, or contradict known information in your knowledge base.

Real-time monitoring allows you to catch problematic outputs before they reach users and provides valuable data for ongoing system refinement.
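Two of the checks described above, missing attribution and suspiciously specific details, can be approximated with simple pattern matching. This sketch assumes responses cite sources with bracketed markers like `[1]` and treats any number that never appears in the retrieved sources as unsupported; both are assumed conventions, and real monitors are usually more sophisticated.

```python
import re

CITATION = re.compile(r"\[\d+\]")        # source markers such as "[1]"
NUMBER = re.compile(r"\d+(?:\.\d+)?%?")  # integers, decimals, percentages


def monitor_response(response: str, sources: list) -> list:
    """Return warnings for a response; an empty list means nothing was flagged."""
    warnings = []
    if not CITATION.search(response):
        warnings.append("no source attribution")
    # Ignore the citation markers themselves when looking for figures.
    body = CITATION.sub("", response)
    source_numbers = set(NUMBER.findall(" ".join(sources)))
    for figure in NUMBER.findall(body):
        if figure not in source_numbers:
            warnings.append(f"unsupported figure: {figure}")
    return warnings
```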

8. Continuous Monitoring and Evaluation

Beyond catching individual hallucinations, establish comprehensive evaluation frameworks that regularly test your AI system’s performance across diverse scenarios.

Create benchmark datasets with known correct answers, measure accuracy rates over time, and track hallucination frequency by category.

This ongoing assessment helps you understand whether changes to your system actually improve performance and where vulnerabilities persist.
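A benchmark run can be sketched as a loop over questions with known answers, reporting the error rate per category. The benchmark items are hypothetical, the model is any function from question to answer, and exact string matching is a strict stand-in for the fuzzier grading real evaluations need.

```python
from collections import defaultdict
from typing import Callable, Dict

# Hypothetical benchmark: (category, question, known correct answer).
BENCHMARK = [
    ("history", "In what year did Apollo 11 land on the Moon?", "1969"),
    ("history", "Who was the first U.S. president?", "George Washington"),
    ("science", "What is the chemical symbol for gold?", "Au"),
]


def evaluate(model: Callable[[str], str]) -> Dict[str, float]:
    """Return the share of wrong answers per category (a proxy for hallucination rate)."""
    totals, wrong = defaultdict(int), defaultdict(int)
    for category, question, expected in BENCHMARK:
        totals[category] += 1
        if model(question).strip().lower() != expected.lower():
            wrong[category] += 1
    return {category: wrong[category] / totals[category] for category in totals}
```

Storing each run's results alongside the model or prompt version makes it possible to tell whether a change actually helped.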

9. Fact-Check AI Content

Never publish or act on AI-generated information without rigorous fact-checking.

Cross-reference claims against authoritative sources, verify statistics and quotes, and confirm that cited references actually exist and say what the AI claims they say.

This is especially critical for factual claims, statistics, quotations, legal or medical information, and historical events.

Think of AI as a research assistant, not a fact authority.
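Part of this checking can be automated for quotations. The sketch below assumes you have the full text of the source the AI cites and flags any double-quoted passage in the AI output that does not appear verbatim in that source. It only catches misquotes; it cannot tell whether a quote is used in the right context.

```python
import re


def _normalize(text: str) -> str:
    """Straighten curly double quotes and collapse runs of whitespace."""
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return " ".join(text.split())


def unverified_quotes(ai_text: str, source_text: str) -> list:
    """Return quotations in ai_text that do not appear verbatim in source_text."""
    source = _normalize(source_text)
    quotes = re.findall(r'"([^"]+)"', _normalize(ai_text))
    return [quote for quote in quotes if quote not in source]
```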

10. Utilizing Hybrid AI Systems

Hybrid systems combine multiple AI technologies to leverage their complementary strengths while compensating for individual weaknesses.

For example, pairing a large language model with a knowledge graph creates a system where structured factual data constrains and verifies free-form text generation.

Similarly, combining rule-based systems with neural networks lets hard rules around factual accuracy override the statistical predictions that might lead to hallucinations.
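Here is a toy version of a knowledge graph constraining generated text. It assumes claims have already been extracted from the model's output as (subject, relation, object) triples, a separate step not shown, and uses a tiny hypothetical graph. The rule-based part is explicit: whenever the graph and the model disagree, the graph wins.

```python
# Hypothetical knowledge graph stored as (subject, relation) -> object.
KNOWLEDGE_GRAPH = {
    ("James Webb Space Telescope", "launch_year"): "2021",
    ("2M1207b", "first_imaged_by"): "Very Large Telescope",
}


def check_claim(subject: str, relation: str, obj: str) -> str:
    """Classify one extracted claim against the graph."""
    known = KNOWLEDGE_GRAPH.get((subject, relation))
    if known is None:
        return "unverified"  # the graph is silent; route to other checks
    return "supported" if known == obj else "contradicted"


def enforce(claims):
    """Apply the hard rule: replace any contradicted object with the graph's value."""
    result = []
    for subject, relation, obj in claims:
        verdict = check_claim(subject, relation, obj)
        if verdict == "contradicted":
            obj = KNOWLEDGE_GRAPH[(subject, relation)]
        result.append((subject, relation, obj, verdict))
    return result
```

Applied to the Bard example, a claim that Webb first imaged an exoplanet would be marked "contradicted" and corrected, while claims the graph knows nothing about are flagged rather than trusted.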

11. Limit Potential Responses

Constraining the AI’s output space dramatically reduces hallucination opportunities.

For customer service applications, limit responses to pre-approved templates or verified knowledge base articles.

For data extraction tasks, restrict outputs to specific formats or predetermined categories.

When asking AI to make recommendations, provide it with a defined list of valid options rather than allowing open-ended generation. These boundaries act as guardrails that prevent the model from fabricating information.
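For a customer service bot, one way to apply this is to let the model choose only a label and never write the reply itself. The labels and reply texts below are hypothetical placeholders for pre-approved knowledge base content; anything the model returns that is not on the list falls back to a human handoff.

```python
# Hypothetical pre-approved replies; in practice these would be reviewed
# knowledge-base articles. The model only chooses a key, never the wording.
APPROVED_REPLIES = {
    "billing": "Invoices and payment options are described in our billing policy article.",
    "shipping": "Standard shipping times are listed in our shipping policy article.",
    "returns": "Return eligibility is explained in our returns policy article.",
}
FALLBACK_REPLY = "I'm not able to answer that. Let me connect you with a member of our team."


def build_classifier_prompt(message: str) -> str:
    """Ask the model for exactly one label from a closed list."""
    options = ", ".join(sorted(APPROVED_REPLIES))
    return (
        f"Classify the customer message into exactly one of: {options}, other. "
        f"Reply with the label only.\n\nMessage: {message}"
    )


def reply_for(model_label: str) -> str:
    """Map the model's label to approved text; anything off-list falls back."""
    label = model_label.strip().lower().rstrip(".")
    return APPROVED_REPLIES.get(label, FALLBACK_REPLY)
```

Under this design, a chatbot cannot invent a policy like the one in the Air Canada case: if the model produces free text instead of a valid label, the customer gets the handoff message.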

12. Define Clear AI Model Objectives

Vagueness about what you’re asking an AI system to accomplish creates space for hallucinations.

Before deployment, explicitly define what success looks like: What questions should the AI answer?

What types of information should it provide?

What should it explicitly refuse to do?

Clear objectives allow you to design prompts, select training data, and structure outputs in ways that align with these goals, reducing the likelihood of off-target responses that require fabrication.

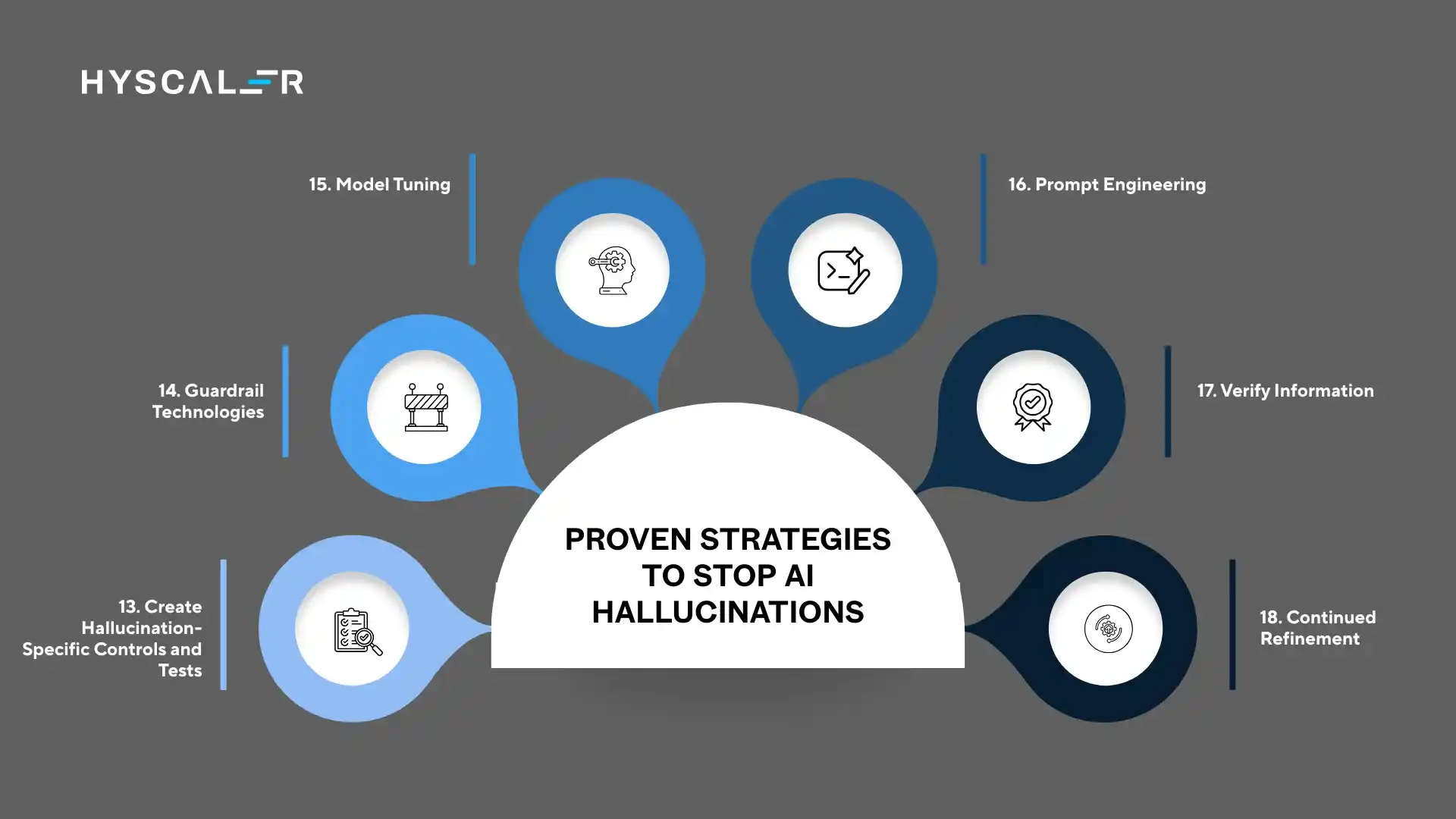

13. Create Hallucination-Specific Controls and Tests

Develop targeted testing protocols designed specifically to identify hallucination vulnerabilities.

This includes adversarial testing, where you deliberately ask questions designed to trigger hallucinations, edge case testing with unusual or ambiguous queries, and consistency testing, where you ask the same question multiple ways to see if responses contradict each other.

Document these tests and run them regularly, especially after model updates or system changes.
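Consistency testing is straightforward to automate. In this sketch, `model` is any function from question to answer (a stub in the usage below), and answers are compared after trimming and lowercasing only. A real harness would need smarter matching, since "2004" and "in 2004" should count as agreement.

```python
from collections import Counter


def consistency_check(model, paraphrases):
    """Ask one question several ways; report whether all answers agree.

    Returns (consistent, answer_counts) so disagreements can be inspected.
    """
    answers = Counter(model(p).strip().lower() for p in paraphrases)
    return len(answers) == 1, answers
```

For example, with canned answers standing in for a model:

```python
canned = {
    "When was the first exoplanet imaged?": "2004",
    "In what year was a planet outside the solar system first photographed?": "2004",
    "What year did anyone first capture an image of an exoplanet?": "2022",
}
consistent, counts = consistency_check(canned.__getitem__, list(canned))
# consistent is False: one phrasing produced a different year.
```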

14. Guardrail Technologies

Implement technical guardrails that act as safety nets around your AI system.

These include confidence threshold filters that flag or block responses when the model shows low certainty, semantic consistency checkers that compare new outputs against established knowledge bases, and content filters that prevent the AI from generating information in high-risk domains where it lacks expertise.

Guardrails create multiple layers of protection between potential AI hallucinations and end users.
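A confidence threshold filter can be sketched from per-token log-probabilities, which many model APIs can return (check whether yours does). The sketch converts them to a geometric-mean token probability and blocks responses below an assumed threshold of 0.7. Token probability is only a rough proxy: a model can be fluent and confident while still being wrong, so this works as one layer among several.

```python
import math


def mean_token_probability(logprobs: list) -> float:
    """Geometric mean of token probabilities, given natural-log probabilities."""
    if not logprobs:
        return 0.0
    return math.exp(sum(logprobs) / len(logprobs))


def confidence_gate(text: str, logprobs: list, threshold: float = 0.7) -> dict:
    """Release the text only if average token confidence clears the threshold."""
    score = mean_token_probability(logprobs)
    blocked = score < threshold
    return {"text": None if blocked else text, "score": score, "blocked": blocked}
```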

15. Model Tuning

Fine-tuning pre-trained models on domain-specific, high-quality datasets significantly improves accuracy and reduces hallucinations in specialized applications.

Rather than relying on a general-purpose model’s broad but shallow knowledge, tuning creates an AI that deeply understands your particular domain.

This process involves training the model on verified examples from your field, teaching it the specific terminology, relationships, and facts relevant to your use case while maintaining its general language capabilities.
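Preparing the verified examples is often the most labor-intensive part of tuning. The sketch below writes them as chat-style JSON Lines (`{"messages": [...]}`), a layout several hosted fine-tuning services accept. The exact schema is an assumption, so check your provider's documentation. The examples and the `reviewed_by` field are hypothetical, and unreviewed examples are skipped.

```python
import json

# Hypothetical domain examples; "reviewed_by" marks ones a subject-matter
# expert has verified.
EXAMPLES = [
    {"question": "What is our standard refund window?",
     "answer": "30 days from the date of purchase.", "reviewed_by": "policy team"},
    {"question": "Are gift cards refundable?",
     "answer": "Yes.", "reviewed_by": None},
]


def to_chat_jsonl(examples, system_prompt):
    """Serialize verified examples as one JSON object per line."""
    lines = []
    for ex in examples:
        if not ex.get("reviewed_by"):
            continue  # unverified examples are skipped, not trained on
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": ex["question"]},
            {"role": "assistant", "content": ex["answer"]},
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)
```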

16. Prompt Engineering

The art and science of prompt engineering goes beyond simply writing clear questions.

It involves understanding how different prompt structures, examples, and constraints affect model behavior.

Techniques include providing few-shot examples showing the desired output format, explicitly instructing the model to cite sources, using negative prompts that tell the AI what not to do, and structuring prompts with clear sections for context, task, and constraints.

Sophisticated prompt engineering can dramatically reduce hallucination rates without changing the underlying model.
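The techniques listed above can be combined in one prompt template. This is an illustrative sketch with assumed section names and wording; it shows a prompt split into context, task, constraints, and few-shot examples, with explicit instructions to cite sources and clear statements of what not to do.

```python
def build_prompt(context: str, task: str, examples: list) -> str:
    """Assemble a sectioned prompt: context, task, constraints, few-shot examples."""
    constraints = "\n".join(f"- {rule}" for rule in (
        "Cite the source document ID for every factual claim, e.g. [doc-1].",
        "Do not invent names, dates, statistics, or citations.",
        "If the context is insufficient, answer exactly: I don't know.",
    ))
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return (
        f"## Context\n{context}\n\n"
        f"## Task\n{task}\n\n"
        f"## Constraints\n{constraints}\n\n"
        f"## Examples\n{shots}"
    )
```

Keeping the constraints in one fixed block makes it easy to test prompt variants against each other without accidentally dropping a safeguard.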

17. Verify Information

Make verification a standard operating procedure, not an afterthought.

Develop checklists specific to your use case: For legal applications, verify case citations actually exist and are accurately described.

For medical information, confirm recommendations align with current clinical guidelines.

For historical claims, check against multiple authoritative sources.

Create workflows that require verification as a step before any AI output proceeds, and document your verification process for accountability.
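For the legal checklist item, a first automated pass might extract reporter citations from a draft and check each against a trusted source. Here the regular expression covers only a few common U.S. reporter formats, and the `known_citations` set is a hypothetical stand-in for a lookup in a legal research database. A citation that is found still needs a human to confirm it says what the draft claims.

```python
import re

# A few common U.S. reporter formats, e.g. "550 U.S. 544" or "123 F.3d 456".
# Real citation formats are far more varied than this pattern covers.
REPORTER_CITATION = re.compile(r"\b(\d{1,4}) (U\.S\.|F\.(?:2d|3d|4th)|S\. Ct\.) (\d{1,5})\b")


def verify_citations(brief: str, known_citations: set) -> dict:
    """Map each citation found in the brief to whether it exists in the trusted set."""
    results = {}
    for match in REPORTER_CITATION.finditer(brief):
        citation = " ".join(match.groups())
        results[citation] = citation in known_citations
    return results
```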

18. Continued Refinement

Stopping AI hallucinations isn’t a one-time fix but an ongoing commitment to improvement. Regularly review hallucination incidents, analyze what went wrong, and implement systemic fixes.

Update your prompts based on what works, expand your knowledge bases to cover gaps where hallucinations occur, and retrain models with better data when patterns emerge.

The most successful AI deployments treat refinement as a continuous cycle of testing, learning, and improving.

Building a Comprehensive Anti-Hallucination Strategy

The most effective approach combines multiple strategies from this guide.

Start with architectural solutions like RAG and hybrid systems that fundamentally reduce hallucination potential.

Layer on operational practices like human oversight and fact-checking.

Support everything with continuous monitoring, feedback loops, and refinement processes.

Remember that different applications require different emphasis.

A customer service chatbot might prioritize limiting responses and guardrail technologies, while a research assistant needs stronger emphasis on retrieval augmentation and citation verification.

Tailor your anti-hallucination strategy to your specific use case and risk tolerance.

Conclusion

AI hallucinations represent a significant but manageable challenge.

The combination of better architecture, rigorous processes, and continuous improvement creates systems that are remarkably useful while remaining trustworthy.

Organizations that invest in these safeguards don’t just protect themselves from costly errors; they build competitive advantages through AI systems that users can actually rely on.

- The lawyer who trusted ChatGPT learned this lesson the hard way.

- Air Canada paid the price for its chatbot’s fabrications.

- Google’s stock suffered from Bard’s error.

These cautionary tales remind us that AI hallucinations have real consequences, but they also show us exactly what to avoid.

By implementing these eighteen strategies, you create multiple lines of defense against AI hallucinations.

No single approach is perfect, but together they form a robust framework for trustworthy AI deployment.

The future belongs to those who can harness AI’s power while maintaining the human judgment, verification, and oversight that keep these systems honest.

Stay vigilant, verify everything important, and never let impressive-sounding AI output replace critical thinking.

That’s how we move forward together, humans and AI, each contributing what they do best.

To get help implementing these safeguards, contact HyScaler.

What Are the Three Types of Auditory Hallucinations?

While we’re discussing hallucinations, it’s worth understanding the human experience, which differs fundamentally from AI hallucinations.

In psychology, auditory hallucinations (hearing sounds or voices that aren’t present) are classified into three main types:

1. Elementary hallucinations involve simple sounds like ringing, buzzing, humming, or whistling.

These are non-verbal acoustic experiences that seem to come from outside the person’s head.

2. Complex hallucinations involve hearing voices, music, or other elaborate sounds.

These are the type most commonly portrayed in media and can range from hearing your name called to full conversations.

3. Functional hallucinations are triggered by real external sounds but involve hearing something different from the actual stimulus.

For example, a person might hear voices only while a fan is running, with the fan’s noise triggering the hallucinatory experience.

Understanding human hallucinations highlights an important distinction: humans experiencing hallucinations often don’t realize they’re false, while AI systems don’t “realize” anything at all; they’re simply generating plausible-sounding text without any awareness of truth or falsehood.

How often do AI hallucinations occur?

Estimates vary widely by model, task, and how a hallucination is defined. Some put the rate at 30% to 60% of responses, with math calculations among the most error-prone areas.

For narrower, well-grounded tasks, estimates fall to between 0% and 20%.