Introduction

Machine learning keeps expanding in 2024, and beginners have more ways into the field than ever. One of the most important early steps is understanding the main algorithms and knowing when to choose each one. This blog walks through five machine learning algorithms that suit beginners, helping you start your journey on the right foot.

What is a Machine Learning Algorithm?

A machine learning algorithm is a method that allows computers to learn from and make predictions based on data. These algorithms analyze input data, find patterns, and use these patterns to make decisions or predictions on new data. They are the backbone of machine learning, enabling systems to improve and adapt without being explicitly programmed for each task.

Types of Machine Learning Algorithms

Machine learning algorithms can be categorized into four primary types: supervised, unsupervised, semi-supervised, and reinforcement learning.

Supervised Learning Algorithms

Supervised learning algorithms are trained using labeled data, where the input data is paired with the correct output. The goal is to learn a mapping from inputs to outputs, enabling the algorithm to predict outputs for new, unseen inputs. Common supervised learning algorithms include:

- Decision Trees

- Support Vector Machines (SVM)

- Random Forests

- Naive Bayes

Unsupervised Learning Algorithms

Unsupervised learning algorithms work with unlabeled data, aiming to discover hidden patterns or intrinsic structures. These algorithms are often used for clustering and dimensionality reduction. Popular techniques include:

- K-means Clustering

- Hierarchical Clustering

- Principal Component Analysis (PCA)

- t-Distributed Stochastic Neighbor Embedding (t-SNE)
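
To make clustering concrete, here is a minimal sketch of the K-means idea on one-dimensional data, written in plain Python. The function name `kmeans_1d`, the sample points, and the starting centers are illustrative assumptions, not part of any library; real projects would normally use an existing implementation.

```python
def kmeans_1d(points, centers, iterations=10):
    """Alternate between assigning points to the nearest center and re-averaging."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            # Assign each point to the index of its closest center.
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

# Hypothetical unlabeled data with two visible groups.
points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centers, clusters = kmeans_1d(points, centers=[1.0, 10.0])
print(centers)  # [1.5, 9.5]
```

No labels are supplied anywhere: the two groups emerge from the distances between the points alone.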

Semi-supervised Learning

Semi-supervised learning is a blend of supervised and unsupervised learning. It uses a small amount of labeled data along with a larger set of unlabeled data to improve learning accuracy. This approach is particularly useful when labeling data is costly or time-consuming.

Reinforcement Learning

Reinforcement learning involves training an agent to make a sequence of decisions by interacting with an environment. The agent receives rewards or penalties based on its actions and learns to maximize cumulative rewards over time. This type of learning is widely used in robotics, game-playing, and autonomous systems.
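
The reward-driven loop described above can be sketched with tabular Q-learning, one common reinforcement learning method. The environment here is a made-up four-cell corridor where the agent starts on the left and earns a reward of 1 for reaching the right end; the function name `train_q_table` and all parameter values are assumptions chosen for illustration.

```python
import random

def train_q_table(n_states=4, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning on a corridor: start at state 0, reward 1 at the last state."""
    rng = random.Random(seed)
    actions = (-1, +1)  # step left or step right
    goal = n_states - 1
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore a random action
            else:
                action = max(actions, key=lambda a: q[(state, a)])  # exploit best known
            next_state = min(max(state + action, 0), goal)  # walls at both ends
            reward = 1.0 if next_state == goal else 0.0
            best_next = 0.0 if next_state == goal else max(q[(next_state, a)] for a in actions)
            # Move the estimate toward reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = train_q_table()
# Greedy action in each non-goal state after training (+1 means "step right").
policy = [max((-1, +1), key=lambda a: q[(s, a)]) for s in range(3)]
print(policy)
```

After training, the greedy policy should step right in every cell, because that is the route that maximizes the discounted cumulative reward.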

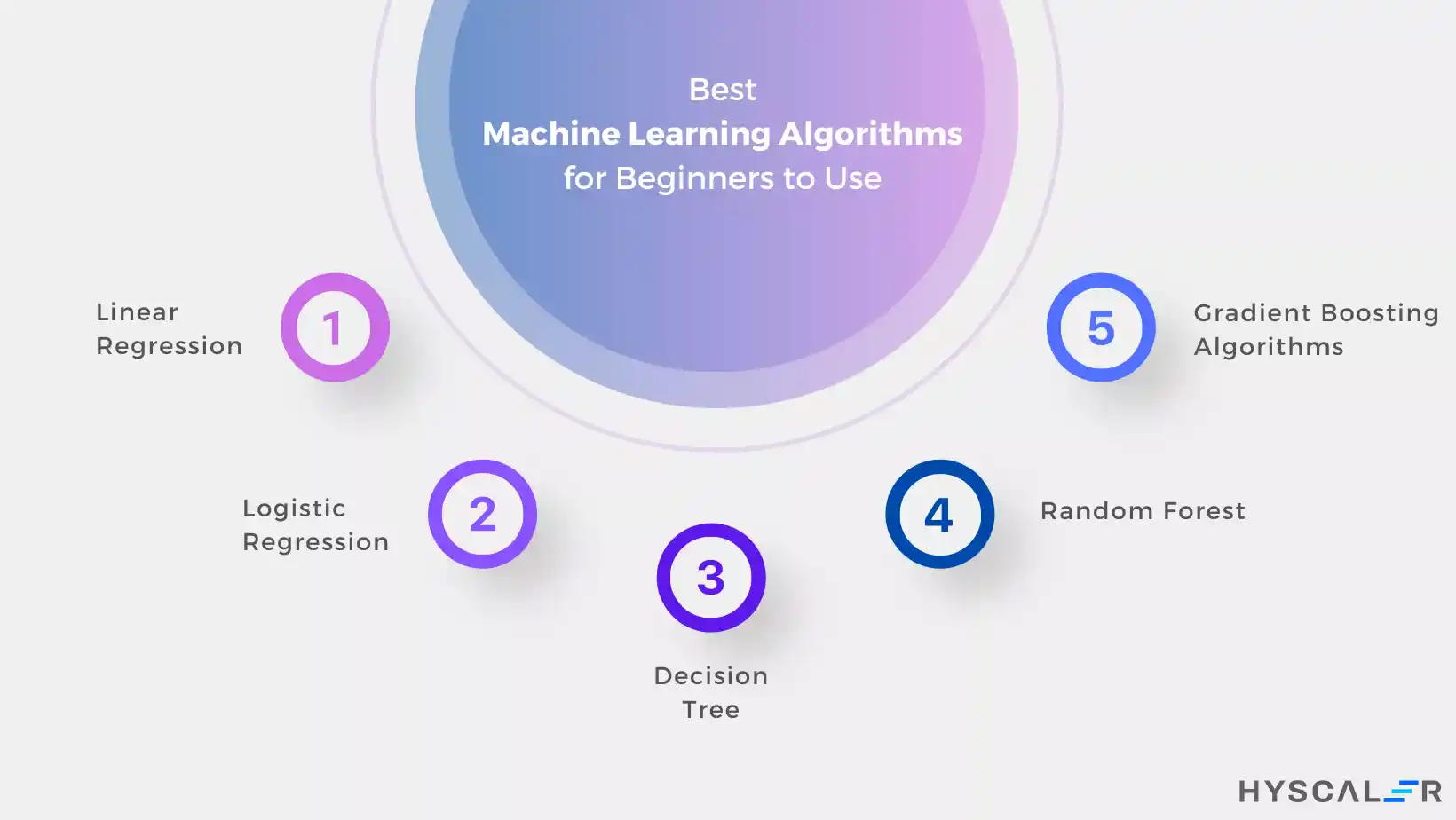

5 Best Machine Learning Algorithms for Beginners to Use in 2024

Here are our top machine learning algorithms. Narrowing the field to only five is difficult, but these are, in our view, the five best machine learning algorithms for beginners to use in 2024:

1. Linear Regression

Use Case: Predicting a continuous dependent variable based on one or more independent variables. Linear regression is commonly used for tasks such as predicting house prices, stock prices, and sales forecasting. It establishes a relationship between the dependent variable (Y) and one or more independent variables (X) using a best-fit straight line (regression line).
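
As a minimal sketch of how the best-fit line is found, the snippet below computes the least-squares slope and intercept for a single independent variable using only the Python standard library. The function name `fit_line` and the house-price numbers are hypothetical and chosen so the arithmetic is easy to follow.

```python
from statistics import mean

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Covariance of x and y divided by the variance of x gives the slope.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical data: house size (square metres) and price (thousands).
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]
slope, intercept = fit_line(sizes, prices)
predicted = slope * 100 + intercept  # predicted price for a 100 square metre house
print(slope, intercept, predicted)   # 3.0 0.0 300.0
```

The slope is the model's coefficient: in this toy data, each extra square metre adds 3 (thousand) to the predicted price.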

Advantages:

- Simplicity: Linear regression is straightforward to implement and understand, making it ideal for beginners.

- Interpretability: The model’s coefficients provide direct insight into the relationship between variables, helping to identify which factors are most influential.

- Efficiency with Small Datasets: It performs well with small datasets and can quickly yield meaningful insights.

- Good for Relationships: Useful for understanding the strength and type (positive or negative) of relationships between variables.

Limitations:

- Assumption of Linearity: It assumes a linear relationship between the dependent and independent variables, which may not always be the case.

- Sensitivity to Outliers: Outliers can significantly skew the results, leading to inaccurate predictions.

- Collinearity: A high correlation between independent variables makes the coefficient estimates unstable and hard to interpret, which weakens the model's main advantage.

2. Logistic Regression

Use Case: Binary classification problems. Logistic regression is used for tasks such as spam detection, disease diagnosis (e.g., predicting the presence or absence of a disease), and customer churn prediction. It estimates the probability that a given input point belongs to a certain class (binary outcomes).
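
The probability estimate comes from passing a weighted sum of the inputs through the sigmoid function. The following is a minimal sketch that trains a one-feature model with plain gradient descent; the names `sigmoid` and `fit_logistic`, the learning rate, the epoch count, and the spam data are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit weight w and bias b by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical data: count of suspicious words in an email, and spam (1) or not (0).
counts = [0, 1, 2, 3, 6, 7, 8, 9]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = fit_logistic(counts, labels)
p_low = sigmoid(w * 1 + b)   # probability of spam for 1 suspicious word
p_high = sigmoid(w * 8 + b)  # probability of spam for 8 suspicious words
print(round(p_low, 3), round(p_high, 3))
```

An email is then classified as spam when its probability exceeds a chosen threshold, commonly 0.5.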

Advantages:

- Simplicity: Easy to implement and understand, similar to linear regression.

- Probabilistic Interpretation: Provides probabilities for classifications, offering a measure of confidence in the predictions.

- Regularization: Techniques like L1 and L2 regularization can be applied to avoid overfitting.

- Versatility: Can be extended to multiclass classification problems through techniques like one-vs-rest (OvR).

Limitations:

- Linear Relationship Assumption: Assumes a linear relationship between the independent variables and the log odds of the dependent variable.

- Limited to Binary Output: Direct application is limited to binary classification, requiring modifications for multiclass problems.

- Performance with Complex Relationships: May not perform well when the relationship between variables is highly complex and non-linear.

3. Decision Tree

Use Case: Both classification and regression problems. Decision trees are used in scenarios like customer segmentation, credit scoring, and risk assessment. They work by splitting the data into subsets based on feature values, resulting in a tree-like model of decisions.
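
To illustrate a single split, the sketch below searches for the threshold on one numeric feature that gives the purest subsets, measured with Gini impurity (one common splitting criterion). A full decision tree repeats this search recursively on each subset. The function names and the credit data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (0.0 means perfectly pure)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best split on one feature."""
    best_threshold, best_score = None, float("inf")
    candidates = sorted(set(values))
    # Try the midpoint between each pair of neighbouring feature values.
    for lo, hi in zip(candidates, candidates[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score

# Hypothetical credit data: income (thousands) and loan repaid (1) or defaulted (0).
incomes = [20, 25, 30, 45, 50, 60]
repaid = [0, 0, 0, 1, 1, 1]
threshold, impurity = best_split(incomes, repaid)
print(threshold, impurity)  # 37.5 0.0
```

The result reads as a rule a non-expert can follow: "if income is at most 37.5, predict default; otherwise predict repaid".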

Advantages:

- Interpretability: The tree structure is easy to visualize and interpret, making it accessible for non-experts.

- Handling Different Data Types: Can handle both numerical and categorical data without the need for data scaling.

- Non-parametric Nature: No assumptions about the distribution of the data, making it flexible in handling various types of data.

- Feature Importance: Provides insights into the most important features for prediction.

Limitations:

- Overfitting: Can easily overfit the training data, leading to poor generalization to new data.

- Instability: Small changes in the data, including noise, can result in completely different tree structures.

- Complexity with Large Trees: Large trees can become complex and less interpretable.

4. Random Forest

Use Case: Both classification and regression problems. Random forests are widely used in applications like fraud detection, medical diagnosis, and stock market prediction. This ensemble method trains multiple decision trees on random samples of the data and combines their outputs, by majority vote for classification or by averaging for regression, to get a more accurate and stable prediction.
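
The ensemble idea can be sketched in a few lines. This simplified version is an assumption-laden toy, not a full random forest: each "tree" is a one-split stump trained on a bootstrap sample with one randomly chosen feature, and the forest predicts by majority vote. All function names and the transaction data are hypothetical.

```python
import random
from collections import Counter

def fit_stump(rows, labels, feature):
    """Pick the threshold on one feature that misclassifies the fewest rows."""
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, feature, threshold, left_label, right_label)
    return best[1:]

def predict_stump(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label

def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # bootstrap sample with replacement
        feature = rng.randrange(len(rows[0]))           # random feature for this stump
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest

def predict_forest(forest, row):
    """Majority vote over all stumps."""
    votes = Counter(predict_stump(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]

# Hypothetical transactions: (amount, hour of day) and fraud (1) or legitimate (0).
rows = [(20, 10), (35, 14), (50, 9), (40, 16), (900, 3), (750, 2), (820, 4), (990, 1)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(rows, labels)
print(predict_forest(forest, (10, 12)), predict_forest(forest, (1000, 1)))
```

Because every stump sees a slightly different sample, an unlucky sample only affects one vote among many, which is where the extra stability comes from.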

Advantages:

- Reduction in Overfitting: By averaging the results of multiple trees, random forests reduce the risk of overfitting.

- Robustness: Handles large datasets and high-dimensional data effectively.

- Feature Importance: Provides estimates of feature importance, helping to identify the most influential variables.

- Versatility: Can be used for both classification and regression tasks with high accuracy.

Limitations:

- Computational Intensity: Requires more computational power and memory, especially with a large number of trees.

- Less Interpretability: While the ensemble approach improves accuracy, it makes the model less interpretable compared to a single decision tree.

- Training Time: Can be slower to train compared to simpler models.

5. Gradient Boosting Algorithms (including AdaBoost)

Use Case: Both classification and regression problems. Boosting algorithms, such as XGBoost (a gradient boosting implementation) and AdaBoost (an earlier boosting method that reweights misclassified examples), are used in applications like predictive analytics and recommendation systems, and they are a frequent choice in winning Kaggle solutions. These algorithms build models sequentially, with each new model correcting the errors of its predecessor.
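
The "each model corrects its predecessor" step can be sketched as plain gradient boosting for regression with squared error, where every new stump is fitted to the current residuals. This is an illustrative toy rather than XGBoost or AdaBoost; the function names, the number of rounds, the learning rate, and the data are assumptions.

```python
def fit_stump(xs, residuals):
    """Find the split on x that best predicts the residuals with two constants."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the largest value so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def fit_boosted_model(xs, ys, rounds=50, learning_rate=0.3):
    base = sum(ys) / len(ys)  # start from the mean prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]  # errors of the model so far
        threshold, left_mean, right_mean = fit_stump(xs, residuals)
        stumps.append((threshold, left_mean, right_mean))
        # Add a damped correction from the new stump to every prediction.
        preds = [p + learning_rate * (left_mean if x <= threshold else right_mean)
                 for x, p in zip(xs, preds)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(left if x <= t else right for t, left, right in stumps)

# Hypothetical non-linear data: y = x squared.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 4, 9, 16, 25, 36]
model = fit_boosted_model(xs, ys)
baseline_mse = sum((y - sum(ys) / len(ys)) ** 2 for y in ys) / len(ys)
boosted_mse = sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(baseline_mse, boosted_mse)
```

Each stump on its own is a weak learner, but the sum of many small corrections tracks the curve far better than the constant baseline. The learning rate and the number of rounds are the parameters that need tuning to avoid overfitting.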

Advantages:

- High Performance: Often outperforms other models by combining multiple weak learners to form a strong learner.

- Flexibility: Can handle various types of data and problems (classification, regression).

- Feature Importance: Offers insights into feature importance, similar to random forests.

- Handling Complex Patterns: Effective at capturing complex relationships and interactions within the data.

Limitations:

- Overfitting Risk: Can overfit if the number of boosting rounds is too large or if the learning rate is not properly tuned.

- Computational Cost: More computationally intensive and requires careful parameter tuning.

- Longer Training Times: Training can be time-consuming, especially with large datasets.

Conclusion

Choosing the right machine learning algorithm is a critical step in your journey into machine learning. The five algorithms discussed here (Linear Regression, Logistic Regression, Decision Tree, Random Forest, and Gradient Boosting) are excellent starting points for beginners in 2024. Each offers unique strengths and can be applied to a variety of tasks, helping you build a solid foundation in machine learning.

FAQs

1. What is an algorithm in Machine Learning?

A machine learning algorithm is a technique based on statistical concepts that enables computers to learn from data, discover patterns, make predictions, or complete tasks without explicit programming. These algorithms are broadly classified into supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

2. What are the types of Machine Learning?

There are four main types of machine learning:

- Supervised Learning

- Unsupervised Learning

- Semi-supervised Learning

- Reinforcement Learning

3. Which ML algorithm is best for prediction?

The ideal machine learning algorithm for prediction depends on various factors, including the nature of the problem, the type of data, and specific requirements. Popular algorithms for prediction tasks include Support Vector Machines (SVM), Random Forests, and gradient-boosting methods. The best algorithm should be chosen based on testing and evaluation for the specific problem and dataset.

4. What are 10 popular Machine Learning algorithms?

Here is a list of 10 commonly used machine learning algorithms:

- Linear Regression

- Logistic Regression

- SVM (Support Vector Machine)

- kNN (K-Nearest Neighbors)

- Decision Tree

- Random Forest

- Naive Bayes

- PCA (Principal Component Analysis)

- Apriori algorithm

- K-Means Clustering