Reference resolution is a long-standing challenge in Natural Language Processing (NLP): working out what words like “it” or “that” refer to in a conversation. The referent may be something mentioned earlier in the conversation, or something visible on the user’s screen.

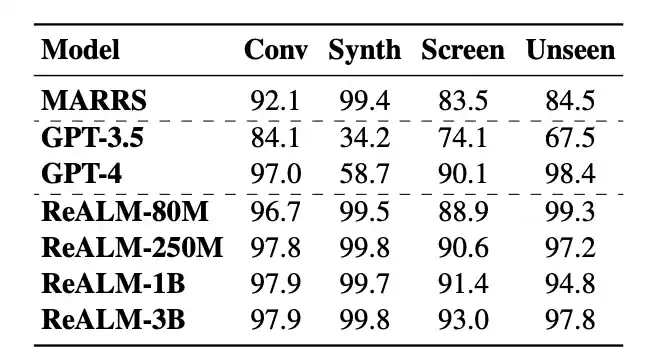

Traditionally, large language models (LLMs) have struggled with resolving references, especially for non-conversational elements like objects on a screen. Existing solutions, such as MARRS, rely on complex models that require significant computing power, limiting their practicality.

Apple’s Breakthrough: ReALM

Apple researchers have introduced an approach called Reference Resolution As Language Modeling (ReALM). It treats reference resolution as a language modeling problem: the user’s screen is reconstructed as text from parsed entities and their locations, so a language model can reason about it directly.

Here’s how it works:

- Understanding the Screen: It analyzes the user’s screen, identifying elements like buttons, text boxes, and images.

- Building a Textual Representation: These elements are then converted into a textual description, essentially creating a “textual snapshot” of the screen.

- Context for the LLM: This textual representation is fed into an LLM, which can then resolve references made in conversation against what is on the user’s screen.
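The steps above can be sketched in a few lines of Python. This is a minimal illustration and not Apple’s implementation: the `ScreenEntity` type, the `screen_to_text` function, and the `line_margin` threshold are all hypothetical names chosen for this example. It assumes each parsed element comes with its text and the centre of its bounding box, groups elements that sit at roughly the same height into one line, and separates lines with newlines and elements with tabs.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    # Hypothetical container for one parsed on-screen element.
    text: str   # visible text of the element
    x: float    # horizontal centre of its bounding box
    y: float    # vertical centre of its bounding box


def screen_to_text(entities, line_margin=10.0):
    """Render parsed entities as text: one row per visual line, tab-separated."""
    # Order entities top-to-bottom, then left-to-right.
    ordered = sorted(entities, key=lambda e: (e.y, e.x))
    lines = []
    current = []
    for entity in ordered:
        # Start a new line when the entity sits clearly below the current row.
        if current and abs(entity.y - current[0].y) > line_margin:
            lines.append(current)
            current = []
        current.append(entity)
    if current:
        lines.append(current)
    # Within each line, read left-to-right.
    return "\n".join(
        "\t".join(e.text for e in sorted(line, key=lambda e: e.x))
        for line in lines
    )


# Made-up screen contents, for illustration only.
screen = [
    ScreenEntity("Joe's Pizza", 40, 20),
    ScreenEntity("Open now", 200, 22),
    ScreenEntity("Call 555-0100", 40, 60),
    ScreenEntity("Directions", 200, 58),
]
snapshot = screen_to_text(screen)
print(snapshot)
```

The resulting string keeps the rough layout of the screen (what is beside what, and what is above what) in a form that a text-only model can read.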

This unique approach offers several advantages:

- Lighter and Faster: Unlike vision-based models, ReALM relies solely on text, making it significantly lighter and faster to run.

- Superior Performance: Despite its reduced complexity, it is reported to outperform earlier systems such as MARRS and to perform comparably to GPT-4 on tasks involving screen references.

- Real-World Applications: This technology could change how virtual assistants and chatbots understand user intent. Imagine a scenario where you ask your assistant, “Can you call that business?” while looking at a website on your phone. ReALM would be able to understand that “that business” refers to the business whose phone number is displayed on the website, and initiate the call.
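To make the “Can you call that business?” scenario concrete, here is a rough sketch of how candidate entities might be presented to a language model and how its reply could be mapped back to an entity. The prompt wording, the `build_prompt` and `parse_selection` helpers, and the example entities are assumptions made for illustration; they are not taken from Apple’s system, and no real model is called.

```python
import re


def build_prompt(candidates, request):
    """Number each candidate entity and append the user's request."""
    tagged = "\n".join(
        f"[{i}] {text}" for i, text in enumerate(candidates, start=1)
    )
    return (
        "Entities on screen:\n"
        f"{tagged}\n"
        f"User request: {request}\n"
        "Relevant entity numbers:"
    )


def parse_selection(model_output, candidates):
    """Map the numbers in a model reply back to the candidate entities."""
    picked = []
    for match in re.findall(r"\d+", model_output):
        index = int(match)
        # Ignore numbers that do not correspond to a listed candidate.
        if 1 <= index <= len(candidates):
            picked.append(candidates[index - 1])
    return picked


# Made-up entities, for illustration only.
candidates = ["Joe's Pizza", "555-0100", "123 Main St"]
prompt = build_prompt(candidates, "Can you call that business?")

# "2" stands in for the reply a real model might give to the prompt.
selected = parse_selection("2", candidates)
print(prompt)
print(selected)
```

Framing the task as picking numbered candidates keeps the model’s output short and easy to validate, since any number outside the candidate list can simply be discarded.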

The Future of Reference Resolution

Apple’s ReALM marks a significant leap forward in the field of reference resolution. By leveraging the power of LLMs and innovative textual representation, it paves the way for lighter, faster, and more accurate AI assistants that can truly understand the context of user interactions, both within conversations and on their screens.

Beyond Screens: ReALM’s Potential for Conversational AI

While ReALM’s ability to understand on-screen references is impressive, its applications extend beyond the confines of your device’s display. Here’s how this technology can transform the way we interact with AI assistants:

- Enhanced Natural Language Understanding: By incorporating the user’s environment through textual representation, ReALM can provide a more comprehensive understanding of conversational context. Imagine having a conversation with a virtual assistant about a restaurant you just saw on your phone. It could potentially understand references like “that place with the patio” or “the Italian restaurant we just looked at” by accessing your browsing history and generating a relevant textual representation.

- Improved Task Completion: The ability to understand references to physical objects in the real world can significantly improve the capabilities of AI assistants. For instance, you could ask your assistant to “turn off the light by the window” or “adjust the thermostat over there,” with it interpreting these references based on environmental sensors or smart home integrations.

- Personalized User Experiences: ReALM’s ability to understand context opens doors for highly personalized user experiences. Imagine an AI assistant that can suggest relevant actions or information based on what you’re currently looking at. For example, if you’re browsing a news article about a sporting event, an assistant built on ReALM could suggest purchasing tickets or setting a reminder to watch the game.

Challenges and the Road Ahead

While ReALM presents exciting possibilities, some challenges remain:

- Privacy Concerns: The ability to access and interpret on-screen content raises privacy concerns. Apple will need to ensure that user data is protected and users have control over how ReALM utilizes information.

- Integration with Existing Systems: For ReALM to reach its full potential, seamless integration with existing AI systems and virtual assistants is crucial.

- Malleable and Dynamic Environments: The real world can be messy and unpredictable. ReALM’s ability to interpret references in constantly changing environments will need further development.

Despite these challenges, Apple’s ReALM represents a significant step forward in reference resolution technology. With continued research and development, ReALM has the potential to transform the way we interact with AI, ushering in a new era of intuitive, context-aware, and truly intelligent virtual assistants.