
Meta, Microsoft, and OpenAI have all said they will use AMD GPUs, a notable shift in AI hardware. Meta is adopting AMD’s MI300X for AI workloads, OpenAI is adding support for it in Triton 3.0, and Microsoft is offering it in a new Azure virtual machine series called ND MI300x v5. Oracle also plans to use the MI300X for its upcoming AI service. Together, these commitments show how important AMD GPUs are becoming in AI.

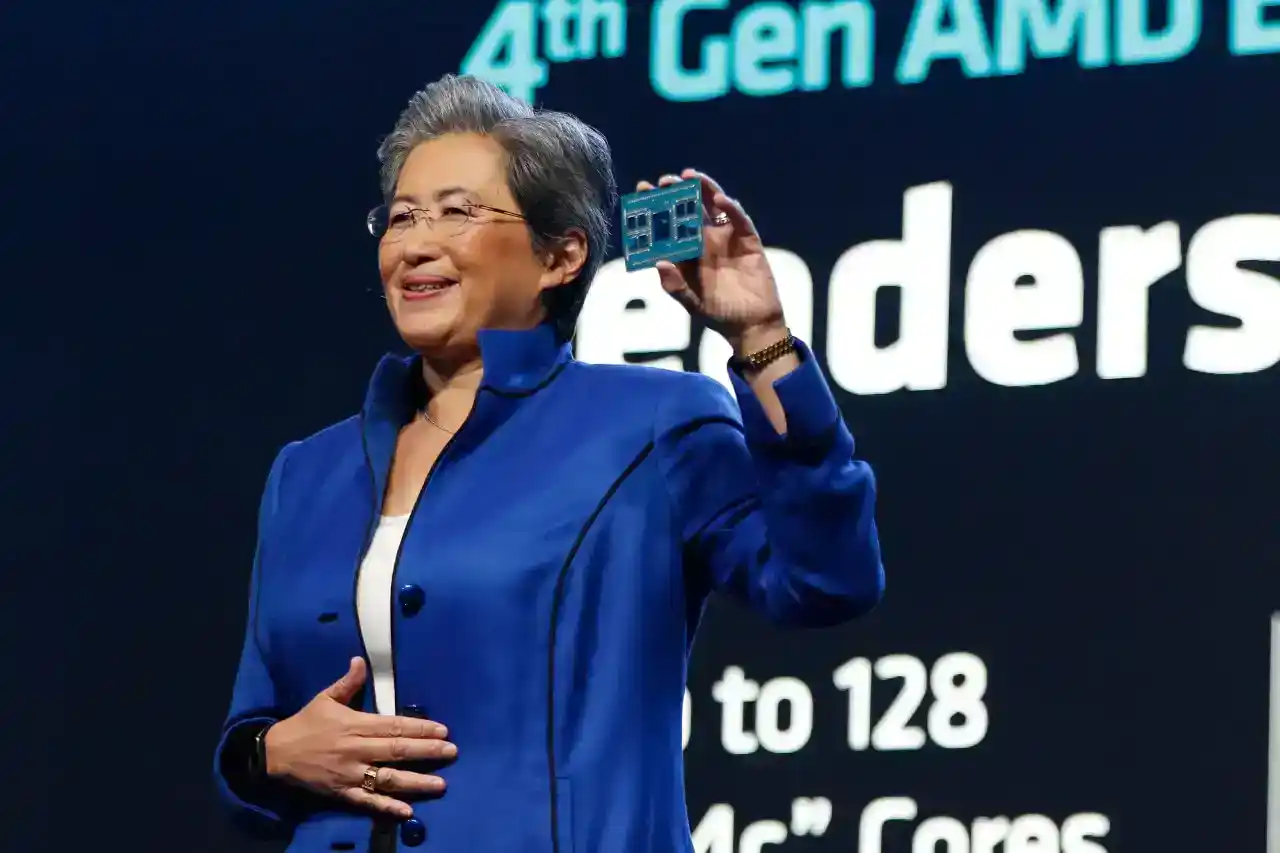

AMD AI Event

Meta, OpenAI, and Microsoft announced at an AMD investor event that they will use AMD’s new AI chip, the Instinct MI300X. The announcements signal that technology companies are actively looking for alternatives to the costly Nvidia graphics processors that have been crucial for building and running AI programs such as OpenAI’s ChatGPT.

If tech and cloud companies adopt AMD’s new high-end chip to build and run AI models when it arrives next year, it could lower the cost of developing those models and put competitive pressure on Nvidia’s growing AI chip sales.

On Wednesday, AMD CEO Lisa Su said the focus is primarily on large-scale hardware for cloud computing, such as powerful processors and graphics processing units (GPUs).

AMD GPUs’ Leap Forward: Unveiling the MI300X and HBM3 Advancements

The MI300X is built on a new architecture, a change that often brings performance gains. Its standout feature is 192GB of HBM3, a high-performance memory type that transfers data faster and can hold larger AI models. With this memory, the GPU can move large amounts of data quickly, which suits it to running complex AI models.
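To make the memory claim concrete, here is a rough back-of-the-envelope sketch in Python of how many model parameters could fit in 192GB. It rests on several assumptions that are not from AMD: 192GB is treated as 192 × 10^9 bytes, only the model weights are counted (activations, caches, and framework overhead are ignored), and the model sizes of 7, 70, and 175 billion parameters are purely illustrative. The function names are hypothetical.

```python
# Assumption: 192 GB is treated as 192 * 10**9 bytes. Usable capacity on real
# hardware is lower, and this sketch counts model weights only.
HBM3_CAPACITY_BYTES = 192 * 10**9

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_bytes(num_params: int, precision: str) -> int:
    """Return the bytes needed to hold the model weights alone."""
    return num_params * BYTES_PER_PARAM[precision]


def fits_on_one_gpu(num_params: int, precision: str,
                    capacity_bytes: int = HBM3_CAPACITY_BYTES) -> bool:
    """Return True if the weights alone fit in the given memory capacity."""
    return weights_bytes(num_params, precision) <= capacity_bytes


def max_params(precision: str,
               capacity_bytes: int = HBM3_CAPACITY_BYTES) -> int:
    """Return the largest parameter count whose weights fit in memory."""
    return capacity_bytes // BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # Illustrative model sizes (hypothetical, not taken from the article).
    for num_params in (7 * 10**9, 70 * 10**9, 175 * 10**9):
        for precision in BYTES_PER_PARAM:
            needed_gb = weights_bytes(num_params, precision) / 10**9
            verdict = "fits" if fits_on_one_gpu(num_params, precision) else "does not fit"
            print(f"{num_params / 10**9:.0f}B params at {precision}: "
                  f"{needed_gb:.0f} GB, {verdict} in 192 GB")
```

Under these assumptions, a 70-billion-parameter model at 16-bit precision needs about 140GB for its weights and fits on a single 192GB GPU, while a 175-billion-parameter model at the same precision needs about 350GB and would have to be split across several GPUs.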

Su directly compared the MI300X, and the systems built with it, to Nvidia’s main AI GPU, the H100.

Su said this performance translates directly into a better user experience: as queries become more complex, the model still has to respond quickly.

The main question for AMD is whether businesses that have relied on Nvidia for their GPUs will invest the time and money needed to add a second GPU supplier. Su acknowledged that adopting AMD takes significant effort.

AMD recently shared updates to ROCm, its software suite meant to rival Nvidia’s CUDA. The improvements address a significant gap that previously led many AI developers to prefer Nvidia, and they could make AMD GPUs a more competitive choice.

Price will also play a crucial role. AMD did not disclose pricing for the MI300X on Wednesday, but Nvidia’s chips can cost around $40,000 each. Su told reporters that, to persuade customers to buy, AMD’s chip would have to cost less than Nvidia’s both to purchase and to operate.

Who says they will use the MI300X?

AMD said on Wednesday that it has signed up some of the companies with the greatest need for GPUs. Meta and Microsoft, which a report by research firm Omdia identified as big buyers of Nvidia H100 GPUs in 2023, are both adopting AMD’s GPUs.

Meta said it will use MI300X GPUs for AI inference workloads, including processing AI stickers, image editing, and running its assistant.

Kevin Scott, Microsoft’s Chief Technology Officer, said the Azure cloud service will offer access to MI300X chips. Oracle’s cloud platform will also use them.

OpenAI said it will support AMD GPUs in Triton, one of its software products. Triton is not a large language model like GPT; it is a tool used in AI research to take advantage of chip capabilities.

AMD is not yet forecasting a major sales surge for the chip: it projects about $2 billion in total data center GPU revenue in 2024. By contrast, Nvidia’s data center sales exceeded $14 billion in the most recent quarter alone, although that figure includes chips other than GPUs.

Nevertheless, AMD projects that the total market for AI GPUs could reach $400 billion within the next four years, twice the company’s previous estimate. The figure shows how high expectations are and how strong demand for high-performance AI chips has become, which is why AMD is now directing investor attention toward this product line.

Su also told reporters that AMD does not need to beat Nvidia to do well in the market.

“It is evident that Nvidia currently holds the majority of the market,” Su stated to reporters, specifically referring to the AI chip market. “We anticipate this market to exceed $400 billion by 2027, and we are confident in securing a significant portion of it.”