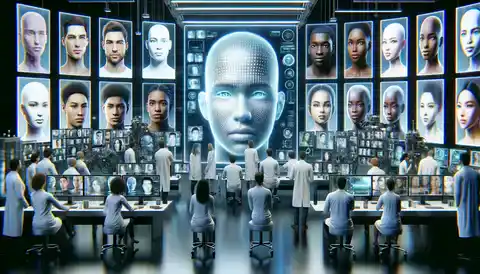

Studies have found that AI-generated photographs of White faces are judged to be "more authentic" than photographs of real human faces.

A recent study by researchers from Australia, the U.K., and the Netherlands found that people tend to perceive AI-generated images of White faces as more human than photographs of real people.

Recent evidence indicates that AI-generated faces have become realistic enough to be virtually indistinguishable from human faces. However, the algorithms that generate these faces are trained disproportionately on White faces, so White AI faces appear exceptionally realistic.

Experiments on AI-Generated Faces

In Experiment 1, which involved 124 adults, the researchers reanalyzed previously published data and showed that White AI faces are judged to be human more often than actual human faces. They call this phenomenon AI hyperrealism. Paradoxically, the participants who made the most errors on this task were also the most confident, a pattern known as the Dunning-Kruger effect.

In Experiment 2, which included 610 adults, the researchers drew on face-space theory and on participants' qualitative reports to identify specific facial attributes that distinguish AI faces from human faces. Participants misinterpreted these attributes as signs of a real face, which produced AI hyperrealism.

Machine learning, by contrast, could use these same attributes to tell AI faces from human faces with high accuracy. The findings show how psychological theory can deepen our understanding of AI outputs and offer guidance for reducing bias in AI algorithms, thereby promoting the ethical use of AI.

Ethical Implications of Bias in AI-Generated Faces

The AI revolution is bringing about a significant societal transformation (Xie, 2023). One notable part of this transformation is the creation of AI-generated faces that closely resemble real humans, which has raised public concern that AI could distort the truth (Devlin, 2023).

The study found that approximately 66% of AI-generated images of White faces were judged to be human, but the same was not true for faces of people of color. This may be because the algorithm used to create the AI faces was trained primarily on images of White individuals.

Dr. Amy Dawel of the Australian National University’s College of Health and Medicine suggests that this happens because biases that already exist in the world are built into the algorithms during training.

Participants were shown 100 real human faces and 100 AI-generated faces in random order. The AI images were mistaken for real people 61% of the time, whereas the real human faces were judged to be real only 51% of the time.

Scientists have observed that AI-generated faces now appear even more realistic than real human faces. This phenomenon, called "hyperrealism," makes it difficult to distinguish genuine faces from deepfaked ones. As a result, people often fail to notice the deception and confidently accept these fabricated faces as authentic.

Perceived Authenticity of AI-Generated Faces: Impact and Deception

According to Elizabeth Miller, a co-author of the study from the Australian National University, it is alarming that the people who believed the AI-generated faces were genuine were, paradoxically, the most confident in their judgments. The researchers argue that their findings have significant real-world implications, particularly for identity theft and misinformation, because people can easily be deceived by deepfaked digital impostors.

People whom AI impostors deceive into believing they are interacting with real individuals are unaware that any deception is taking place.